ChatGPT for Self-Diagnosis: AI Is Changing the Way We Answer Our Own Health Questions

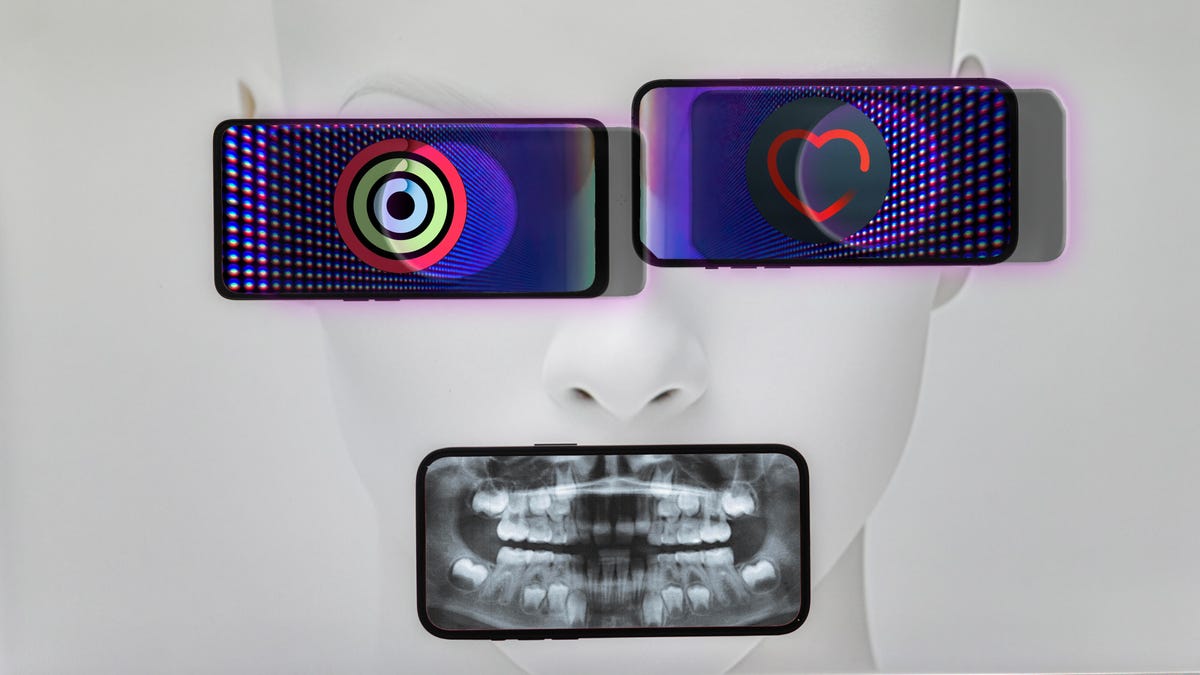

For better and for worse, AI gives us a new way to take symptom-checking into our own hands. For those with chronic health conditions, it's a new tool.

Katie Sarvela was sitting in her bedroom in Nikiski, Alaska, on top of a moose-and-bear-themed bedspread, when she entered some of her earliest symptoms into ChatGPT.

The ones she remembers describing to the chatbot include half of her face feeling like it's on fire, then sometimes being numb, her skin feeling wet when it's not wet and night blindness.

ChatGPT's synopsis?

"Of course it gave me the 'I'm not a doctor, I can't diagnose you,'" Sarvela said. But then: multiple sclerosis. An autoimmune disease that attacks the central nervous system.

Katie Sarvela.

Now 32, Sarvela started experiencing MS symptoms when she was in her early 20s. She gradually came to suspect it was MS, but she still needed another MRI and lumbar puncture to confirm what she and her doctor suspected. While it wasn't a diagnosis, the way ChatGPT jumped to the right conclusion amazed her and her neurologist, according to Sarvela.

ChatGPT is an AI-powered chatbot built on a large language model trained on huge amounts of text, much of it from the internet, and it answers the questions you ask in a conversational tone. It set off a profusion of generative AI tools throughout 2023, and the version based on the GPT-3.5 large language model is available to everyone for free. The way it can quickly synthesize information and personalize results raises the bar set by "Dr. Google," researchers' term for the act of people looking up their symptoms online before they see a doctor. More often we call it "self-diagnosing."

For people like Sarvela, who've lived for years with mysterious symptoms before getting a proper diagnosis, having a more personalized search to bounce ideas off of may help save precious time in a health care system where long wait times, medical gaslighting, potential biases in care, and communication gaps between doctor and patient lead to years of frustration.

But giving a tool or new technology (like this magic mirror or any of the other AI tools that came out of this year's CES) any degree of power over your health has risks. A big limitation of ChatGPT, in particular, is the chance that the information it presents is made up (the term used in AI circles is a "hallucination"), which could have dangerous consequences if you take it as medical advice without consulting a doctor. But according to Dr. Karim Hanna, chief of family medicine at Tampa General Hospital and program director of the family medicine residency program at the University of South Florida, there's no contest between ChatGPT and Google search when it comes to diagnostic power. He's teaching residents how to use ChatGPT as a tool. And though it won't replace the need for doctors, he thinks chatbots are something patients could be using too.

"Patients have been using Google for a long time," Hanna said. "Google is a search."

"This," he said, meaning ChatGPT, "is so much more than a search."

Is 'self-diagnosing' actually bad?

There's a list of caveats to keep in mind when you go down the rabbit hole of Googling a new pain, rash, symptom or condition you saw in a social media video. Or, now, popping symptoms into ChatGPT.

The first is that not all health information is created equal: there's a difference between information published by a primary medical source like Johns Hopkins and someone's YouTube channel, for example. Another is the possibility you could develop "cyberchondria," or anxiety over finding information that's not helpful, for instance diagnosing yourself with a brain tumor when your head pain is more likely from dehydration or a cluster headache.

Arguably the biggest caveat is the risk of false reassurance from fake information. You might overlook something serious because you searched online and came to the conclusion that it's no big deal, without ever consulting a real doctor. Importantly, self-diagnosing a mental health condition may bring up even more limitations, given the inherent difficulty of translating mental processes or subjective experiences into a treatable health condition. And taking something as sensitive as medication information from ChatGPT, given that chatbots hallucinate, could be particularly dangerous.

But all that being said, consulting Dr. Google (or ChatGPT) for general information isn't necessarily a bad thing, especially when you consider that being better informed about your health is largely a good thing, as long as you don't stop at a simple internet search. In fact, a 2017 study by researchers in Europe found that of people who reported searching online before their doctor appointment, about half still went to the doctor. And the more frequently people consulted the internet for specific complaints, the more likely they were to report feeling reassured.

A 2022 survey from PocketHealth, a medical imaging sharing platform, found that the people it calls "informed patients" get their health information from a variety of sources: doctors, the internet, articles and online communities. About 83% of these patients reported relying on their doctor, and roughly 74% reported relying on internet research. The survey was small and limited to PocketHealth customers, but it suggests multiple streams of information can coexist.

Lindsay Allen, a health economist and health services researcher with Northwestern University, said in an email that the internet "democratizes" medical information, but that it can also lead to anxiety and misinformation.

"Patients often decide whether to visit urgent care, the ER, or wait for a doctor based on online information," Allen said. "This self-triage can save time and reduce ER visits but risks misdiagnosis and underestimating serious conditions."

An example of a question you could ask ChatGPT before your next doctor's appointment. Specifying your age, sex, preexisting health condition or anything specific in your back-and-forth with the chatbot will make its suggestions more useful.

How are doctors using AI?

Research published in the Journal of Medical Internet Research looked at how accurate ChatGPT was at "self-diagnosing" five different orthopedic conditions (carpal tunnel and a few others). It found that the chatbot was "inconsistent" in its diagnoses: over a five-day period in which researchers entered the same questions, it got carpal tunnel right every time, but the rarer cervical myelopathy only 4% of the time. It also wasn't consistent from day to day with the same question, meaning you run the risk of getting a different answer each time you bring the same problem to a chatbot. The study's authors reasoned that ChatGPT is a "potential first step" for health care, but that it can't be considered a reliable source of an accurate diagnosis.

Results from a study published this month in JAMA Pediatrics found that ChatGPT 3.5 gave the wrong diagnosis for pediatric cases the majority of the time. While ChatGPT did correctly identify the affected organ system in more than half of its misdiagnoses, it wasn't specific enough and missed connections doctors are typically able to see, underscoring the "invaluable role" of clinical experience, the study's authors wrote.

This sums up the opinion of the doctors we spoke with, who see value in ChatGPT as a complementary diagnostic tool, rather than a replacement for doctors or a real diagnosis. One of them is Hanna, who teaches his residents when to call on ChatGPT. He says the chatbot assists doctors with differential diagnoses, the process of sorting through the possible causes of vague complaints that have way more than one potential explanation. Think stomach aches and headaches.

When using ChatGPT for a differential diagnosis, Hanna will start by getting the patient's storyline and their lab results and then throw it all into ChatGPT. (He currently uses 4.0 but has used versions 3 and 3.5. He's also not the only one asking future doctors to get their hands on it.)

But actually getting a diagnosis may only be one part of the problem, according to Dr. Kaushal Kulkarni, an ophthalmologist and co-founder of a company that uses AI to analyze medical records. He says he uses GPT-4 in complex cases where he has a "working diagnosis," and he wants to see up-to-date treatment guidelines and the latest research available. An example of a recent search: "What is the risk of hearing damage with Tepezza for patients with thyroid eye disease?" But he sees more AI power in what happens before and after the diagnosis.

"My feeling is that many non-clinicians think that diagnosing patients is the problem that will be solved by AI," Kulkarni said in an email. "In reality, making the diagnosis is usually the easy part."

Using ChatGPT could help you communicate with your doctor

Two years ago, Andoeni Ruezga was diagnosed with endometriosis, a condition where tissue similar to the lining of the uterus grows outside the uterus, often causing pain and excess bleeding, and one that's notoriously difficult to identify. She thought she understood where, exactly, the adhesions were growing in her body, until she didn't.

So Ruezga contacted her doctor's office to have them send her the paperwork of her diagnosis, copy-pasted all of it into ChatGPT and asked the chatbot (Ruezga uses GPT-4) to "read this diagnosis of endometriosis and put it in simple words for a patient to understand."

Based on what the chatbot spit out, she was able to break down a diagnosis of endometriosis and adenomyosis.

Andoeni Ruezga.

"I'm not trying to blame doctors at all," Ruezga explained in a TikTok. "But we're at a point where the language barrier between medical professionals and regular people is very high."

In addition to using ChatGPT to explain an existing condition, like Ruezga did, arguably the best way to use ChatGPT as a "regular person" without a medical degree or training is to make it help you find the right questions to ask, according to the different medical experts we spoke with for this story.

Dr. Ethan Goh, a physician and AI researcher at Stanford Medicine in California, said that patients may benefit from using ChatGPT (or similar AI tools) to help them frame what many doctors know as the ICE method: identifying ideas about what you think is going on, expressing your concerns and then making sure you and your doctor hit your expectations for your visit.

For example, if you had high blood pressure during your last doctor visit and have been monitoring it at home and it's still high, you could ask ChatGPT "how to use the ICE method if I have high blood pressure."

As a primary care doctor, Hanna also wants people to use ChatGPT as a tool to narrow down questions to ask their doctor, specifically to make sure they're on track for the right preventive care, including checking which screenings they might be due for. But even as optimistic as Hanna is about bringing ChatGPT in as a new tool, there are limits to interpreting even the best ChatGPT answers. For one, treatment and management are highly specific to an individual patient, and a chatbot won't replace the need for treatment plans from humans.

"Safety is important," Hanna said of patients using a chatbot. "Even if they get the right answer out of the machine, out of the chat, it doesn't mean that it's the best thing."

Two of ChatGPT's big problems: Showing its sources and making stuff up

So far, we've mostly talked about the benefits of using ChatGPT as a tool to navigate a thorny health care system. But it has a dark side too.

When a person or published article is wrong and tries to tell you they're not, we call that misinformation. When ChatGPT does it, we call it a hallucination. When it comes to your health care, that's a big deal, and it's worth remembering that the chatbot is capable of it.

According to one study from this summer published in JAMA Ophthalmology, chatbots may be especially prone to hallucinating fake references — in ophthalmology scientific abstracts generated by chatbots in the study, 30% of references were hallucinated.

What's more, we might be letting ChatGPT off the hook when we say it's "hallucinating," schizophrenia researcher Dr. Robin Emsley wrote in an editorial for Nature. When he toyed with ChatGPT and asked it research questions, it answered basic questions about methodology well and produced many reliable sources. Until it didn't. After cross-referencing the research on his own, Emsley said that the chatbot was inappropriately or falsely attributing research.

"The problem therefore goes beyond just creating false references," Emsley wrote. "It includes falsely reporting the content of genuine publications."

Misdiagnosis can be a lifelong problem. Can AI help?

When Sheila Wall had the wrong ovary removed about 40 years ago, it was just one experience in a long line of instances of being burned by the medical system. (One ovary had a bad cyst; the other was removed in the US, where she was living at the time. To get the right one removed, she had to go back up to Alberta, Canada, where she still lives today.)

Sheila Wall.

Wall has multiple health conditions ("about 12," by her account), but the one causing most of her problems is lupus, which she was diagnosed with at age 21 after years of being told "you just need a nap," she explained with a laugh.

Wall is the admin of the online group "Years of Misdiagnosed or Undiagnosed Medical Conditions," where people go to share odd new symptoms, research they've found to help narrow down their health problems, and use each other as a resource on what to do next. Most people in the group, by Wall's estimate, have dealt with medical gaslighting, or being disbelieved or dismissed by a doctor. Most also know where to go for research, because they have to, Wall said.

"Being undiagnosed is a miserable situation, and people need somewhere to talk about it and get information," she explained. Living with a health condition that hasn't been properly treated or diagnosed forces people to be more "medically savvy," Wall added.

"We've had to do the research ourselves," she said. These days, Wall does some of that research on ChatGPT. She finds it easier than a regular internet search because you can type questions related to lupus ("If it's not lupus…" or "Can … happen with lupus?") instead of having to retype, because the chatbot saves conversations.

According to one estimate, 30 million people in the US are living with an undiagnosed disease. People who've lived for years with a health problem and no real answers may benefit most from new tools that allow doctors more access to information on complicated patient cases.

How to use AI at your next doctor's appointment

Based on the advice of the doctors we spoke with, below are some examples of how you can use ChatGPT in preparation for your next doctor's appointment. That is, using ChatGPT as a conversation-starter and tool to help narrow down your health concerns. The first example, laid out below, uses the ICE method for patients who've lived with chronic illness.

ChatGPT 3.5's advice on discussing your ideas, concerns and expectations -- called the ICE method -- with a doctor, under the premise you're living with a chronic undiagnosed illness.

You can ask ChatGPT to help you prepare for conversations you want to have with your doctor, or to learn more about alternative treatments — just remember to be specific, and to think of the chatbot as a sounding board for questions that often slip your mind or you feel hesitant to bring up.

"I am a 50-year-old woman with prediabetes and I feel like my doctor never has time for my questions. How should I address these concerns at my next appointment?"

"I'm 30 years old, have a family history of heart disease and am worried about my risk as I get older. What preventive measures should I ask my doctor about?"

"The anti-anxiety medication I was prescribed isn't helping. What other therapies or medications should I ask my doctor about?"

Even with its limitations, having a chatbot available as an additional tool may save a little energy when you need it most. Sarvela, for example, would've gotten her MS diagnosis with or without ChatGPT — it was all but official when she punched in her symptoms. But living as a homesteader with her husband, two children, and a farm of ducks, rabbits and chickens, she doesn't always have the luxury of "eventually."

In her Instagram bio is the word "spoonie" — an insider term for people who live with chronic pain or disability, as described in "spoon theory." The theory goes something like this: People with chronic illness start out with the same number of spoons each morning, but lose more of them throughout the day because of the amount of energy they have to expend. For example, making coffee might cost one person one spoon, but someone with chronic illness two spoons. An unproductive doctor's visit might cost five spoons.

In the years ahead, we'll be watching to see how many spoons new technologies like ChatGPT may save those who need them most.

Editors' note: CNET is using an AI engine to help create some stories. For more, see this post.