Why You Can Trust CNET

How HDMI 2.1 makes big-screen 4K PC gaming even more awesome

There's a new HDMI standard coming out, with a feature called Game Mode VRR that combats motion blur and input lag. Here's how it supercharges the future of gaming.

An open secret in the PC gaming world is that 4K TVs double as great PC monitors. Or potentially great, anyway. Big-screen gamers have lived with many a compromise, if only to play Overwatch or Battlefield on a 65-inch display at resolutions that living room game consoles can still only dream about.

But now, thanks to the new HDMI 2.1 specification, future TVs could eliminate -- or at least diminish -- many of those compromises. The greater bandwidth possible with 2.1 means higher resolutions and higher framerates, but that's not all. The Game Mode VRR feature could potentially improve input lag, bringing TVs closer to parity with computer monitors on this important spec. It could also remove, or at least reduce, two serious visual artifacts: judder and image tearing.

With big TVs costing far, far less than big computer monitors, many gamers wonder if buying a big TV is the better option. Up until now the answer has been "usually." But once HDMI 2.1 hits, the answer could be "probably." Here's why.

HDMI (and/or 4K TV) issues

Using a TV as a monitor is hardly a new trend. Any gamer looking at the price of 4K TVs and the price of 4K monitors instantly starts to wonder... but can it run Crysis?

I've been using a TV (a projector, actually) as my main gaming display for years, and while Battlefield on a 100-inch screen is epic, it's far from a perfect solution.

There are two main issues. The first is bandwidth. The current (pre-2.1) HDMI specification maxes out at 4,096 by 2,160 pixels (4K resolution) at 60 frames per second. In the earliest days of 4K, some TVs could accept only 24 frames per second. While 4K/60 is certainly "fine," there are plenty of gamers for whom "fine" is synonymous with "bad."
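To put rough numbers on that bandwidth ceiling, here's a minimal Python sketch that estimates the uncompressed data rate of a 4,096 by 2,160 signal at a few frame rates. It assumes 24 bits per pixel (8-bit RGB), ignores the blanking intervals real HDMI signals also carry, and uses an assumed usable HDMI 2.0 throughput of about 14.4 Gbps (its 18 Gbps raw rate minus 8b/10b encoding overhead). The function name is illustrative only.

```python
# Lower-bound estimate of uncompressed video bandwidth.
# Assumptions: 24 bits per pixel, no blanking intervals, no compression.
HDMI_2_0_USABLE_GBPS = 14.4  # assumed: 18 Gbps raw x 8/10 encoding efficiency


def active_pixel_gbps(width, height, fps, bits_per_pixel=24):
    """Return the data rate of the visible pixels alone, in gigabits per second."""
    return width * height * fps * bits_per_pixel / 1e9


for fps in (24, 60, 120):
    rate = active_pixel_gbps(4096, 2160, fps)
    verdict = "fits" if rate <= HDMI_2_0_USABLE_GBPS else "does not fit"
    print(f"4096x2160 @ {fps:>3} fps: {rate:5.2f} Gbps ({verdict})")
```

Because blanking adds overhead, a real 4K/60 signal sits much closer to that 14.4 Gbps ceiling than this estimate suggests, and 4K/120 is out of reach either way.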

Part of the issue is motion blur and input lag. LCD and the current implementations of OLED have noticeable motion blur at 60 Hz. TV makers combat this with higher refresh rates: some 1080p TVs went up to 240 Hz, while current 4K TVs max out at 120 Hz. With regular video, the TV internally creates the additional frames needed to display 24- (Blu-ray) and 60- (sports) fps content on 120/240 Hz TVs. Though this can lead to the soap opera effect, motion blur is greatly reduced.
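The timing side of that frame creation can be sketched in a few lines. This Python example (the function name is hypothetical) works out, for each refresh of a 120 Hz panel, which moment between two source frames the TV has to show. It deliberately skips the hard part: real TVs estimate motion to build each in-between image, which is where the processing time, and the soap opera look, come from.

```python
import math
from fractions import Fraction


def interpolation_phases(source_fps, panel_hz, refreshes):
    """For each panel refresh, return (source frame index n, phase).

    phase is how far the image to display sits between source frame n and
    n + 1: 0 means the original frame itself, anything else must be
    synthesized (or, with interpolation off, the old frame is repeated).
    Fractions keep the arithmetic exact.
    """
    phases = []
    for r in range(refreshes):
        t = Fraction(r, panel_hz) * source_fps  # refresh time, in source frames
        n = math.floor(t)
        phases.append((n, t - n))
    return phases


for n, phase in interpolation_phases(24, 120, 6):
    print(f"refresh shows frame {n} + {phase}")
```

For 24 fps content on a 120 Hz panel, only one refresh in five lines up with an original frame; the other four have to be generated. For 60 fps content, every other refresh is new.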

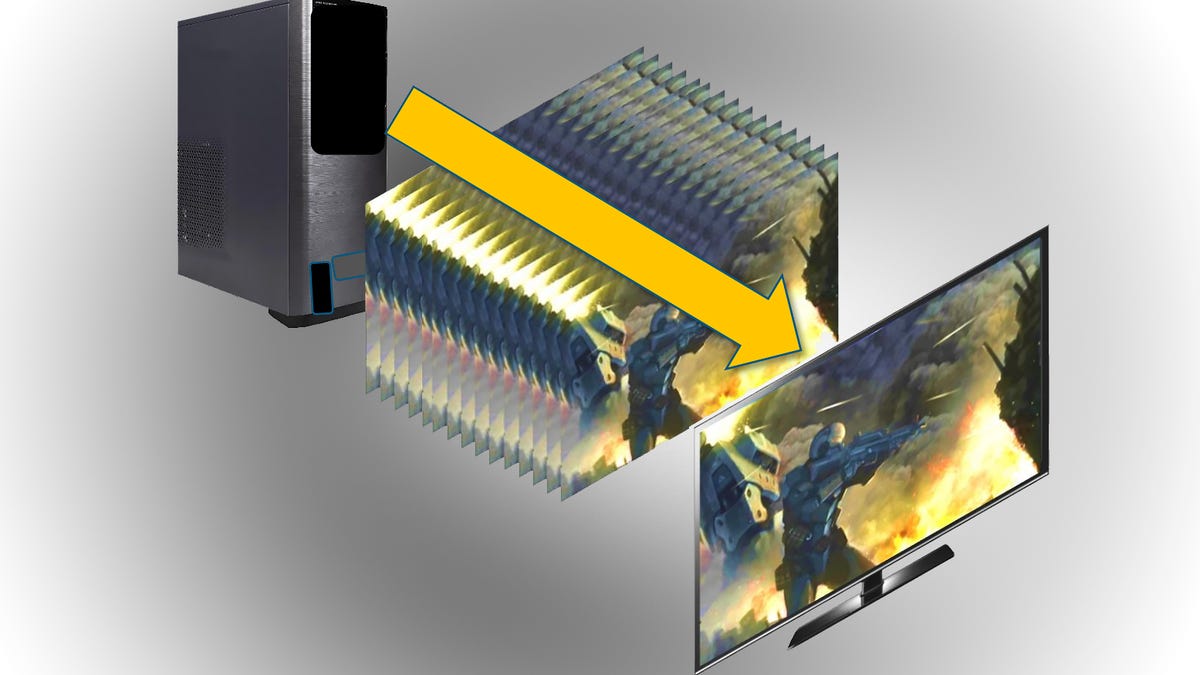

A representation of screen tearing due to a mismatch between what framerate the video card can render, and what the display needs. (Created from a photo on my Instagram)

That video processing, though, takes time. This leads to input lag, which is a gap between when you press a button on your controller, and when that action happens on screen. Worst-case scenario, in the time it takes for you to see something on screen (an enemy, say), react (press a button) and for that action to show on screen, the enemy has already shot you. Slightly annoying when playing on your own, infuriating when playing online. To put this in perspective, with Battlefield 1, I went from the bottom 10 percent of every round when using a projector with 120-millisecond input lag, to the top 10 percent by just switching to a 33 ms lag projector. It makes a big difference.
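Another way to read those lag numbers is in frames. Assuming the game runs at 60 fps (the frame rate isn't stated above), this small Python sketch converts milliseconds of lag into how many frames go by before your input appears. The function name is illustrative.

```python
def lag_in_frames(lag_ms, fps=60.0):
    """Convert input lag in milliseconds into frames at the given frame rate."""
    frame_ms = 1000.0 / fps  # about 16.7 ms per frame at 60 fps
    return lag_ms / frame_ms


for lag_ms in (120, 33):
    print(f"{lag_ms} ms of lag = {lag_in_frames(lag_ms):.1f} frames at 60 fps")
```

Under that assumption, the 120 ms projector put you about seven frames behind the action, versus about two frames for the 33 ms one.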

The solution, of course, is to let the PC create all the frames the TV needs. Best of both worlds: minimize motion blur, minimize input lag.

That wasn't possible, but it will be.

Enter 2.1

HDMI 2.1 greatly expands the bandwidth possible over HDMI, and with it, the possible frame rates. It will be possible, in theory, to send a 4K TV a 120 Hz (i.e. 120 fps) signal. Whether your PC is capable of that resolution and frame rate is another question, but I'm sure eventually most video cards will be able to.

Will all TVs support this higher frame rate, especially when only high-end PCs will be able to send it? At the start, probably not. Just because the cable and connection can handle it doesn't mean the rest of the TV can. PC gaming is and always will be a niche market. The cost of getting all the various processing bits to work with 4K/120 is greater than $0, so manufacturers are going to be slow to implement it. But because it's in the spec, and it is possible to do, some TV company is bound to do it. When I reached out to several TV manufacturers regarding what they intend to do for HDMI 2.1, they responded "We're excited about all new advances for our customers..." Blah blah blah. So we'll see.

It's more likely we'll see Game Mode VRR.

Game Mode VRR

With one of the cooler-named TV features in recent memory, Game Mode VRR is potentially as cool as its name. It's also a bit confusing, especially since "VR" is generally understood to stand for virtual reality. This isn't that.

VRR stands for "Variable Refresh Rate." Today most TVs run at a single, fixed refresh rate: usually 60 Hz, often 120 Hz and rarely 240 Hz.

Let's concentrate on the most common: 60 Hz. This means there are 60 images shown on screen every second, or 60 fps. For the sake of simplicity, we'll say each image is different. Ideally, the TV wants its source (your computer, for example) to also be 60 fps. With normal video sources, this is easy. With your computer, it's actually a bit more challenging.

Right now, your video card takes two different steps to create an image. Well, a LOT of different steps, but let's simplify this a bit so we can discuss it in fewer than 10,000 words. The first and main step is to create the visual parts of the game world, as in all the polygons and textures of castles, buggies, spaceships, etc. The stuff you think of when you imagine what a video card is doing (i.e. "making the game").

Separate from that is the actual video image sent to your TV. The two processes are intertwined, but of paramount importance is the TV getting 60 frames every second. If it doesn't get 60 fps, it likely won't show anything.

Normally everything goes smoothly. But if the game creation part of the video card hasn't finished rendering the game world in that 60th of a second, the video output part of the card sends either a duplicate of the previous frame or a partially updated frame that still contains remnants of the previous one. This can result in the motion stuttering or the image having visible tears. The video above shows examples of both.
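Here's a simplified Python model of both failure modes on a fixed 60 Hz display. It assumes the video card renders frames back to back, that each refresh scans the screen top to bottom over the full refresh period (no blanking), and a 2,160-row screen; the function names and render times are hypothetical. With v-sync on, each refresh shows the newest finished frame, so a refresh with nothing new repeats the old one. With v-sync off, the new frame is swapped in mid-scan, and the tear appears at whatever row was being drawn.

```python
from bisect import bisect_right
from itertools import accumulate

REFRESH_HZ = 60
PERIOD_MS = 1000 / REFRESH_HZ  # about 16.7 ms between refreshes
SCREEN_ROWS = 2160             # assumed 4K panel height


def vsync_on(finish_times_ms, refreshes):
    """Index of the newest finished frame at each refresh (None if none yet).

    finish_times_ms must be sorted; a repeated index is a duplicated frame.
    """
    shown = []
    for k in range(refreshes):
        i = bisect_right(finish_times_ms, k * PERIOD_MS) - 1
        shown.append(i if i >= 0 else None)
    return shown


def vsync_off_tear_rows(finish_times_ms):
    """Screen row being scanned out at the moment each new frame is swapped in."""
    return [round((t % PERIOD_MS) / PERIOD_MS * SCREEN_ROWS) for t in finish_times_ms]


render_ms = [15, 15, 22, 15]             # hypothetical per-frame render times
finish_ms = list(accumulate(render_ms))  # when each frame finishes rendering
print(vsync_on(finish_ms, 6))
print(vsync_off_tear_rows(finish_ms))
```

In this example the 22 ms frame misses its refresh, so the previous frame is shown twice (the stutter). With v-sync off, every frame lands partway down the screen and leaves a tear instead.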

VRR helps this by letting the video card and the TV work together in determining the framerate. So instead of a rigid speed limit, it's like you and the cops determining together what the best speed is for you, your car and the road.

Nvidia's G-Sync, which is where these images come from, works much like Game Mode VRR will. Ideally (1) the video card can create an image in enough time for the TV to refresh 60 times each second. Sometimes it takes longer to render the scene (2), so the TV gets sent a duplicate of the previous frame. The image stutters and your mouse movements become inaccurate. You can disable v-sync in your video card settings, so there's less or no judder, but the image tears (3). Game Mode VRR, like G-Sync and AMD's FreeSync, lets the display and video card work together to figure out the best framerate (4).
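Step (4) can be sketched as a display that follows the video card. In this simplified Python model, the 40 to 120 Hz VRR window is a hypothetical choice for illustration (the actual limits weren't set), as are the names. The display refreshes as soon as a frame is ready, but never sooner than its maximum rate allows; if the card is slower than the minimum rate, the display redraws the previous frame.

```python
from itertools import accumulate

MIN_HZ, MAX_HZ = 40, 120    # hypothetical VRR window
MIN_GAP_MS = 1000 / MAX_HZ  # can't refresh sooner than this after the last refresh
MAX_GAP_MS = 1000 / MIN_HZ  # can't hold one image longer than this


def vrr_refreshes(finish_times_ms):
    """Return (time_ms, frame_index) for each refresh of a VRR display.

    A repeated frame_index means the card was slower than the window allows
    and the display redrew the previous frame (None before the first frame).
    """
    refreshes = []
    last_t = 0.0
    last_frame = None
    for i, ready in enumerate(finish_times_ms):
        # Card too slow for the window: redraw the old image at the longest allowed gap.
        while ready - last_t > MAX_GAP_MS:
            last_t += MAX_GAP_MS
            refreshes.append((last_t, last_frame))
        # Otherwise refresh when the frame is ready, respecting the maximum rate.
        last_t = max(ready, last_t + MIN_GAP_MS)
        refreshes.append((last_t, i))
        last_frame = i
    return refreshes


finish_ms = list(accumulate([15, 15, 22, 15]))  # hypothetical render times
print(vrr_refreshes(finish_ms))
```

With render times of 15, 15, 22 and 15 ms, every frame appears the moment it's finished and nothing is repeated, whereas a fixed 60 Hz display would have had to repeat a frame while waiting for the 22 ms one. In this model, only a frame that takes longer than 25 ms (the hypothetical 40 Hz floor) forces a repeat.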

The result is fewer video artifacts like tearing and stuttering with pans (though the latter could still be because your video card isn't fast enough for the resolution).

An additional benefit is a reduction in input lag, the delay between pressing a button and seeing that action on screen. Whether you're playing something like "Guitar Hero Live" or a first-person shooter, input lag can mess with whatever precision you have in a game. Best case, the TV spends less time waiting for images, so they appear on screen faster.

Aiding this is a feature not widely discussed, but part of the HDMI 2.1 spec: "Fast as Possible V-active" (FVA for short, though that name might change when the spec is finalized). Essentially this allows the video card to increase the clock rate on the HDMI connection to transmit the data for each frame as fast as possible when it's done rendering. Say that in one breath. To put it more simply, if each video frame is like a train, FVA has the same number of trains but allows each train to run faster on the same tracks.
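The train analogy can be put into rough numbers with a back-of-the-envelope Python sketch. It assumes a 4,096 by 2,160 frame at 24 bits per pixel and an assumed usable HDMI 2.1 rate of about 42 Gbps (out of its 48 Gbps raw link, after encoding overhead). Conventionally, a frame's data is paced out over roughly the whole refresh period; the FVA idea is to send it at full link speed once it's rendered. Function names are hypothetical, and real gains depend on the hardware.

```python
FRAME_BITS = 4096 * 2160 * 24  # one uncompressed frame at an assumed 24 bits per pixel


def conventional_transfer_ms(refresh_hz):
    """Frame data paced across (roughly) the whole refresh period."""
    return 1000 / refresh_hz


def fast_transfer_ms(link_gbps, frame_bits=FRAME_BITS):
    """FVA-style: send the finished frame as fast as the link allows."""
    return frame_bits / (link_gbps * 1e9) * 1000


print(f"60 Hz pacing:   {conventional_transfer_ms(60):.1f} ms per frame")
print(f"~42 Gbps burst: {fast_transfer_ms(42):.1f} ms per frame")
```

Under these assumptions, a frame could arrive more than 10 ms sooner at 60 Hz. That's a ceiling on the transmission part only; processing inside the card and the TV is untouched.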

I tried to get the HDMI Forum to give me a percentage of how much faster VRR and FVA might be, but they can't, of course, because it's going to vary quite a bit depending on the hardware. They did say that, implemented correctly, there'd be a "perceivable improvement" over a similar display without VRR/FVA. There will still be display- and card-specific processing that can't be avoided, but the time it takes to transmit all that data between them should be improved. This is a good thing, for sure.

The G-Sync monitor on the right avoids screen tearing in Dying Light.

I think the most likely use of this will be for TV companies that already want to market to gamers (think a better "game mode"), allowing for even lower input lag times.

If this sounds familiar, it's because it's not exactly new. Both Nvidia, with G-Sync, and AMD, with FreeSync, have offered this for several years. Those were only for computer monitors, though, and in the case of G-Sync, proprietary. BlurBusters has a great technical deep dive into G-Sync if you want to know even more.

To lightspeed and no further!

HDMI 2.1 will bring with it the possibility of even higher resolutions, like 8K and even 10K. TVs that support those resolutions are at least a decade away from stores. Computer monitors with those resolutions? That's more likely. Computer monitors almost always have the DisplayPort connector, though, which has specs and features similar to HDMI 2.1's. Enough similarity that any conversation regarding the two of them invites, shall we say, "heated and lively debate." Discussions of the pros and cons of 8K resolution at 120 fps are merely academic at this point.

But Game Mode VRR and the framerate increases with HDMI 2.1 are potentially very interesting. Sure, running most games at 4K/120 seems laughably unrealistic for most gamers at this point, but it wasn't too long ago that the same was said of 1080p/60 gaming. Since the spec isn't finalized, we don't know what the min and max framerates will be for GM VRR, though there certainly will be limits on both ends (probably 60 Hz and 120 Hz, but we shall see).

And if you don't think there's much need for 4K/120 gaming, that is exactly what the "other" VR, aka virtual reality, needs to move to the next level of realism. There's never enough resolution or framerate for VR.

Lastly, though the current consoles, namely PlayStation 4 and Xbox One, aren't nearly powerful enough to do 4K/120, all the tech advances possible with VRR and FVA are possible on consoles as well.

It will be a while before we see TVs or video cards that support 2.1, but both are certainly on the horizon and that's pretty cool.

Got a question for Geoff? First, check out all the other articles he's written on topics such as why all HDMI cables are the same, LED LCD vs. OLED, why 4K TVs aren't worth it and more.

Still have a question? Tweet at him @TechWriterGeoff then check out his travel photography on Instagram. He also thinks you should check out his sci-fi novel and its sequel.