Inventing the future of digital media at MIT (photos)

The famed MIT Media Lab is a hotbed of ideas on how life is becoming more and more digital and how people will interact with their digitized surroundings in new ways.

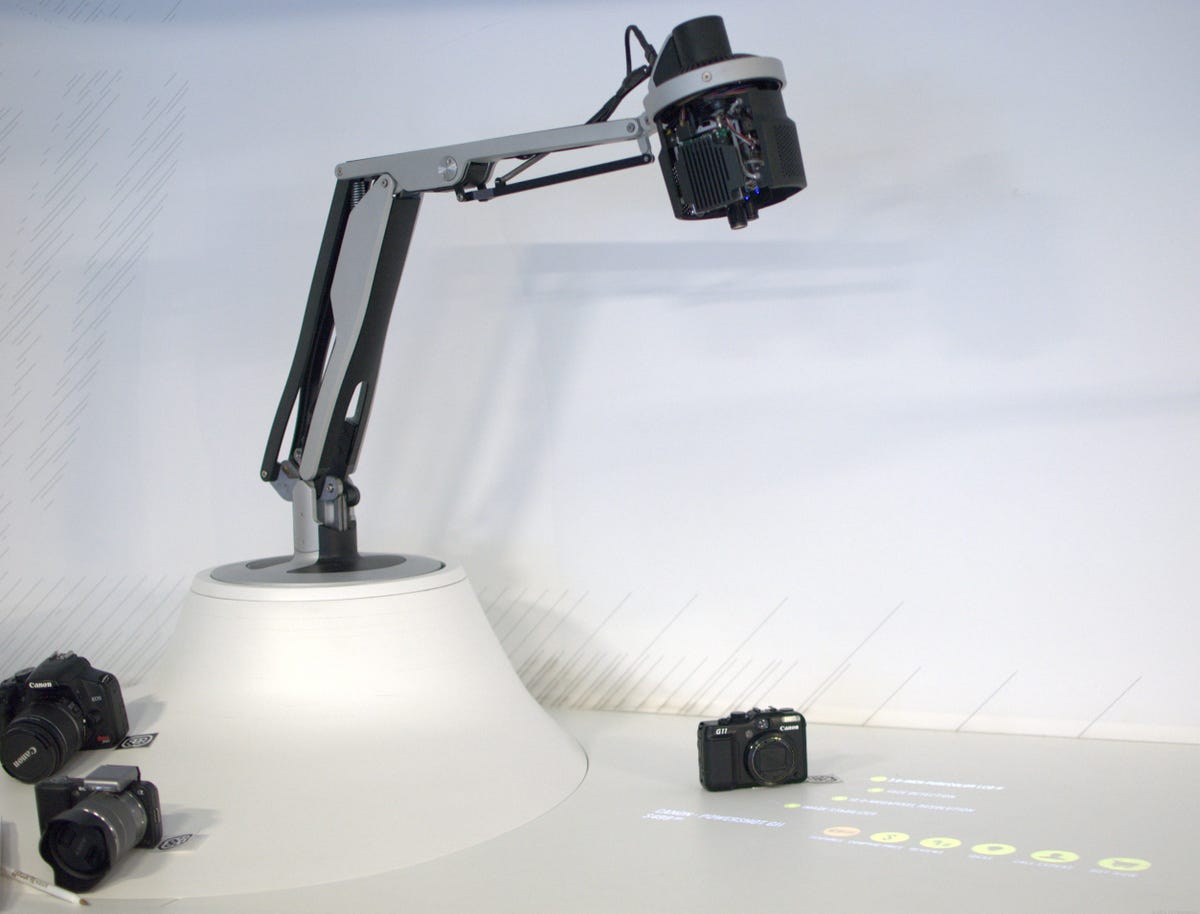

Lamp computer

What's inside the MIT Media Lab? This slideshow will offer you a sampling of the wide array of projects being pursued, from robotics to new man-machine interfaces.

The Augmented Product Counter project, seen here, is an example of how different computer interfaces open up new possibilities for augmented reality applications. Inside the lamp are a computer and a camera that detect a person's motions, allowing a person to tap a table to pull up menus and photos. In a retail application of the technology, a person can see information about different products. Because there's a camera, when a person picks up an object, the computer can display additional information, such as reviews or product comparisons.

Shape shifting

The Recompose project in the Tangible Media group at the MIT Media Lab is looking at how digital technologies allow people to manipulate objects in completely different ways. A depth camera translates a person's motions, which direct how the squares on the surface below move. It's one demonstration of how different gestural interfaces are from traditional computer interfaces and from virtual reality.

Collaborative 3D

(T)ether is another experiment in gestural interfaces. Here two people can use tablets to collaborate and manipulate three-dimensional objects, a task traditionally done by sharing CAD files on a computer. The demonstration requires a camera and special gloves to track motion.

WristQue sensor

WristQue is a prototype wristband sensor designed to let people interact with smart buildings and potentially other smart objects. With it, people can customize heating and cooling settings and control lights by moving their arms. It also includes a radio to track where a person is in a building and automatically change temperature settings.

Gestural interface

Yet another demonstration of gestural interfaces is Amphorm, which anticipates a day when scientists will be able to make objects with shape-shifting materials. A person uses gestures and an image of a vase on a tablet computer to change the vase's shape. The research showed that people have a far more emotional connection to objects when they manipulate them through gestures than with a keyboard and mouse, said MIT researcher David Lakatos.

Customizable guitar

MIT student Nan-wei Gong shows off her creation, a guitar with customizable controllers. The material she used is low-cost printed circuitry, the copper-colored material on the surface of the guitar. The covering lets people press the different buttons to control the sound effects for the guitar. It's an alternative interface to using a computer or a dedicated effects device and it gives musicians the ability to create "outfits" for different sound effects.

Smart food

Here is an example of how our existing digital devices, namely smartphones, can be used to augment our physical surroundings. In this project, people can scan a code with their smartphone to find out more about products they are shopping for. In another project, people use a smartphone to superimpose images on a thermostat to control the settings.

Seeing, autonomous vehicles

What happens when there are autonomous, self-driving vehicles on the road? How will they sense and communicate with pedestrians? The Autonomous Electric Vehicle Interaction Testing Array (AEVITA) project equips autonomous vehicles with numerous sensors, including "eyes" that react to a vehicle's surroundings and convey its state to people nearby.

Huggable robot

The Huggable Project is designing stuffed animal robots for children recovering from medical treatment. If a child is infectious or has trouble communicating with caregivers, the child can interact with the robot, which has sensors to collect information about the patient. For instance, a child can squeeze the robot's hand to tell it that he or she feels pain, which can be measured for doctors. The device uses the forward camera of a smartphone, fitted behind the eyes, to see what a child is doing.

DragonBot

DragonBot is a toy robot platform that uses a smartphone as the toy's face and the phone's Internet connection to improve its performance. This application of the platform teaches children to speak another language, with both the robot and the child using a tablet computer. When the child removes the smartphone from the robot, a picture of the toy is displayed so the child can keep playing with it.

Robo car

What about a robot companion while you're driving? The Aida project has built a robot, seen on the dashboard, that's designed to act as a companion to the driver. It will be voice-activated and will have to track the driver's actions so that it moves only at appropriate times and is not distracting.

Collaborative space

Here researchers show the movements of people in front of two separate computers on a single screen. The system uses a Kinect sensor to track people's movements and lets them draw and interact virtually with others in the same digital space.

Moveable wall

What if you could move your walls and customize your house like you customize your smartphone? The CityHome project developed a software system that involves people in the design of their home, which can be changed with this moveable wall.

Glasses-free 3D display

This early prototype shows how using multiple layers of plastic can create the effect of a three-dimensional image without the need for 3D glasses. The research group has built a full-scale prototype with multiple layers in the lab, too.