MindMeld voice and video app instantly anticipates your needs

New app from Expect Labs is designed to listen to what is discussed in a conversation and instantly deliver relevant information based on what people talk about.

SAN FRANCISCO--A new iPad app announced today aims to give users instant contextual information based on nothing more than what's being talked about during a voice conversation.

Emerging from stealth today, the eight-person San Francisco startup Expect Labs unveiled MindMeld, an app designed to interpret what its users are discussing and instantly deliver useful, shareable information about it.

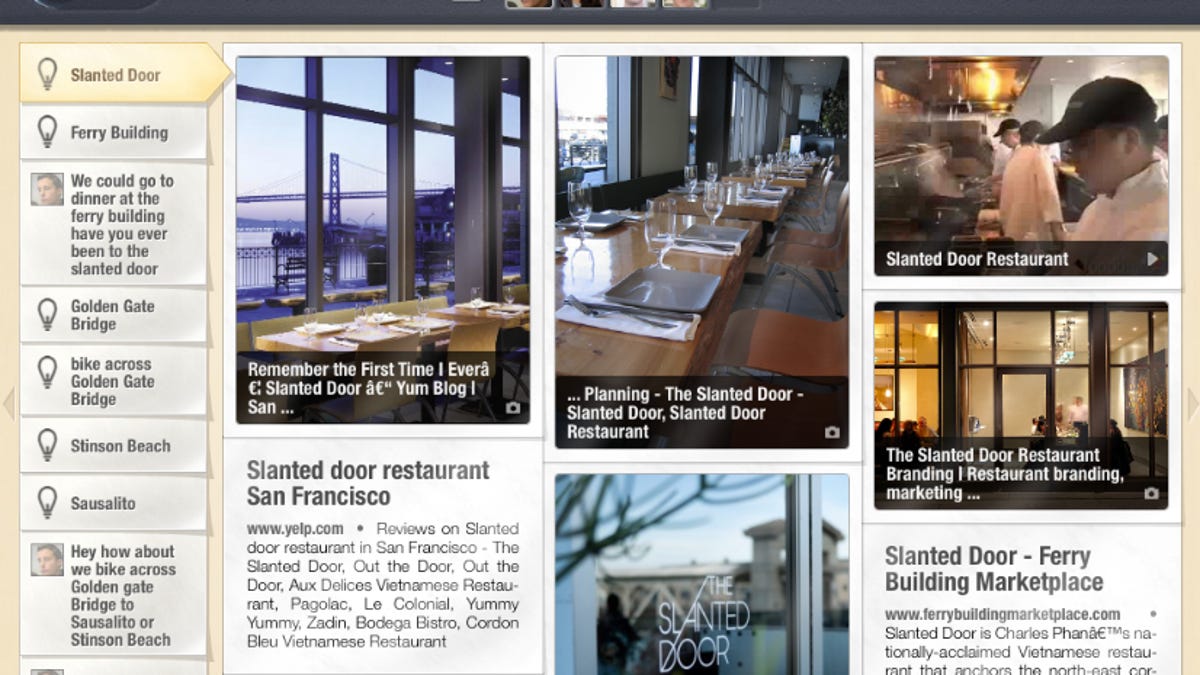

For example, explained Expect Labs CEO Tim Tuttle -- who previously built and sold video search engine Truveo to AOL -- if several people using MindMeld were talking about going out to a Blue Bottle cafe in San Francisco, the app would quickly pop up a map of the city with the location highlighted, as well as several other relevant pieces of content about the coffee shop.

Similarly (see video below), if people using the app were talking about going hiking in Muir Woods National Monument near San Francisco, several windows of data about the attraction would pop up, including a map, a brochure, hiking suggestions, and more.

MindMeld, which Expect Labs unveiled at TechCrunch Disrupt today, works by combining industry-standard voice recognition technologies -- which Expect Labs is licensing -- with the company's own search system in order to produce the most relevant results as fast as possible.

All users must be logged into Facebook, Tuttle explained, because MindMeld proactively looks for data about who's on a call in order to match information to some of their personal preferences. The app actively monitors the last 10 minutes of a voice -- or video -- conversation, following conversational signals about the users and the activity in their social graphs. If someone on the call wants information about something that was just discussed, they tap an on-screen button and MindMeld begins serving up relevant content about what was mentioned.

The idea here, Tuttle said, is that MindMeld is at the forefront of the kind of technology that will surround us everywhere in a few years and that will be always on and constantly paying attention in order to serve up information when we want it, or possibly even before we know we want it.

For now, however, it's still necessary for users to proactively tell MindMeld what they want to know. But the app is designed so that when that data is produced, it can be easily shared with everyone on a call. MindMeld was specifically built for a touch-screen device like the

Tuttle said MindMeld -- which is just the first app using technology that Expect Labs has been building over the last couple of years -- learns as people use it, getting better at delivering relevant results over time.

At some point next year, Expect Labs will release a set of APIs, hoping that developers will create all-new applications that integrate the company's technology.