Google makes it easier to record (and share) AR data on your phone

ARCore tools let you create depth maps without lidar on Android phones, and the tools may be extending to more apps.

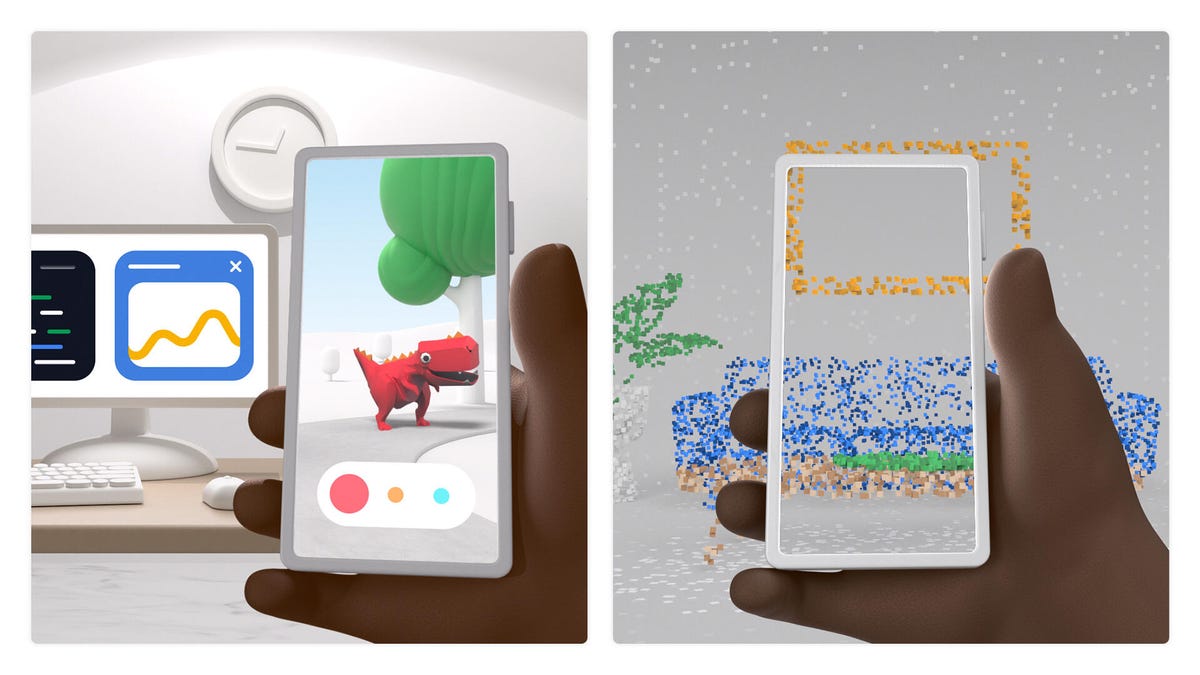

Google's new AR tools work without lidar, but with similar depth-mapping effects.

At this year's virtual Google I/O developer conference, augmented reality news hasn't necessarily taken center stage. But the company's updates to its ARCore tools on Android look like they add some of the same world-scanning capabilities that Apple's lidar provides, without needing lidar. And they do it in ways that could layer that AR into prerecorded videos.

According to Google's ARCore 1.24 announcement, the new tools can be used to share depth maps captured by a phone with ARCore. In other words, they share a rough "3D mesh" of where objects sit in a room. Judging by the partner apps Google named in its blog post, uses could include 3D scanning, or AR that places objects, like furniture, realistically in a room that's already full of things.

The more interesting (and mind-bending) part is Google's ability to record and share depth map data, which the company envisions as a way to place AR into things like prerecorded videos. If someone already had depth data for a certain room, a video of that room could use that data to place objects into the footage as effects, perhaps for social video (TikTok was listed as an example) or anywhere else.

Layering AR objects onto a depth-scanned chair using ARCore, without lidar.

According to Google, partner apps already experimenting with these features include AR Doodads (an app that layers complex Rube Goldberg contraptions into a room), LifeAR (a video calling app that uses AR to project avatars), and VoxPlop (which layers 3D effects into videos after the fact).

Google first announced its depth-sensing AR features a year ago; what's new now is that depth data can be shared between apps. "These updates leverage depth from motion, making them available on hundreds of millions of Android devices without relying on specialized sensors," Google's post explains. "Although depth sensors such as time-of-flight (ToF) sensors are not required, having them will further improve the quality of your experiences."

While Apple currently relies on a physical sensor (lidar) to build 3D meshes of rooms in AR, some companies, like Niantic, are already doing similar things without lidar. Google now looks like it's bringing more of those tools to Android, too.