Google's New AI Text-to-Video Tool Is Fun to Look At. But What Next?

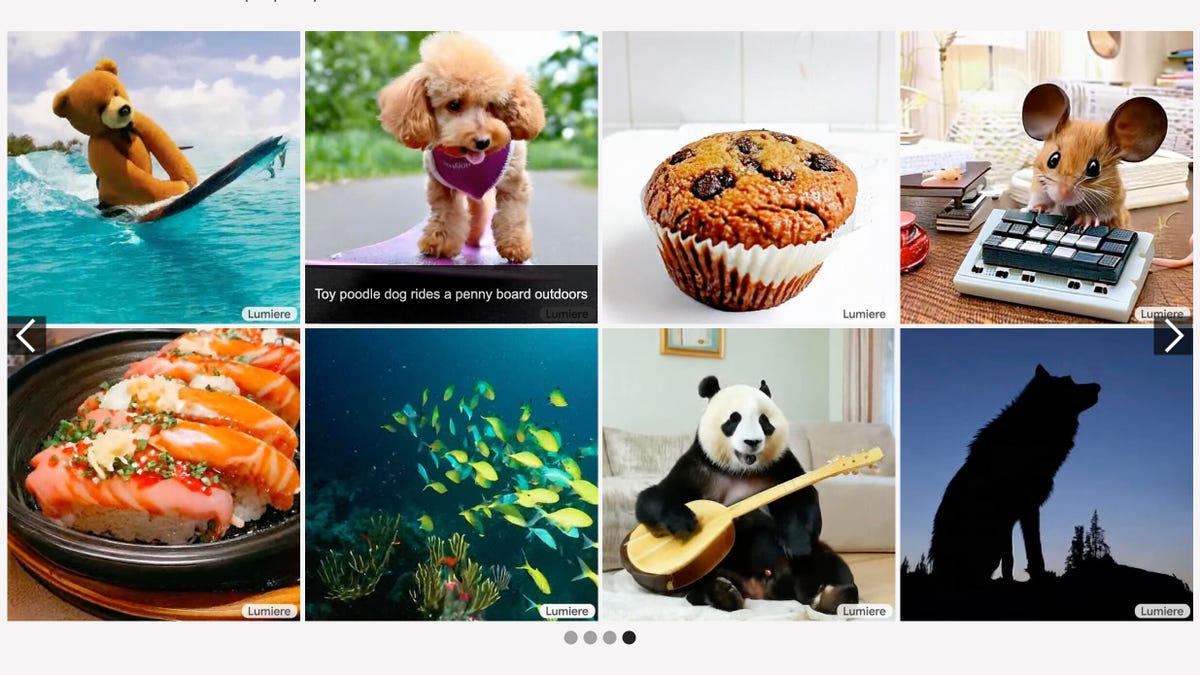

It's called Lumiere, and it can whip up moving images like "fluffy baby sloth with an orange knitted hat trying to figure out a laptop." Not too shabby.

Google has teased an AI-based video generation tool, but it's not clear when — or if — anyone outside the search giant will be able to kick the tires. It's certainly fun to look at, though.

On Wednesday, Google Research released a video highlighting this new text-to-video model, which is called Lumiere.

In a LinkedIn post, team leader Inbar Mosseri said the tool "generates coherent, high-quality videos using simple text prompts." According to New Atlas, the clips run up to five seconds. Sample inputs include "A fluffy baby sloth with an orange knitted hat trying to figure out a laptop" and "An escaped panda eating popcorn in the park."

In the year or so that generative AI has been the hottest technology going, much of the attention has been focused on tools like ChatGPT that produce text answers to prompts, or those like Dall-E that create still images. Video creation from text prompts is arguably the next frontier, so if Lumiere really can "demonstrate state-of-the-art text-to-video generation results" as Google says, we may already be evolving beyond the "grotesque abominations" of the AI-generated images of 2023.

As the video illustrates, Lumiere's capabilities include text-to-video and image-to-video generation, as well as stylized generation — that is, using an image to create videos in a similar style. Other tricks include the ability to fill in any missing visuals within a video clip.

That includes the ability to animate famous paintings, like Van Gogh's Starry Night ("A timelapse oil painting of a starry night with clouds moving") or Da Vinci's Mona Lisa ("A woman looking tired and yawning"). While the Starry Night example works almost flawlessly, Mona Lisa looks far more like she's laughing than yawning.

And while many of the animals, such as "a muskox grazing on beautiful wildflowers" and "a happy elephant wearing a birthday hat walking under the sea," look realistic, there's something off about some of the dogs. Both a toy poodle riding a skateboard and a golden retriever puppy running in the park are close to passing as real, but their faces, and perhaps their eyes specifically, betray the fact that they're CGI.

Nevertheless, the video editing tools hold a lot of promise. Using a source video and prompts like "made of colorful toy bricks" or "made of flowers," users can purportedly change the style of the subject completely. And with inputs like "wearing a bathrobe," "wearing a party hat" and "wearing rain boots" to add said items to an image of, say, a baby chick, Lumiere may very well make fiddling with videos more accessible to those of us who didn't major in graphic design.

Though the assets shared so far certainly make Lumiere seem like it's user-friendly, the description of how it works isn't. (Google didn't respond to a request for additional comment.)

A project page describes Lumiere as "a space-time diffusion model," which sounds like something Doc Brown was working on in Back to the Future. Google Research said this means the model learns to generate a video by processing it in multiple space-time scales, which helps create videos that "portray realistic, diverse and coherent motion."

According to Google, this is superior to existing models, which "synthesize distant keyframes followed by temporal super-resolution."

Jason Alan Snyder, global chief technology officer at ad agency Momentum Worldwide, explained it this way: "It's like the difference between watching a puppet show and experiencing a ballet at Lincoln Center."

That's because Lumiere "doesn't just focus on snapshots, it crafts smooth, flowing motion for every frame," he added.

In other words, the existing approach is a bit like making a movie by building the key scenes first and filling in the gaps later.

"Lumiere is different. It sees the whole movie in its mind, understanding how characters move, objects interact and everything changes over time," Snyder said. "It's like drawing the entire flip book simultaneously, ensuring every page flows perfectly."

So this "space-time thinking" helps Lumiere create videos that feel real, which, he added, means no more jumpy transitions or robotic movements. (Except maybe for puppy eyes.)
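For readers who want to see the contrast in miniature, here is a toy Python sketch. It is not Lumiere's code and involves no diffusion model. It assumes a "video" in which each frame is boiled down to a single number (the height of a bouncing ball), and the function names `keyframes_then_interpolate` and `temporal_scales` are made up for this illustration. The first function mimics the keyframes-then-fill-in approach; the second simply looks at the whole clip at several time scales, leaving out the spatial half of "space-time" for brevity.

```python
# Toy illustration only: not Google's implementation, and no diffusion model.
# Each "frame" is one number, a stand-in for a full image.

def keyframes_then_interpolate(frames, stride):
    """Keep every `stride`-th frame, then fill the gaps by linear interpolation."""
    keys = frames[::stride]
    out = []
    for a, b in zip(keys, keys[1:]):
        for i in range(stride):
            w = i / stride
            out.append((1 - w) * a + w * b)
    out.append(keys[-1])
    return out


def temporal_scales(frames, levels):
    """Return the whole clip at several time scales by averaging frame pairs."""
    scales = [list(frames)]
    for _ in range(levels):
        prev = scales[-1]
        coarser = [(prev[i] + prev[i + 1]) / 2 for i in range(0, len(prev) - 1, 2)]
        scales.append(coarser)
    return scales


# Height of a bouncing ball over nine frames.
clip = [0, 1, 0, -1, 0, 1, 0, -1, 0]

# Keyframes land on frames 0, 4 and 8, which are all zero, so the
# interpolated clip is flat and the bounce disappears entirely.
print(keyframes_then_interpolate(clip, 4))

# Every frame contributes to every scale; the bounce is still visible
# at full rate and at half rate.
for scale in temporal_scales(clip[:8], 2):
    print(scale)
```

In this toy, the keyframe route throws away everything that happens between the sampled frames, while the multi-scale view keeps the whole clip in play. That says nothing about how well the real model handles motion.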

Time will tell.

In the meantime, as fans of Beauty and the Beast will know, Lumiere is French for "light."

Editors' note: CNET is using an AI engine to help create some stories. For more, see this post.