Google working to fix AI bias problems

Just because artificial intelligence training data might show that doctors are often male, doesn't mean machine learning should assume that's the case.

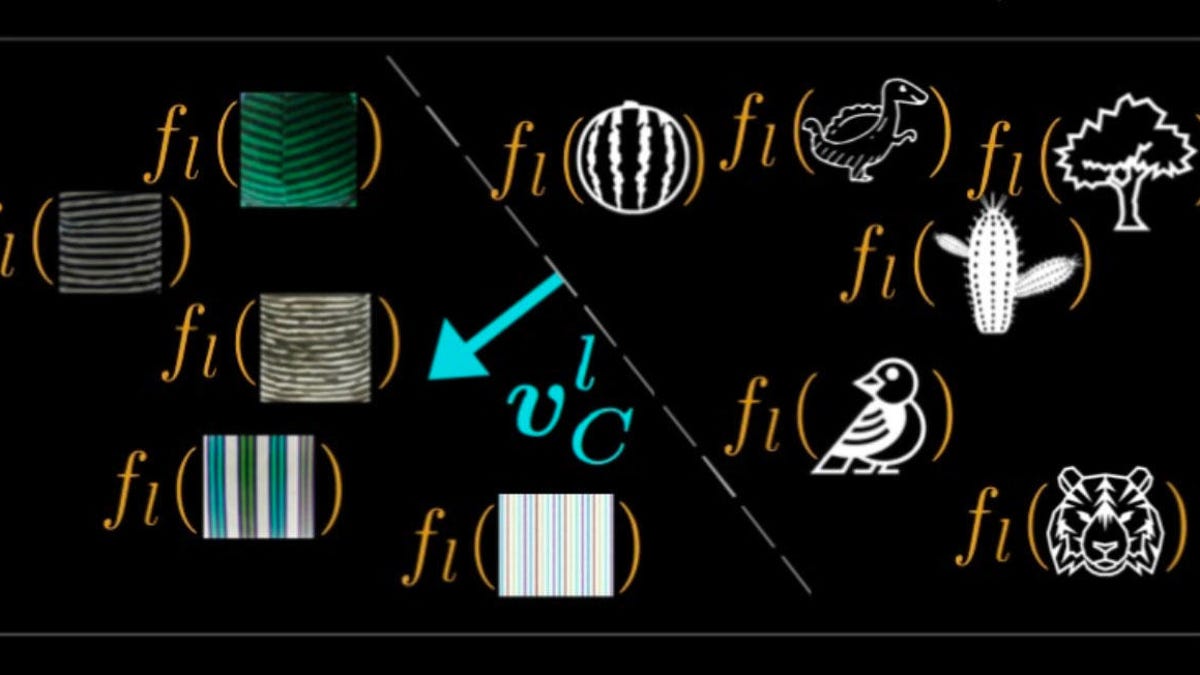

Google researchers are working on a technology called concept activation vectors that makes it easier to see the choices an AI algorithm is making by expressing them in higher-level, human-friendly terms.

Google is trying to keep AI bias at bay.

At the company's Google I/O conference Tuesday, Chief Executive Sundar Pichai described research to gain insight into how Google's artificial intelligence algorithms work and make sure they don't "reinforce bias that exists in the world."

Specifically, he described a technology called TCAV (testing with concept activation vectors) that's designed, for example, to keep an AI model from assuming a doctor is male even if its training data indicates that's more likely.

Google technology called TCAV is designed to keep AI from reinforcing societal biases.

"It's not enough to know that an AI model works. We have to know how it works," Pichai said. "Bias has been a concern in science long before machine learning came along. The stakes are clearly higher in AI."

Concept activation vectors make it easier to see the choices an AI algorithm is making by expressing them in higher-level, human-friendly terms, not just low-level characteristics like pixel-level structures in photos.
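For readers who want a feel for the mechanics, here is a minimal Python sketch of the idea. It is a toy illustration under stated assumptions, not Google's implementation: the activations, the `toy_logit` function and all helper names are hypothetical, and the concept direction is taken as a simple difference of means, a stand-in for the linear classifier the TCAV research trains to separate concept examples from random ones. The score is the fraction of inputs whose class output rises when their internal activations are nudged toward the concept.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]

def concept_activation_vector(concept_acts, random_acts):
    """Unit vector pointing from random activations toward concept activations.

    Simplification: difference of means instead of a trained linear classifier.
    """
    mc = mean_vector(concept_acts)
    mr = mean_vector(random_acts)
    diff = [c - r for c, r in zip(mc, mr)]
    norm = math.sqrt(sum(d * d for d in diff))
    return [d / norm for d in diff]

def directional_derivative(logit_fn, act, direction, eps=1e-4):
    """Finite-difference estimate of how the logit changes along `direction`."""
    shifted = [a + eps * d for a, d in zip(act, direction)]
    return (logit_fn(shifted) - logit_fn(act)) / eps

def tcav_score(logit_fn, class_acts, cav):
    """Fraction of inputs whose logit increases when moved toward the concept."""
    positive = sum(
        1 for a in class_acts if directional_derivative(logit_fn, a, cav) > 0
    )
    return positive / len(class_acts)

# Hypothetical layer activations (two hidden units) for illustration only.
concept_acts = [[2.0, 0.1], [1.8, -0.2], [2.2, 0.0]]   # examples showing the concept
random_acts = [[0.0, 0.1], [-0.1, -0.1], [0.1, 0.0]]   # random counterexamples

def toy_logit(act):
    # Hypothetical class logit: mostly driven by the first hidden unit.
    return math.tanh(act[0]) + 0.1 * act[1]

# Activations of inputs belonging to the class under study (also made up).
class_acts = [[0.5, 1.0], [1.0, -1.0], [-0.5, 0.3], [0.2, 0.0]]

cav = concept_activation_vector(concept_acts, random_acts)
score = tcav_score(toy_logit, class_acts, cav)
print(f"TCAV-style score: {score:.2f}")
```

A score near 1 suggests the class prediction is sensitive to the concept, while a score near 0 suggests the concept pushes the prediction the other way. In practice the result would be compared against scores for random directions before drawing conclusions.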

In one research paper about concept activation vectors, Google researchers showed the technology could identify medical concepts that were relevant to predicting an eye problem called diabetic retinopathy. And it could reveal what's going on inside the mind of the AI, so to speak, so humans could oversee it better. "TCAV may be useful for helping experts interpret and fix model errors when they disagree with model predictions," the researchers concluded.

Google I/O 2019

Originally published May 7, 11 a.m. PT.

Update, 5:57 p.m.: Adds further detail about TCAV research.