Google's AI chips now can work together for faster learning

TPUs linked into "pods" let Google Cloud customers train their artificial intelligence systems faster.

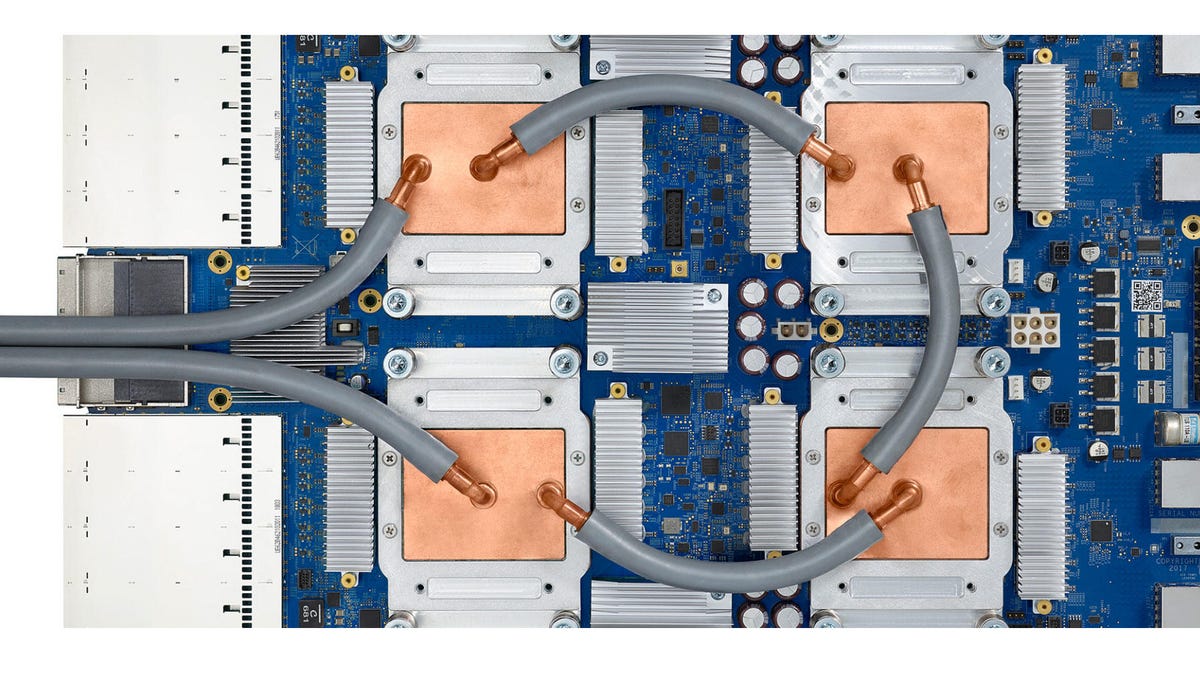

Google's TPU v3 (tensor processing unit) chips are liquid-cooled for maximum performance.

If you're feeling like Google's data centers are holding back your AI abilities, the company now lets you gang together lots of its tensor processing unit (TPU) chips for better performance.

The Google Cloud service now offers TPUs linked together into "pods," the company announced at its Google I/O conference Tuesday. The speedup matters chiefly during the "training" phase, when artificial intelligence systems learn how to spot patterns in real-world data.

The larger systems speed up training so customers can either build more-sophisticated models or update models more frequently with new training data.

As the processor progress charted by Moore's Law has faltered, many companies are scrambling to build AI chips or augment their existing chips with AI abilities. That includes tech giants such as Google and Apple, incumbent chip powers trying to stay current like Nvidia, Intel and Qualcomm, startups like Wave Computing and Flex Logix, and other players like Tesla, which has begun building Model S, X and 3 cars with its own AI processor designed for full self-driving abilities.

Google I/O 2019

Google uses AI extensively and touted several new uses for the technology at Google I/O. However, like Apple and Facebook, it's trying to push more AI processing off its servers and into devices like phones and home hubs. That lightens the load on its own servers while helping protect users' privacy.

AI tasks like recognizing faces and speech can happen on those small devices. But training the underlying models requires massive computing systems like those at Google and its bigger cloud-computing rivals, Amazon and Microsoft.