DeepMind's AI can now crush almost every human player in StarCraft 2

AlphaStar is practically unstoppable.

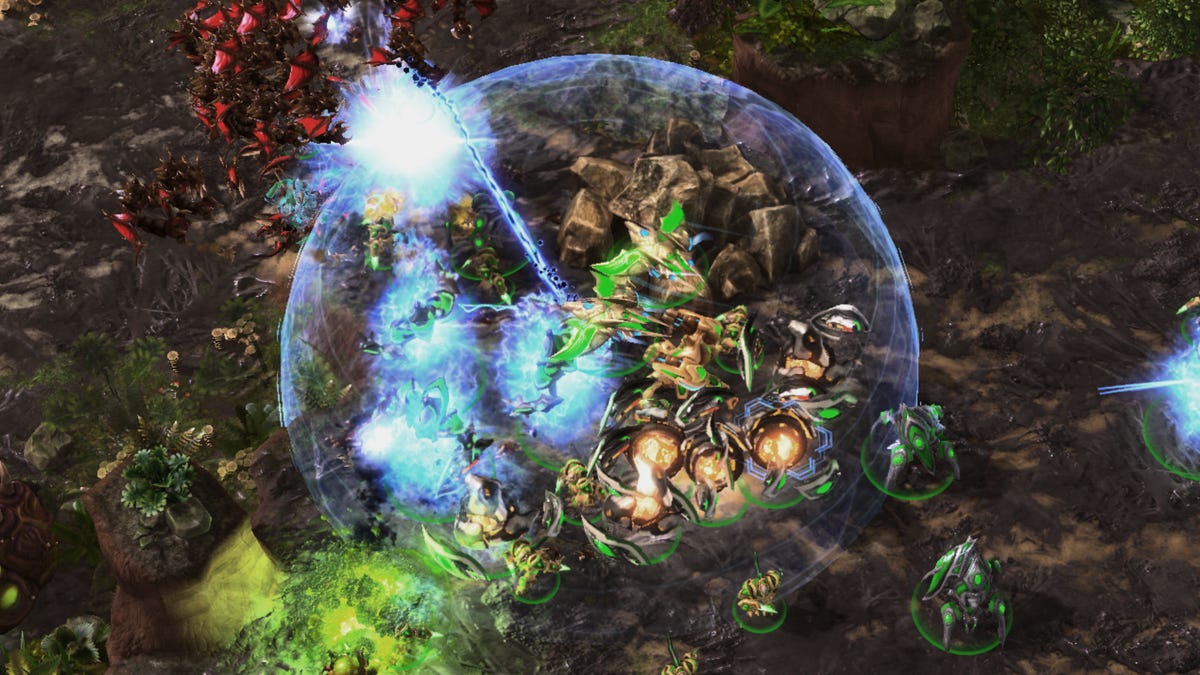

AlphaStar, playing as the Protoss race, just cleaning house.

DeepMind's artificial intelligence platforms have become legendary for their ability to master complex games like chess, shogi and Go, crushing our puny human brains with advanced machine learning techniques. Earlier this year, a new version of the AI, built for the real-time strategy game StarCraft II and dubbed AlphaStar, was unveiled. It carried on DeepMind's tradition of putting humans to shame, trampling some of the top human StarCraft II players in the world.

On Wednesday, the DeepMind team published a new study of AlphaStar in the journal Nature, detailing just how far AlphaStar has come. And folks, it's bad news for any up-and-coming StarCraft II stars: The AI is now rated at Grandmaster level, which places it above 99.8% of officially ranked human players.

Why would researchers build an AI for a niche video game title and what can it teach us about artificial intelligence and machine learning? Well, let's unpack that.

StarCraft II is a real-time strategy game where players take control of one of three teams (or "races", in the game's parlance). Each race has unique properties, and players must control hundreds of units in their vast armies across a huge map while managing the resources needed to build those units, attack their enemies and defend their bases. At the top levels of play, there are countless strategies and counter-strategies that human players use to win. It's like an incredibly complicated game of rock-paper-scissors.

The game's complexity and depth make it an important challenge for AI. Unlike in chess or Go, StarCraft II players can't see everything their opponent is doing. Those board games also give you time to pause and consider strategy, but StarCraft II is "real-time": once the game starts, only victory or defeat can stop the clock.

DeepMind put AlphaStar through a fairly simple training regime. First, it watched almost a million replays of the top human players to learn their strategies and imitate their actions. It was then pitted against other AlphaStar agents to learn which strategies work best, using a technique known as "reinforcement learning", which trains the AI by rewarding it for winning and penalizing it for losing.
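For readers curious what that two-step recipe looks like in code, here is a deliberately tiny sketch. It is not DeepMind's code: AlphaStar relied on deep neural networks and a league of competing agents, while this toy swaps StarCraft II for rock-paper-scissors and uses a textbook policy-gradient method (REINFORCE) against a single fixed opponent. Every name and number here (`imitation_phase`, `reinforcement_phase`, the made-up replay counts and the rock-heavy opponent) is hypothetical. Phase one copies the move frequencies seen in the "replays"; phase two plays games and nudges the policy toward moves that win (+1) and away from moves that lose (-1).

```python
import math
import random

# Toy stand-in for StarCraft II: rock-paper-scissors (hypothetical setup).
ACTIONS = ["rock", "paper", "scissors"]
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}  # key beats value


def reward(mine, theirs):
    """+1 for a win, -1 for a loss, 0 for a draw."""
    if BEATS[mine] == theirs:
        return 1.0
    if BEATS[theirs] == mine:
        return -1.0
    return 0.0


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def imitation_phase(replays):
    """Phase 1, imitation: set each move's score to the log of how often it
    appears in the (hypothetical) replays, so the policy copies those habits."""
    counts = [1 + replays.count(a) for a in ACTIONS]  # +1 avoids log(0)
    return [math.log(c) for c in counts]


def reinforcement_phase(logits, opponent, episodes=2000, lr=0.05, seed=0):
    """Phase 2, reinforcement learning (REINFORCE): play games and push scores
    toward moves that won and away from moves that lost."""
    rng = random.Random(seed)
    logits = list(logits)
    for _ in range(episodes):
        probs = softmax(logits)
        i = rng.choices(range(len(ACTIONS)), weights=probs)[0]
        r = reward(ACTIONS[i], opponent(rng))
        # Gradient of log pi(i) with respect to logit j is 1[j == i] - probs[j].
        for j in range(len(logits)):
            logits[j] += lr * r * ((1.0 if j == i else 0.0) - probs[j])
    return logits


if __name__ == "__main__":
    replays = ["rock"] * 60 + ["paper"] * 30 + ["scissors"] * 10
    policy = imitation_phase(replays)

    # Hypothetical opponent that over-plays rock, so paper should pay off.
    def rock_heavy(rng):
        return rng.choices(ACTIONS, weights=[0.7, 0.2, 0.1])[0]

    trained = reinforcement_phase(policy, rock_heavy)
    print("after imitation:", [round(p, 2) for p in softmax(policy)])
    print("after RL:       ", [round(p, 2) for p in softmax(trained)])
```

Against an opponent that leans on rock, the policy should drift away from the replays' rock-heavy habits and toward paper: the same "winning is good, losing is bad" signal, at a vastly smaller scale.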

Not being human, AlphaStar has a number of advantages over Homo sapiens. For one, it doesn't have an annoying physical body that limits its capabilities and constrains how fast it can react. To level the playing field, the DeepMind team deliberately hindered AlphaStar, building in delays to account for computation time and latency and capping how many actions it can perform per minute.
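To make the idea of capping actions per minute concrete, here is a minimal sketch of one way such a limit could work: a sliding-window throttle plus a fixed reaction delay. It is an illustration under assumptions, not DeepMind's actual mechanism, whose details this article doesn't describe. The class name `ActionLimiter` and the numbers (300 actions per minute, a 0.2-second delay) are hypothetical.

```python
from collections import deque


class ActionLimiter:
    """Toy throttle (hypothetical): accept at most `max_actions` decisions in
    any sliding window of `window_s` seconds, and execute each accepted action
    `delay_s` seconds after the agent chose it."""

    def __init__(self, max_actions, window_s=60.0, delay_s=0.2):
        self.max_actions = max_actions
        self.window_s = window_s
        self.delay_s = delay_s
        self._recent = deque()  # times of accepted decisions, oldest first

    def request(self, t):
        """Return when the action chosen at time `t` executes, or None if it
        is dropped because the window is already full."""
        # Forget decisions that have slid out of the window.
        while self._recent and t - self._recent[0] >= self.window_s:
            self._recent.popleft()
        if len(self._recent) >= self.max_actions:
            return None
        self._recent.append(t)
        return t + self.delay_s


if __name__ == "__main__":
    # Hypothetical cap of 300 actions per minute and a 0.2 s reaction delay.
    limiter = ActionLimiter(max_actions=300, window_s=60.0, delay_s=0.2)
    # An agent that tries to act every 0.1 s (600 attempts in one minute).
    results = [limiter.request(i / 10) for i in range(600)]
    accepted = sum(r is not None for r in results)
    print(accepted, "of", len(results), "actions allowed")
```

A sliding window, rather than a counter that resets at the top of each minute, stops an agent from squeezing two minutes' worth of actions into a few seconds around the reset. It still allows one minute's quota to be spent in a single burst, which is why a real system might also use shorter windows.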

Even with these "humanizing" limits, AlphaStar's most advanced version, AlphaStar Final, still rolled the opponents it encountered online.

"I've found AlphaStar's gameplay incredibly impressive," said Dario "TLO" Wünsch, a German StarCraft II pro, in a statement. "The system is very skilled at assessing its strategic position, and knows exactly when to engage or disengage with its opponent."

Destroying human opponents in a video game might sound like a recipe for a scary doomsday scenario, but the DeepMind team is developing AI like AlphaStar to improve real-world systems. For researchers, mastering a complex real-time strategy game like StarCraft II is one of the first steps toward building better, safer AI for applications like self-driving cars and robotics.