Twitter: Racist tweets after Euros final didn't rely on anonymity

The torrent of abuse aimed at England's footballers last month came almost exclusively from people who weren't attempting to hide their identities.

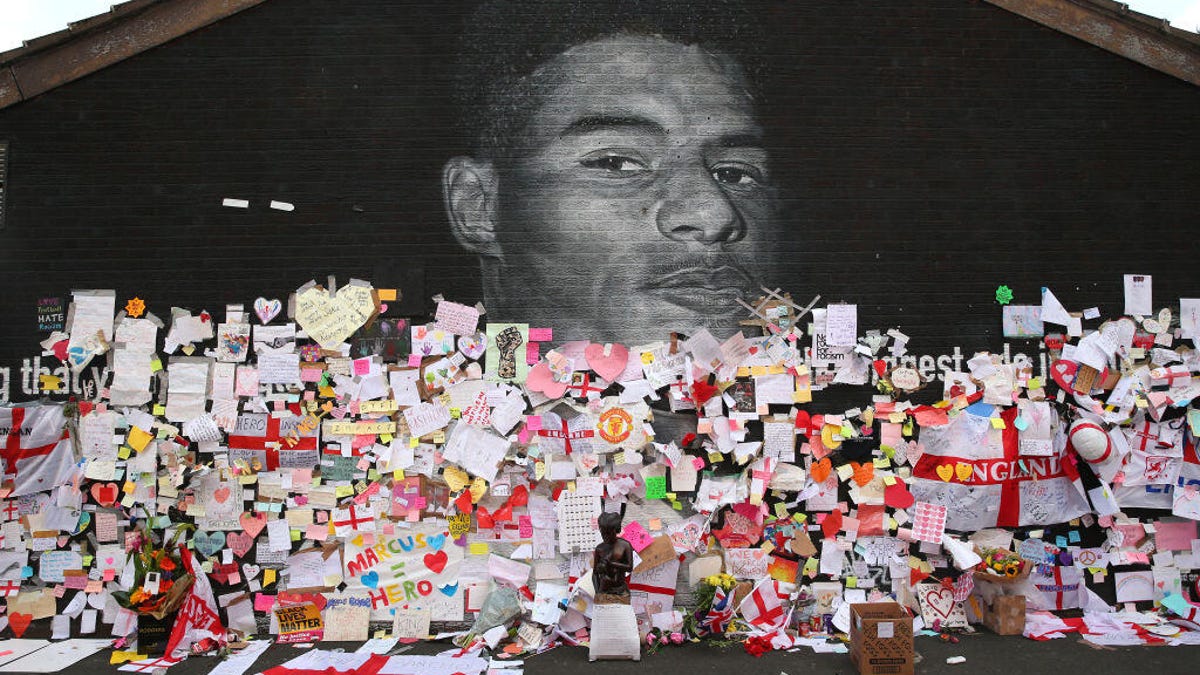

A mural of footballer Marcus Rashford is covered in messages of support by fans after it was defaced by racist vandals.

If you've never been a victim of online abuse, it would be easy to assume that the perpetrators hide behind anonymous avatars and usernames that obscure their real identities. But that's not the case.

Twitter revealed in a blog post Tuesday that when England's footballers were targeted with racist abuse last month after they lost the Euro 2020 final, 99% of the accounts it suspended were not anonymous.

The torrent of racist abuse aimed at three Black members of the England squad appeared on Twitter and Instagram in the hours following the match. It led commentators including Piers Morgan to demand that social media platforms prevent people from creating anonymous accounts in order to discourage them from posting racist comments.

The idea that anonymity is a primary factor in enabling perpetrators of abuse isn't new, and in the UK there's even been debate about whether to include a ban on anonymous online accounts in the upcoming Online Safety Bill. But the argument for social media sites to perform mandatory ID checks rests on the fallacy that if people can be held accountable for their actions, they won't be racist.

The evidence Twitter provided on Tuesday validates what people of color have already been saying: that people will be racist regardless of whether or not an anonymous account shields them from the consequences. "Our data suggests that ID verification would have been unlikely to prevent the abuse from happening -- as the accounts we suspended themselves were not anonymous," said the company in a blog post.

Instagram didn't immediately respond to a request for data on the accounts or comments it deleted for directing abuse at England's footballers.

Also included in Twitter's data was evidence that while abuse came from all over the world, the UK was by far the biggest country of origin for the abusive tweets. Twitter added that the majority of discussion of British football on the platform didn't involve racist behavior, and that the word "proud" was tweeted more frequently on the day following the final than on any other day this year.

For Twitter and other social media giants, putting tools in place to prevent racist abuse is an ongoing challenge. On Tuesday, Twitter said it would soon be trialing a new product feature that temporarily autoblocks accounts using harmful language. It's also going to continue rolling out reply prompts, which encourage people to rethink what they're tweeting if it looks like their language might be harmful. In more than a third of cases, the prompts led people to rewrite their tweet or not send it at all, according to the company.

"As long as racism exists offline, we will continue to see people try and bring these views online -- it is a scourge technology cannot solve alone," said Twitter in the blog post. "Everyone has a role to play -- including the government and the football authorities -- and we will continue to call for a collective approach to combat this deep societal issue."