Top500 supercomputer rankings await speed-test shakeup

The latest list of most-powerful computers is a bit of same old, same old. But soon, it won't be so easy to get ahead on the Top500 list by plugging in special-purpose accelerator cards.

Monday's update to the list of the 500 fastest supercomputers in the world showed only a single new system in the top 10. But bigger changes are afoot, because list organizers have released an entirely new speed test to gauge the systems.

For two decades, a group of academic researchers has used the Linpack benchmark to gauge performance on the Top500 list. On Monday, the Top500 organizers released a new test called High Performance Conjugate Gradient (HPCG) that's designed to better predict a supercomputer's real-world utility.

One change coming with the new benchmark: It's likely to throw some cold water on a recent trend, the addition of special-purpose "accelerator" processors such as graphics chips that are good for only a subset of supercomputing chores.

The HPCG test has entered beta testing and will be adjusted in the coming years, said Jack Dongarra, a University of Tennessee researcher, the author of Linpack, and one of the organizers of the Top500 list. Linpack won't be removed, but it'll have to share the limelight with HPCG, he said.

"It will be a while before we have enough data to populate the Top500 list, but that is the intention," Dongarra said. "Keep in mind that the Linpack Benchmark took many years before it was widely accepted. We hope this new benchmark reflects modern applications better than Linpack."

But for now, Linpack rules the roost, and by its measurements, the top five computers on the new Top500 list, announced Monday, are unchanged from June's list:

- The Tianhe-2 machine at the National Super Computer Center in Guangzhou, China, with a performance of 33.9 quadrillion flops, or 33.9 petaflops.

- Titan, a Cray machine at Oak Ridge National Laboratory at 17.6 petaflops.

- Sequoia, an IBM machine at Lawrence Livermore National Laboratory, at 17.2 petaflops.

- The K Computer, a Fujitsu machine at the RIKEN Advanced Institute for Computational Science in Japan, at 10.5 petaflops.

- Mira, an IBM machine at Argonne National Laboratory, at 8.5 petaflops.

Next came the only new entrant in the top 10: Piz Daint, a Cray machine at the Swiss National Supercomputing Center.

Years -- the time frame cited by Dongarra -- might sound like a long haul to move to a new benchmark, but it takes time to convince a broad community to change. And HPCG has been under way for a very long time: Dongarra called for a new supercomputing benchmark way back in 2004.

What was wrong with Linpack? It was too limited. It was good for measuring some types of mathematical calculations, but a high Linpack score wouldn't accurately predict good performance on others. Among them are computing jobs that need to fetch a lot of data from memory and access memory irregularly, Dongarra and a colleague, Sandia National Laboratories' Michael Heroux, said in a June paper. Linpack also puts too much emphasis on math using "floating point" numbers at the expense of calculations based on integers. Indeed, Linpack's score is measured in floating-point operations per second, or flops.
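HPCG takes its name from the conjugate gradient method, an iterative way of solving large systems of equations whose matrices are mostly zeros. A minimal Python sketch of the basic, unpreconditioned method gives a feel for the irregular memory access described above. It is not the HPCG reference code, and the function names and the small tridiagonal test matrix are illustrative assumptions.

```python
# Illustrative sketch only: plain conjugate gradient on a sparse matrix
# stored in compressed sparse row (CSR) form. Not the HPCG reference code.

def csr_matvec(values, col_idx, row_ptr, x):
    """Return y = A @ x for a CSR matrix A."""
    y = [0.0] * (len(row_ptr) - 1)
    for row in range(len(y)):
        total = 0.0
        for k in range(row_ptr[row], row_ptr[row + 1]):
            # x is read at positions dictated by col_idx: an indirect,
            # data-dependent memory access rather than a streaming one.
            total += values[k] * x[col_idx[k]]
        y[row] = total
    return y


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def conjugate_gradient(values, col_idx, row_ptr, b, tol=1e-10, max_iter=1000):
    """Solve A x = b for a symmetric positive-definite CSR matrix A."""
    x = [0.0] * len(b)
    r = list(b)          # residual b - A x, with x starting at zero
    p = list(r)          # first search direction
    rs_old = dot(r, r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:
            break
        ap = csr_matvec(values, col_idx, row_ptr, p)
        alpha = rs_old / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


def laplacian_1d(n):
    """Hypothetical test matrix: tridiagonal [-1, 2, -1] in CSR form."""
    values, col_idx, row_ptr = [], [], [0]
    for i in range(n):
        for j, v in ((i - 1, -1.0), (i, 2.0), (i + 1, -1.0)):
            if 0 <= j < n:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


if __name__ == "__main__":
    values, col_idx, row_ptr = laplacian_1d(5)
    b = csr_matvec(values, col_idx, row_ptr, [1.0] * 5)
    x = conjugate_gradient(values, col_idx, row_ptr, b)
    print(x)  # entries should be close to 1.0
```

Each step performs only a couple of floating-point operations per value fetched from memory, so a loop like this tends to be limited by memory speed rather than by raw arithmetic throughput.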

One specific problem is that "accelerators" -- special-purpose add-in processors including Nvidia and Advanced Micro Devices graphics processing units (GPUs) and Intel Xeon Phi chips -- boost Linpack scores a lot but aren't always practical. As Dongarra and Heroux said:

"For example, the Titan system at Oak Ridge National Laboratory has 18,688 nodes, each with a 16-core, 32GB AMD Opteron processor and a 6GB Nvidia K20 GPU. Titan was the top-ranked system in November 2012 using HPL [Linpack]. However, in obtaining the HPL result on Titan, the Opteron processors played only a supporting role in the result. All floating-point computation and all data were resident on the GPUs. In contrast, real applications, when initially ported to Titan, will typically run solely on the CPUs and selectively offload computations to the GPU for acceleration."

Accelerators do help in some circumstances. And meanwhile, researchers are adapting their supercomputing software to take advantage of these accelerators, and software tools are helping them.

The supercomputing work is spilling out into other markets, too. IBM announced Monday a partnership with Nvidia to let that company's graphics chips boost IBM business software running on servers with its Power8 processors.

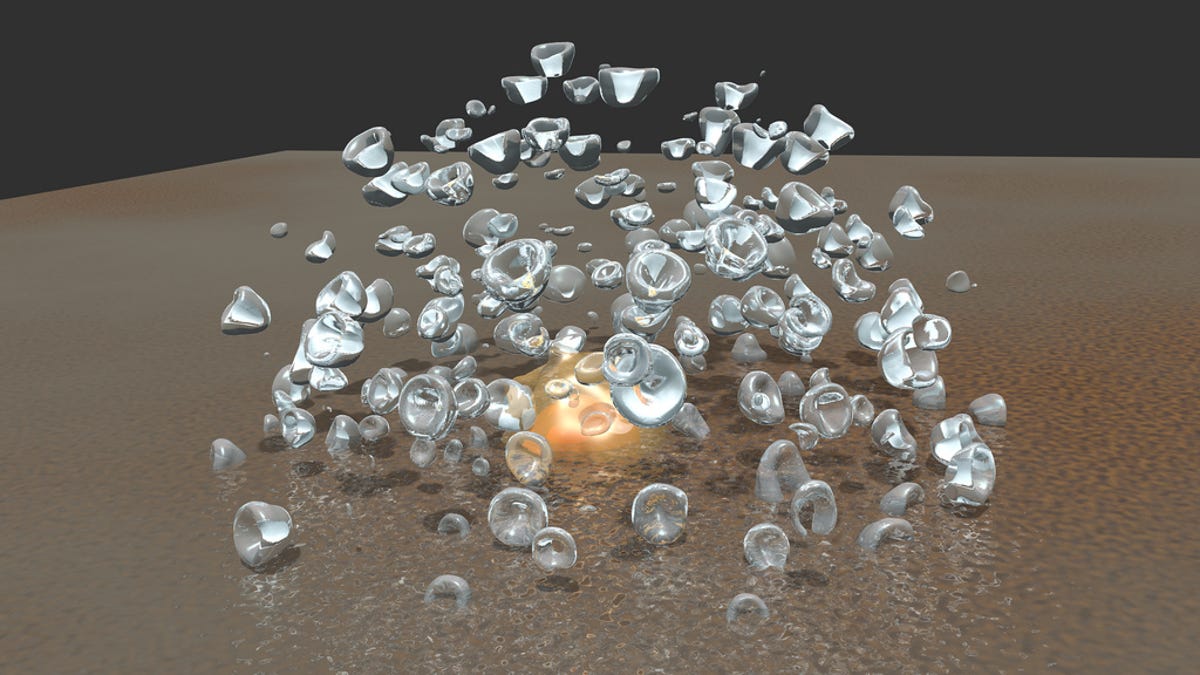

One example of what these machines are good for: understanding the basic behavior of physical materials. Also in conjunction with the supercomputing show, IBM highlighted research results from the Sequoia machine involving a process called cavitation that can occur when shock waves travel through liquids.

With a fluid dynamics simulation, Sequoia modeled a liquid divided into a fine three-dimensional grid of 13 trillion cells. The simulation produced 15,000 bubbles through the simulated cavitation process. The collapse of such bubbles can form damaging new shock waves, a subject of interest in fluid dynamics situations such as submarine propellers and liquids in pipes.

Sequoia is a Blue Gene/Q system from IBM that doesn't use accelerators. But 53 of the systems on the Top500 list do. Nvidia led the pack, with its chips in 38 of those systems; 13 used Intel's Xeon Phi; and two used ATI accelerators.

Though the top machines didn't change much, there was plenty of flux further down the list. Last June, the 500th system had a performance of 96.6 teraflops. In the November list, the bottom system showed a score of 117.8 teraflops. In all, 136 new systems arrived on the list.

In addition, the total performance of all the machines on the list continued to increase, rising from 223 petaflops in June to 250 petaflops.

Hewlett-Packard and IBM are the top supercomputer suppliers. HP won the race for the most systems, with 196 to IBM's 164 on the Top500. But IBM has more powerful systems; its machines accounted for a total of 66 petaflops of the list's total performance compared with 18 petaflops for HP.
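The gap between system count and total performance is easier to see as an average per machine. The short Python sketch below works only from the rounded figures quoted in this article, so the results are approximate; the `summarize` helper is a name chosen for illustration.

```python
# Rounded figures as quoted in the article for the November list.
LIST_TOTAL_PFLOPS = 250.0
VENDORS = {
    "IBM": (164, 66.0),  # (systems on the list, combined petaflops)
    "HP": (196, 18.0),
}


def summarize(systems, pflops, list_total):
    """Return (average teraflops per system, share of the list's total)."""
    avg_tflops = pflops * 1000 / systems  # 1 petaflops = 1,000 teraflops
    share = pflops / list_total
    return avg_tflops, share


if __name__ == "__main__":
    for name, (systems, pflops) in VENDORS.items():
        avg, share = summarize(systems, pflops, LIST_TOTAL_PFLOPS)
        print(f"{name}: about {avg:.0f} teraflops per system, "
              f"{share:.0%} of the list's total")
```

On those numbers, the average IBM system comes out at roughly 400 teraflops, against about 92 teraflops for the average HP system.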