Supercomputing simulation employs 156,000 Amazon processor cores

To simulate 205,000 molecules as quickly as possible for a USC research project, Cycle Computing fired up a mammoth number of Amazon servers around the globe.

Supercomputing, by definition, is never going to be cheap. But a company called Cycle Computing wants to make it more accessible by matching computing jobs with Amazon's mammoth computing infrastructure.

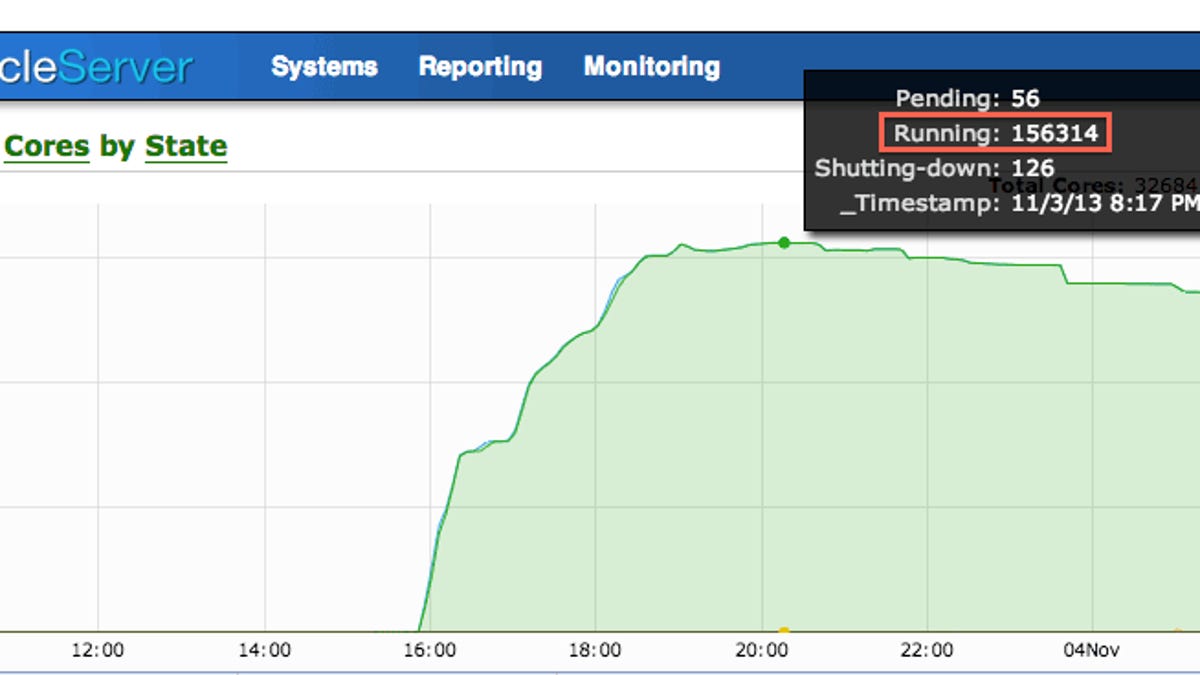

On Tuesday, the company announced a new record for its technology: a simulation by Mark Thompson of the University of Southern California to determine which of 205,000 organic compounds could be used in photovoltaic cells. The simulation drew on 156,314 processor cores at Amazon Web Services for 18 hours, testing the chemicals with Schrodinger Materials Science software for computational chemistry, Cycle Computing said in a blog post.

The job cost $33,000. That might sound expensive, but it's cheap compared with buying hardware that sits idle most of the time or that can't reach comparable peak performance. The job would have taken 264 years on a conventional computer, or 10.5 months on a dedicated 300-core in-house computing cluster that did nothing else, the company said.
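The reported figures make it easy to work out the scale and unit price of the run. The sketch below multiplies cores by hours and divides the bill by the result. It assumes that the $33,000 covered only compute and that all 156,314 cores ran for the full 18 hours; the announcement states neither.

```python
# Figures reported in the article
CORES = 156_314
HOURS = 18
COST_USD = 33_000


def core_hours(cores: int, hours: float) -> float:
    """Total compute consumed: number of cores multiplied by wall-clock hours."""
    return cores * hours


def cost_per_core_hour(cost: float, cores: int, hours: float) -> float:
    """Average price per core-hour.

    Assumption: the whole bill is compute; storage and data transfer
    are not broken out in the article.
    """
    return cost / core_hours(cores, hours)


if __name__ == "__main__":
    total = core_hours(CORES, HOURS)
    print(f"{total:,.0f} core-hours")  # 2,813,652 core-hours
    print(f"${cost_per_core_hour(COST_USD, CORES, HOURS):.4f} per core-hour")  # $0.0117 per core-hour
```

At roughly 2.8 million core-hours, that works out to a little over a cent per core-hour. The 264-year and 10.5-month comparisons are the company's own estimates and can't be reproduced from core-hours alone.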

It's an interesting approach for the modern era of computing, in which companies like Amazon, Google, and Microsoft operate mammoth data centers and customers can pay for huge amounts of computing power as they need it. Traditionally, such cloud-computing resources were used to add capacity when customers needed something extra to get through peak usage times, such as the holiday shopping season. Cycle Computing's approach, by contrast, is built around peak capacity: it compresses a computing job into a short spike of intense computing.

This job was particularly well suited to a quick-spike approach. Cycle Computing Chief Executive Jason Stowe told CNET that the task was "pleasantly parallel," meaning that different parts of the job could run independently of one another and, therefore, at the same time.
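A "pleasantly parallel" job can be illustrated in a few lines: each compound is scored on its own, so the work can be handed to many workers at once and the results simply collected. The sketch below is hypothetical throughout. `score_compound` is a toy stand-in, not the Schrodinger software, and it uses threads on one machine rather than cores spread across Amazon regions.

```python
from concurrent.futures import ThreadPoolExecutor


def score_compound(smiles: str) -> float:
    """Hypothetical stand-in for an expensive per-molecule calculation.

    Toy metric: the fraction of characters that are aromatic carbons ('c').
    It depends only on its own input, which is what makes the job
    pleasantly parallel.
    """
    return smiles.count("c") / len(smiles)


def screen(compounds: list[str], workers: int = 4) -> list[float]:
    """Score every compound concurrently; results come back in input order."""
    # Threads keep the sketch portable; a real CPU-bound job would use
    # separate processes or, as in the article, separate machines.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(score_compound, compounds))


if __name__ == "__main__":
    candidates = ["c1ccccc1", "CCO", "c1ccc2ccccc2c1"]
    print(screen(candidates))
```

Because no compound's result depends on another's, running the batch in parallel gives the same answers as running it one by one. Only the wall-clock time changes.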

Cycle Computing's software handles the dirty work, such as securing Amazon resources, uploading data, dealing with any outages, and making sure spending limits are observed.

To harness that much computing power, Cycle Computing drew on Amazon data centers around the world.

"In order for our software to compress the time it takes to run the computing as much as possible, we ran across eight regions to get maximum available capacity," Stowe said.

There's nothing stopping Cycle Computing from drawing on other resources, such as Google Compute Engine, but Stowe said its customers are using Amazon Web Services.

"To date, customers have only moved [Amazon Web Services] into production," he said. "In the end, we follow our customers."