Adobe Will Bring Its Firefly AI Tech to Video Creation

The company plans to bring the shiny smarts to its video-editing tools later this year.

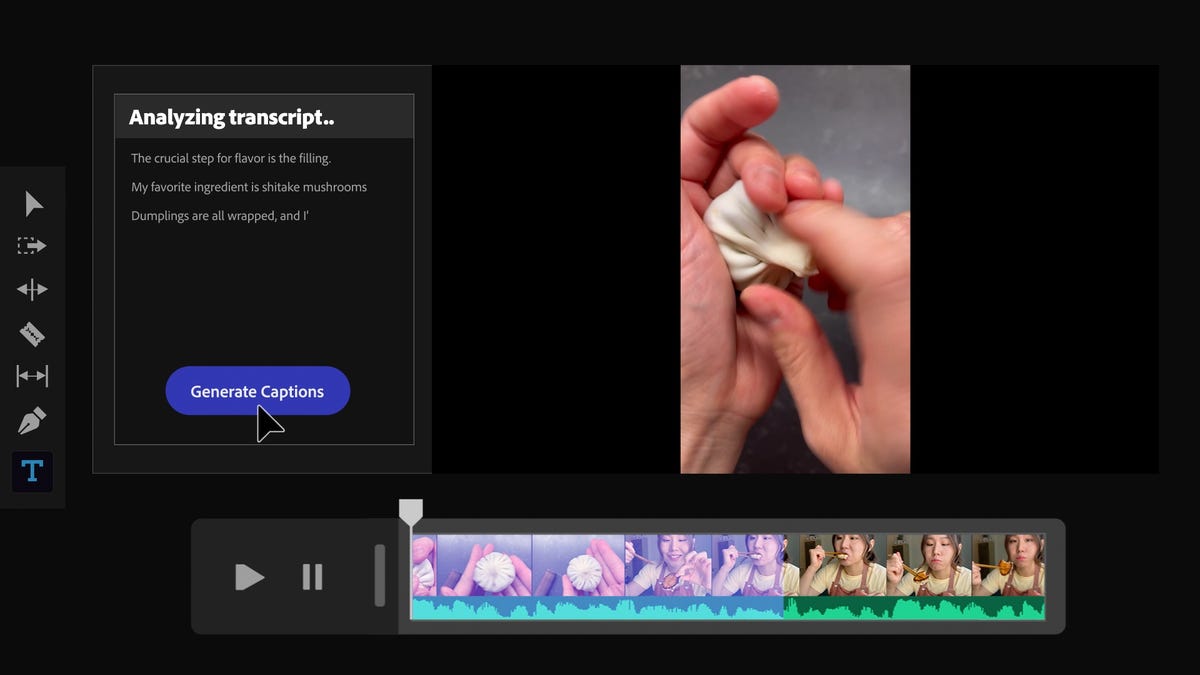

Firefly may be able to create captions from scripts and automatically match them to specific points in a clip. The linkage of specific points in a clip and the text in an automatically generated transcript is what drives the recently announced text-based editing capability.

At NAB 2023, Adobe previewed its concepts for integrating its Firefly generative AI technology into its video tools, including capabilities like generating visual and sound effects, animations, storyboards, captions and more from text prompts. The company says we'll start seeing Firefly in applications later in the year. My guess is it will be timed around Adobe's annual Creative Cloud conference, Adobe Max, which is usually in mid-October.

The company announced one of its coolest enhancements to Premiere Pro last week: AI/ML-assisted text-based video editing. (By "cool" I mean "wow, this could save a vacation's-worth of production time" rather than "wow, this has never been done before.") But the Firefly-driven capabilities skew more toward vision development than execution, promising faster, more automated cycling through potential looks, concepts and so on through all stages of video creation, production and post-production.

According to Adobe, it sees the technology enabling people to:

- Change color schemes, weather, sun angle and more using various levels of specificity in your text prompts

- Create sounds and music based on directions for the mood you're trying to set or the content of the scene

- Generate styles and animations for type and graphics from text directions

- Use imported scripts as prompts to visualize scenes and create storyboards as well as recommend b-roll clips and automatically generate captions

Adobe explicitly stated at launch that "Firefly will be integrated directly into Creative Cloud, Document Cloud, Experience Cloud and Adobe Express workflows," but the company says this rollout will be limited to the Creative Cloud video and audio applications. The mockup interfaces look very Rush-like, which could mean they're just proofs of concept, but it could also mean that the Firefly tools will be modal, similar to the way, say, Neural Filters or Select and Mask are in Photoshop.