It is surprisingly easy to bamboozle a self-driving car

Researchers fooled cameras into misreading signs with a few small tricks and a lot of math.

Sure, you might giggle every time you see a stop sign altered to read "STOP, Hammer Time," but if that sign caused your self-driving car to blow through an intersection, you might not enjoy it so much.

Researchers at the University of Washington and other schools published a paper, "Robust Physical-World Attacks on Machine Learning Models," which shows how some slight tweaks to standard road signs could cause a self-driving car's systems to freak out and mistake the sign for a different one.

The researchers created two different sorts of attacks on a self-driving car's systems, using a whole lot of math and a little bit of printing. Both involve gaining access to the car's classifier, the part of its vision system that tells the car what an object is and what it means to the vehicle. When the car's cameras detect an object, it's up to the classifier to determine how the car handles said object.

Your band's stickers probably won't have the same effect, unless your drummer is also a computer engineer and kind of a jerk.

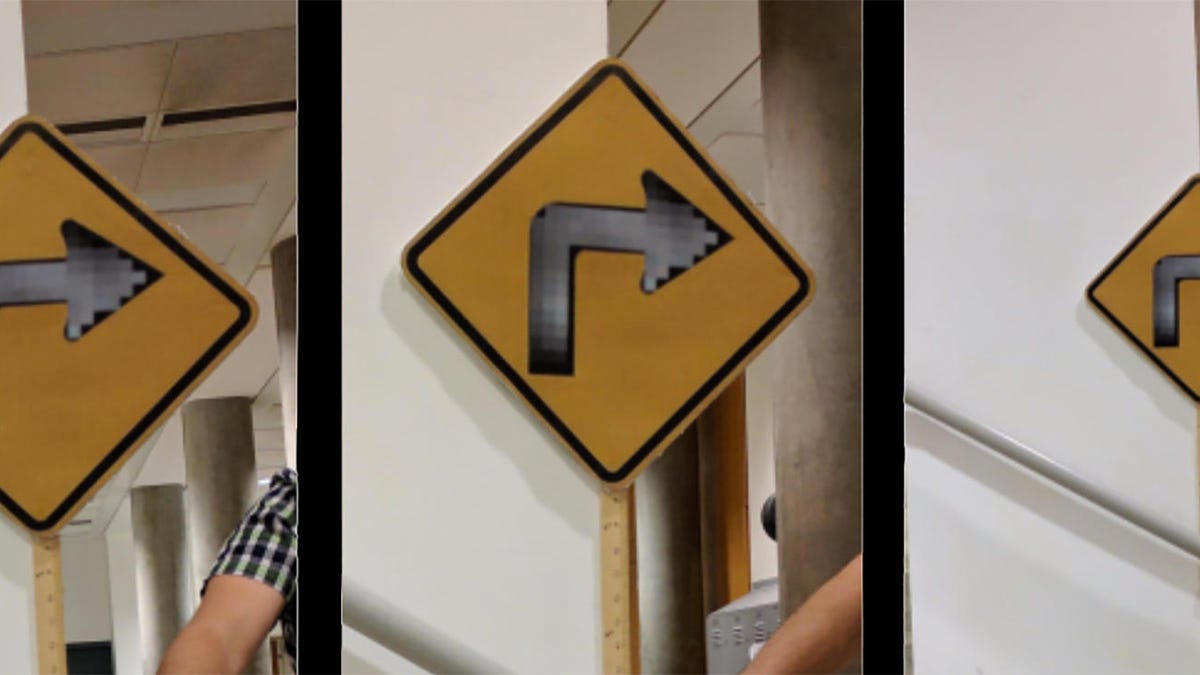

The first kind of attack involved printing out a life-size copy of a road sign and taping it over an existing one. A right-turn sign with a sort of grayed-out, pixelated arrow confused the system into believing it was either a stop sign or an added-lane sign, but not a right-turn sign. A vehicle fooled this way might attempt to stop when it doesn't need to, causing additional confusion on the road.

The second kind of attack involved small stickers with a sort of abstract-art look. These rectangular black-and-white stickers tricked the system into believing a stop sign was a 45-mph speed limit sign. It should be fairly obvious that nothing good can come from telling a car to hustle through an intersection at speed, as opposed to stopping like usual.
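For a rough feel of why a few well-placed stickers can flip a classifier's answer, here is a toy sketch in Python. It is not the researchers' method, which works against a real vision system. The six-pixel "sign," the two-class linear scorer, the weights, and every name below are made up for illustration, and the attacker is assumed to know the weights.

```python
# Toy illustration only: a two-class linear "classifier" over six pixel values.
# The weights, pixel values, and class names are invented for this sketch.

WEIGHTS = {
    "stop":           [0.9, 0.8, 0.7, 0.2, 0.1, 0.1],
    "speed_limit_45": [0.1, 0.2, 0.3, 0.8, 0.9, 0.9],
}

def scores(pixels):
    """Weighted sum of the pixels for each class."""
    return {
        label: sum(w * p for w, p in zip(ws, pixels))
        for label, ws in WEIGHTS.items()
    }

def classify(pixels):
    """Return the label with the highest score."""
    s = scores(pixels)
    return max(s, key=s.get)

def add_stickers(pixels, mask, true_label, target_label):
    """Change only the masked pixels, setting each to black (0.0) or
    white (1.0), whichever helps the target class more than the true one."""
    out = list(pixels)
    for i, covered in enumerate(mask):
        if covered:
            gain = WEIGHTS[target_label][i] - WEIGHTS[true_label][i]
            out[i] = 1.0 if gain > 0 else 0.0
    return out

clean_sign = [0.9, 0.9, 0.8, 0.2, 0.1, 0.2]
sticker_mask = [False, True, False, True, True, False]  # stickers cover 3 pixels
tampered_sign = add_stickers(clean_sign, sticker_mask, "stop", "speed_limit_45")

print(classify(clean_sign), "->", classify(tampered_sign))
```

Half of the toy sign is left untouched, yet the label still changes, because the stickers land on the pixels the scorer leans on most.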

Of course, this all hinges on whether malicious parties have access to a vehicle system's classifier, which may be the same across different automakers if they all purchase their systems from a single supplier. But one of the researchers told Car and Driver that someone clever enough to probe a system in the right ways could potentially reverse-engineer it and figure out how to trick it without having access to the classifier in question.

Thankfully, self-driving cars aren't rolling out tomorrow, so there's no need to incite mass panic. There are some ways around this already, including backup detection systems or something as simple as common sense, since you're probably not going to see a 45-mph speed limit sign in the middle of a tiny suburban avenue.
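The "common sense" idea could look something like the following Python sketch, which refuses to trust a speed limit that doesn't fit the kind of road the car is on. The road types, speed ranges, and function name are assumptions for illustration, not anything taken from the paper or a real vehicle.

```python
# Hypothetical plausibility check. Road types and ranges are assumed values.
PLAUSIBLE_LIMITS_MPH = {
    "residential": range(15, 36),   # 15 to 35 mph
    "arterial": range(25, 56),      # 25 to 55 mph
    "highway": range(45, 86),       # 45 to 85 mph
}

def accept_speed_limit(detected_mph, road_type):
    """Return True only when the detected limit is believable for the road."""
    plausible = PLAUSIBLE_LIMITS_MPH.get(road_type)
    if plausible is None:
        return False  # unknown road type: don't trust the sign on its own
    return detected_mph in plausible

print(accept_speed_limit(45, "residential"))  # the stickered stop sign case
print(accept_speed_limit(25, "residential"))
```

A check like this wouldn't stop the classifier from being fooled, but it would give the car a reason to ignore a 45-mph reading on a tiny suburban avenue.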

So, for all the children who can't wait to print out "Speed Limit 150" signs in anticipation of autonomous vehicles, perhaps those efforts could be put to better use.