Why You Can Trust CNET

Why facial recognition's racial bias problem is so hard to crack

Good luck if you're a woman or a darker-skinned person.

Jimmy Gomez is a California Democrat, a Harvard graduate and one of the few Hispanic lawmakers serving in the US House of Representatives.

But to Amazon's facial recognition system, he looks like a potential criminal.

Gomez was one of 28 US Congress members falsely matched with mugshots of people who've been arrested, as part of a test the American Civil Liberties Union ran last year of the Amazon Rekognition program.

Nearly 40 percent of the false matches by Amazon's tool, which is being used by police, involved people of color.

This is part of a CNET special report exploring the benefits and pitfalls of facial recognition.

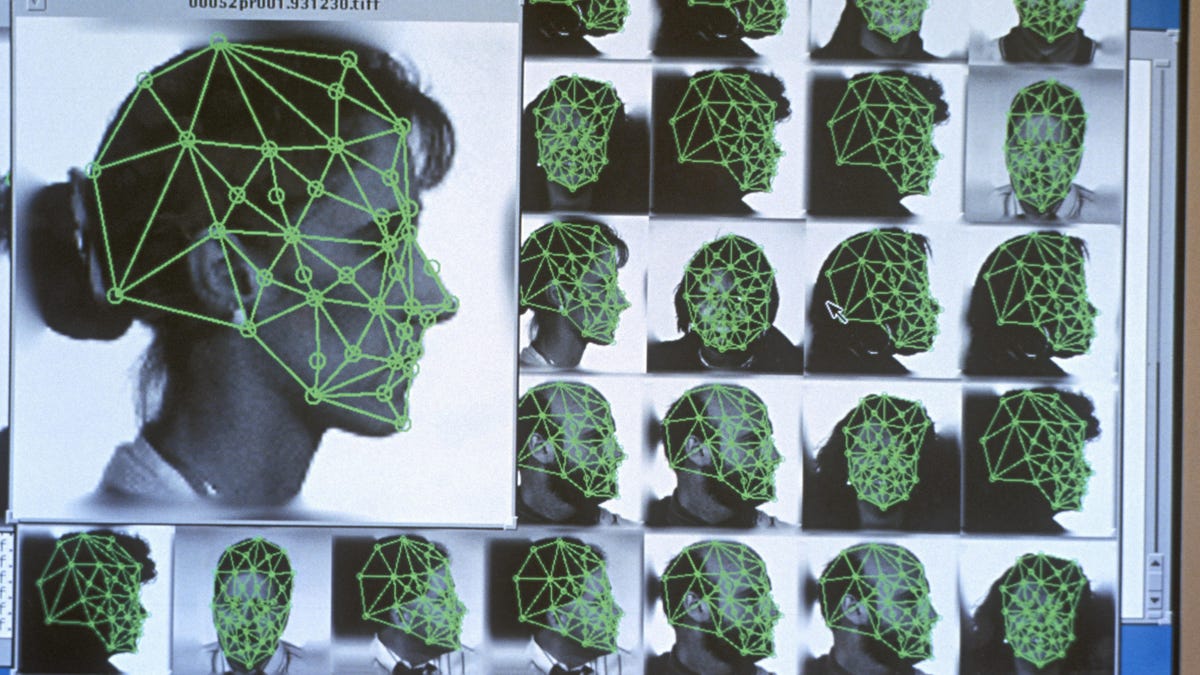

The findings reinforce a growing concern among civil liberties groups, lawmakers and even some tech firms that facial recognition could harm minorities as the technology becomes more mainstream. A form of the tech is already being used on iPhones and Android phones, and police, retailers, airports and schools are slowly coming around to it too. But studies have shown that facial recognition systems have a harder time identifying women and darker-skinned people, which could lead to disastrous false positives.

"This is an example of how the application of technology in the law enforcement space can cause harmful consequences for communities who are already overpoliced," said Jacob Snow, technology and civil liberties attorney for the ACLU of Northern California.

Facial recognition has its benefits. Police in Maryland used the technology to identify a suspect in a mass shooting at the Capital Gazette. In India, it's helped police identify nearly 3,000 missing children within four days. Facebook uses the technology to identify people in photos for the visually impaired. It's become a convenient way to unlock your smartphone.

But the technology isn't perfect, and there've been some embarrassing public blunders. Google Photos once labeled two black people as gorillas. In China, a woman claimed that her co-worker was able to unlock her iPhone X using Face ID. The stakes of being misidentified are heightened when law enforcement agencies use facial recognition to identify suspects in a crime or unmask people in a protest.

"When you're selling [this technology] to law enforcement to determine if that individual is wanted for a crime, that's a whole different ball game," said Gomez. "Now you're creating a situation where mistaken identity can lead to a deadly interaction between law enforcement and that person."

The lawmaker wasn't shocked by the ACLU's findings, noting that tech workers are often thinking more about how to make something work and not enough about how the tools they build will impact minorities.

Tech companies have responded to the criticism by improving the data used to train their facial recognition systems, but like civil rights activists, they're also calling for more government regulation to help safeguard the technology from being abused. One in two American adults is in a facial recognition network used by law enforcement, researchers at Georgetown Law School estimate.

Amazon pushed back against the ACLU study, arguing that the organization used the wrong settings when it ran the test.

"Machine learning is a very valuable tool to help law enforcement agencies, and while being concerned it's applied correctly, we should not throw away the oven because the temperature could be set wrong and burn the pizza," Matt Wood, general manager of artificial intelligence at Amazon Web Services, said in a blog post.

Recognition problem

There are various reasons why facial recognition services might have a harder time identifying minorities and women compared with white men.

Facial recognition technology has a harder time identifying people with darker skin, studies show.

Public photos that tech workers use to train computers to recognize faces could include more white people than minorities, said Clare Garvie, a senior associate at Georgetown Law School's Center on Privacy and Technology. If a company uses photos from a database of celebrities, for example, it would skew toward white people because minorities are underrepresented in Hollywood.

Engineers at tech companies, whose workforces are made up mostly of white men, might also be unwittingly designing the facial recognition systems to work better at identifying certain races, Garvie said. Studies have shown that people have a harder time recognizing faces of another race, and that "cross-race bias" could be spilling into artificial intelligence. Then there are challenges dealing with the lack of color contrast on darker skin, or with women using makeup to hide wrinkles or wearing their hair differently, she added.

Facial recognition systems made by Microsoft, IBM and Face++ had a harder time identifying the gender of dark-skinned women, such as African-Americans, than that of light-skinned men, such as Caucasians, according to a study conducted by researchers at the MIT Media Lab. The systems misidentified the gender of 35 percent of dark-skinned women, compared with 1 percent of light-skinned men.

Another study by MIT, released in January, showed that Amazon's facial recognition technology had an even harder time than tools by Microsoft or IBM identifying the gender of dark-skinned women.
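The comparison behind studies like these comes down to computing error rates separately for each group rather than one overall figure. A minimal Python sketch of that idea follows. The function name, group labels and records are all made up for illustration; this is not the MIT researchers' code or data.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the share of wrong predictions for each group.

    `records` is an iterable of (group, true_label, predicted_label)
    tuples. The layout is an assumption made for this sketch.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if truth != predicted:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Toy records invented for this example; they are NOT study results.
toy_records = [
    ("darker-skinned women", "female", "male"),
    ("darker-skinned women", "female", "female"),
    ("darker-skinned women", "female", "female"),
    ("darker-skinned women", "female", "male"),
    ("lighter-skinned men", "male", "male"),
    ("lighter-skinned men", "male", "male"),
    ("lighter-skinned men", "male", "male"),
    ("lighter-skinned men", "male", "male"),
]

rates = error_rate_by_group(toy_records)

# A single overall error rate hides the gap between the two groups.
overall = sum(1 for _, t, p in toy_records if t != p) / len(toy_records)

print(rates)
print(overall)
```

In this toy data the overall error rate looks moderate even though every mistake falls on one group, which is why a single accuracy figure can mask this kind of bias.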

The role of tech companies

Amazon disputed the results of the MIT study, and a spokeswoman pointed to a blog post that called the research "misleading." The company argued that the researchers used "facial analysis," which identifies characteristics of a face such as gender or a smile, rather than facial recognition, which matches a person's face to similar faces in photos or videos.
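The distinction can be illustrated with a rough Python sketch. It assumes each face has already been reduced to a short list of numbers, and every name, vector and threshold below is hypothetical. It does not reflect how Rekognition or any other product works internally.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two vectors, from -1 (opposite) to 1 (identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def analyze(face):
    """Facial analysis: describe one face. The rule here is a toy stand-in."""
    return {"smiling": face[1] > 0.5}


def recognize(probe, gallery, threshold):
    """Facial recognition: list gallery entries that resemble the probe face.

    A match is any entry whose similarity meets the (hypothetical) threshold.
    """
    return sorted(
        name
        for name, face in gallery.items()
        if cosine_similarity(probe, face) >= threshold
    )


# Made-up vectors standing in for faces.
probe = [1.0, 0.0]
gallery = {
    "person_a": [1.0, 0.1],
    "person_b": [0.8, 0.6],
    "person_c": [0.0, 1.0],
}

print(analyze(probe))
strict_matches = recognize(probe, gallery, threshold=0.99)
loose_matches = recognize(probe, gallery, threshold=0.75)
print(strict_matches)
print(loose_matches)
```

With the stricter threshold only the closest entry comes back, while the looser one returns a second candidate as well. A matching threshold is one example of the kind of setting that changes how many matches a test reports.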

"Facial analysis and facial recognition are completely different in terms of the underlying technology and the data used to train them," Wood said in a blog post about the MIT study. "Trying to use facial analysis to gauge the accuracy of facial recognition is ill-advised, as it's not the intended algorithm for that purpose."

That's not to say the tech giants aren't thinking about racial bias.

Microsoft, which offers a facial recognition tool through Azure Cognitive Services, said last year that it reduced the error rates for identifying women and darker-skinned men by up to 20 times.

IBM made a million-face dataset to help reduce bias in facial recognition technology.

A spokesperson for Facebook, which uses facial recognition to tag users in photos, said that the company makes sure the data it uses is "balanced and reflect the diversity of the population of Facebook." Google pointed to principles it published about artificial intelligence, which include a prohibition against "creating or reinforcing unfair bias."

Aiming to advance the study of fairness and accuracy in facial recognition, IBM released a data set for researchers in January called Diversity in Faces, which looks at more than just skin tone, age and gender. The data includes 1 million images of human faces, annotated with tags such as face symmetry, nose length and forehead height.

"We have all these subjective and loose notions of what diversity means," said John Smith, lead scientist of Diversity in Faces at IBM. "So the intention for IBM to create this data set was to dig into the science of how can we really measure the diversity of faces."

The company, which collected the images from the photo site Flickr, faced criticism this month from some photographers, experts and activists for not informing people their images were being used to improve facial recognition technology. In response, IBM said it takes privacy seriously and users could opt out of the data set.

Amazon has said that it uses training data that reflects diversity and that it's educating customers about best practices. In February, it released guidelines it says lawmakers should take into account as they consider regulation.

"There should be open, honest and earnest dialogue among all parties involved to ensure that the technology is applied appropriately and is continuously enhanced," Michael Punke, Amazon's vice president of global public policy, said in a blog post.

Clear rules needed

Even as tech companies strive to improve the accuracy of their facial recognition technology, concerns that the tools could be used to discriminate against immigrants or minorities aren't going away. In part that's because people still wrestle with bias in their personal lives.

Law enforcement and government could still use the technology to identify political protestors or track immigrants, putting their freedom at risk, civil rights groups and experts argue.

"A perfectly accurate system also becomes an incredibly powerful surveillance tool," Garvie said.

Civil rights groups and tech firms are calling for the government to step in.

"The only effective way to manage the use of technology by a government is for the government proactively to manage this use itself," Microsoft President Brad Smith wrote in a blog post in July. "And if there are concerns about how a technology will be deployed more broadly across society, the only way to regulate this broad use is for the government to do so."

The ACLU has called on lawmakers to temporarily prohibit law enforcement from using facial recognition technology. Civil rights groups have also sent a letter to Amazon asking that it stop providing Rekognition to the government.

Some lawmakers and tech firms, such as Amazon, have asked the National Institute of Standards and Technology, which evaluates facial recognition technologies, to endorse industry standards and ethical best practices for racial bias testing of facial recognition.

For lawmakers like Gomez, the work has only begun.

"I'm not against Amazon," he said. "But when it comes to a new technology that can have a profound impact on people's lives -- their privacy, their civil liberties -- it raises a lot of questions."