Snapchat's newest AR effects work with the lidar on Apple's iPhone 12 Pro and iPad Pro

Lens Studio is updated to allow world-scanning effects, and more lenses could come.

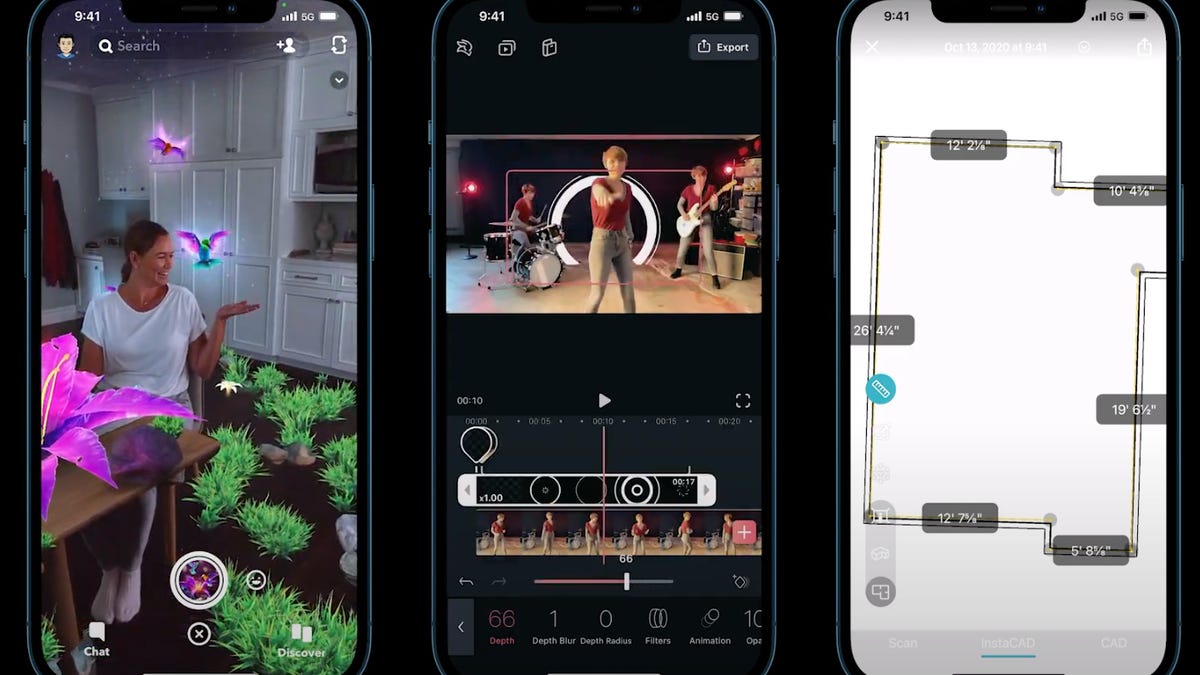

Snapchat's next wave of lenses could start using a lot more depth sensing via the iPhone 12 Pro's lidar.

The new iPhone 12 phones have improved cameras and 5G, but the iPhone 12 Pro models add 3D scanning and more advanced augmented reality via added lidar sensors. Snapchat is already updating its Lens Studio software for AR creators to take advantage of the iPhone 12 Pro's lidar, anticipating a new wave of apps that could scan the world in a lot more detail.

Apple already put lidar depth sensing on the spring iPad Pro update, which can measure and map space up to a few meters away from the sensor. Apple's ARKit tools in iOS 14 already allow for lidar to expand on Apple's AR capabilities. Snapchat didn't build tools for the iPad Pro's lidar at the time, however, because Snap is largely phone-based. Still, Snapchat had been building out ways to scan the world and overlay AR effects in real-world locations before the iPhone 12 Pro's lidar arrived, and has also worked with Google's ARCore depth-sensing capabilities to develop similar ideas on Android.

Snap's new AR tools in the newest version of Lens Studio also work with the 2020 iPad Pro, and Lens Studio can preview lenses with a simulation of the effects that Apple's lidar-equipped devices would enable.

Eitan Pilipski, Snap's SVP of Camera Platform, spoke to CNET about the updates. He sees lidar as more accurate, and as a continuation of Snap's effort to marry algorithms with new sensors. Pilipski sees depth sensing as "very foundational" to where Snap is going next, which is mainly toward more world-scanning tech as opposed to face filters. Snap has already explored some of these ideas with the company's Spectacles smart glasses, but the added range of lidar could push the envelope further.

The number of iPhone 12 Pro models out in the wild will also likely be a lot larger than the number of 2020 iPad Pro devices sold, which is another key part of the picture. AR headsets like the HoloLens 2 and Magic Leap are few and far between and are targeted at business use, while the iPhone 12 Pro looks set to be the most widespread room-scanning AR device. For that reason alone, it could end up being a driving force for more AR creative tools. But in Snapchat's case, lidar also seems like one more tool in a depth-sensing toolbox.