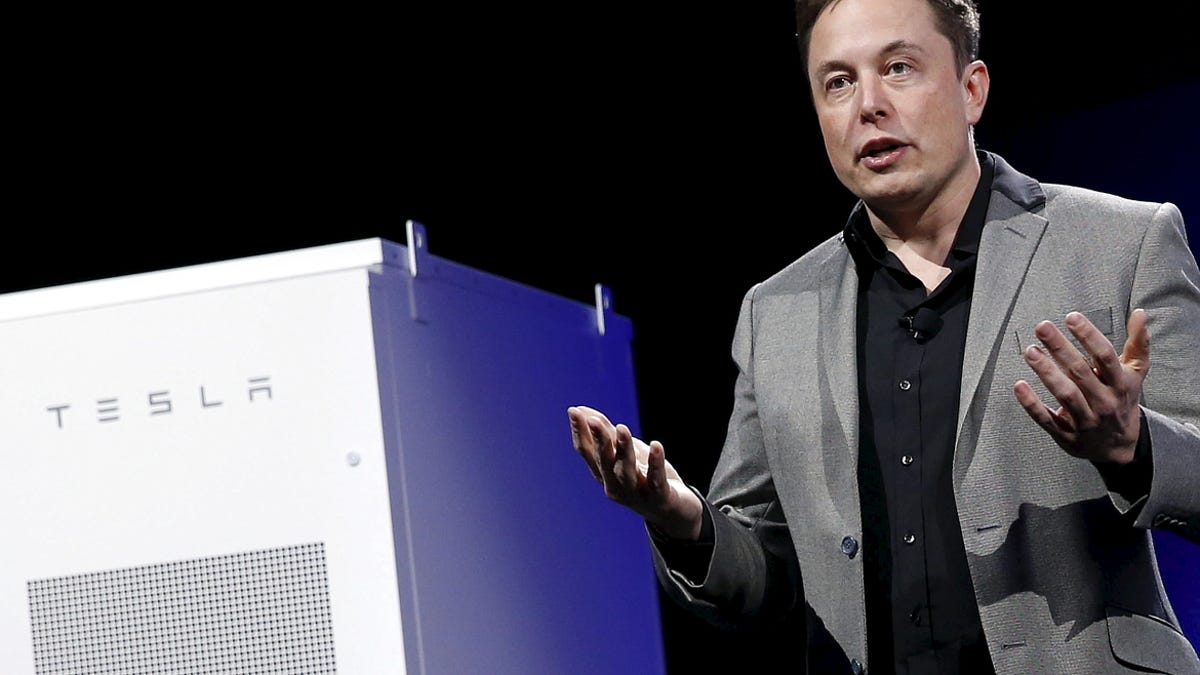

Elon Musk helps fund AI to protect humanity

OpenAI, a research group launched with $1 billion in pledges from Elon Musk, Sam Altman, Peter Thiel and others, aims to make sure artificial intelligence doesn't run amok.

As private companies strive to see if artificial intelligence can eventually exceed human intelligence, it might comfort some to know a new nonprofit has humanity's back.

OpenAI, a research company backed by $1 billion in pledged donations from tech heavy-hitters including Elon Musk, Sam Altman and Peter Thiel, launched Friday with the goal of advancing artificial intelligence, or AI. The field is promising but controversial: The worry is that machines equipped with supersmart technology could pose a danger to humans.

"It's hard to fathom how much human-level AI could benefit society, and it's equally hard to imagine how much it could damage society if built or used incorrectly," the organization said in a statement. "It's hard to predict when human-level AI might come within reach. When it does, it'll be important to have a leading research institution which can prioritize a good outcome for all over its own self-interest."

Musk, Altman and Thiel's interest in AI matters because all three already have a hand in building the future. Musk helped pioneer online payments as a co-founder of PayPal. Now he's upending modern transportation as the CEO of electric-car maker Tesla and private space company SpaceX. Altman is president of Y Combinator, the startup accelerator that helped launch Airbnb, Dropbox and Twitch. Thiel, a major Silicon Valley investor, co-founded PayPal and was an early investor in Facebook. In other words, the technology they touch tends to make a splash.

"As a nonprofit, our aim is to build value for everyone rather than shareholders," the statement says. It also notes that researchers will be encouraged to share their work openly and that any patents the organization obtains will be shared with the world.

AI refers to the ability of a machine, computer or system to exhibit humanlike intelligence, and research in the field has lately been dominated by large tech companies such as Google and Facebook. Some industry watchers, including Musk and Microsoft co-founder Bill Gates, have grown concerned about how far AI could go and about its potential dangers. In August 2014, Musk warned that AI could be more dangerous than nuclear weapons. Even famed physicist Stephen Hawking has voiced reservations about AI.

"One can imagine such technology outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we cannot even understand," Hawking said in an article he co-wrote in May 2014 for The Independent. "Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all."

OpenAI isn't the first effort to ensure artificial intelligence doesn't get out of hand. AI experts around the globe signed an open letter issued in January by the Future of Life Institute that pledges to safely and carefully coordinate progress in the field. Signatories included the co-founders of DeepMind, the British AI company purchased by Google in January 2014; MIT professors; and tech industry researchers, including members of IBM's Watson supercomputer team and Microsoft Research. Musk also signed the letter.

The new nonprofit's research is led by Ilya Sutskever, a research scientist at Google, who will serve as its research director; Greg Brockman, formerly of Stripe, will serve as CTO. The organization is co-chaired by Musk and Altman.

The bottom line: Keep the machines working in the best interests of the people who build and use them, the organization's announcement said. "We believe AI should be an extension of individual human wills."