Project Soli is the secret star of Google's Pixel 4 self-leak

How Soli's radar-powered tech could evolve into watches, smart home equipment and a lot more.

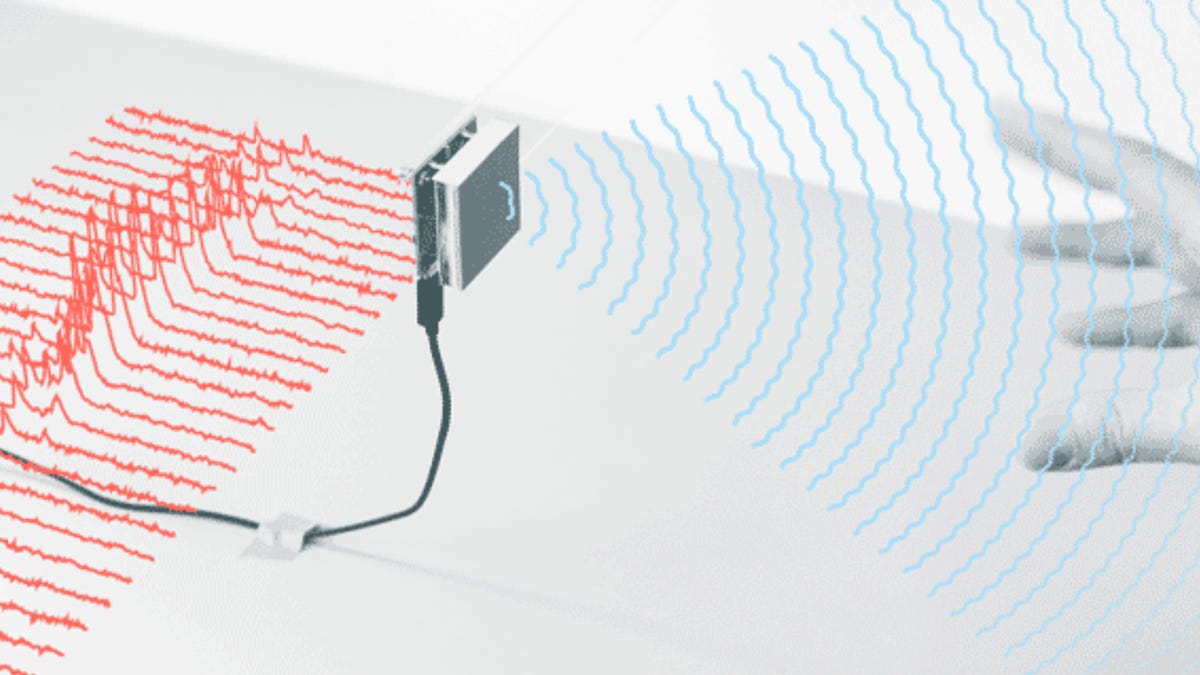

Soli is radar, and it could go well beyond the Pixel phone.

If you're looking for a new, weird thing to do with your next phone, consider hands-free use. Google's upcoming Pixel 4 is going to add a hands-free twist to its front-camera array: In addition to facial recognition, it'll sense motion and hand gestures.

This technology, originally called Project Soli, uses radar to detect 3D movement in space. It arrives alongside new face-scanning infrared camera tech similar to Face ID on Apple's iPhone X. Google's Soli tech received FCC approval earlier this year and was expected to appear in the Pixel 4 even before Google confirmed it.

So what does this mean, and why is Google announcing all this news now? Google's upcoming October phone aims to use waves and air swipes to silence calls, skip songs and trigger other shortcuts. But this could be about a lot more than just phones. In fact, it could be Google's attempt at building a new interface to its ambient computing ecosystem, as Pixel product manager Brandon Barbello hints in Google's latest Pixel-teasing blog post.

Soli comes from Google's ATAP (Advanced Technology and Projects) group, which also created the smart fabric Project Jacquard, Google's first phone-based AR tech, Tango, and the modular Project Ara phone, which never shipped.

If you want to know more about what Google envisions Soli being next, here's a good place to start.

No camera required

Even though the Pixel 4 has a full new front-facing camera array that uses an infrared dot projector to scan faces, just like the iPhone X, Soli's radar chip doesn't need a camera to do its work. In fact, Project Soli debuted back in 2015 at Google's I/O developer conference, where I got to see it in a brief demo. Back then, it was being used to control sound with finger gestures in the air, no remote needed.

The Soli radar chip is just one part of the Pixel 4's front-facing array.

You could technically use the iPhone X's front-facing TrueDepth camera, or the Pixel 4's similar infrared-equipped camera, to recognize hand gestures. But you'd need the camera to be on and looking. The advantage of Soli is that, in theory, it could be working at all times.

And because it works without a camera, Soli could bring motion control to devices that stay camera-free. That means you wouldn't need to stud all your connected home tech with cameras.

It could work through clothes or in the dark

Google's Soli page describes how the radar tech in the Soli chip can work through fabric. On a phone, maybe it could work through your pants pocket? Apple's Face ID already works in the dark, but Soli's ability to work through certain materials (it's radar) could make it a candidate to embed in walls, dashboards or other objects. It seems like the perfect tech to put in a light switch or door panel.

Smart home devices next

I think about Soli, and I think about Google Home. If I could combine voice with a gesture, maybe I could invoke a command without saying "Hey Google." Or I could dim the lights by raising and lowering my hand, or adjust the volume the same way.

Google already demonstrated Soli working with a smart speaker back in 2016. In fact, that was the first killer use case for Soli. Now that it's emerging in a phone, putting it in speakers, or even a Nest device, seems like an obvious move.

A Pixel watch, screen-free wearables or clothes

Google's other demo of Soli, back at its 2016 developer event, involved controlling a watch hands-free, with fine-tuned gestures above the watch display. Maybe that seems silly when you already have a watch display to touch, but imagine a Pixel watch that used Soli for simple gesture controls performed near the watch: You could use it to answer calls, silence alerts, launch a workout or who knows what else.

Or maybe it could lead to wearables with no displays, where Soli is used as the interface. My mind leaps to Project Jacquard, a smart fabric that launched in Levi's denim jackets. Jacquard needs to be touched to work. Could future smart clothing work with hands-free gestures? Who knows.

Car dashboards

I'm already uncertain about using voice when I'm driving, but it's better than operating a touchscreen. Soli's wider-range hand gestures seem like they could be a useful way to control things without looking. I say this with a lot of hesitation, since we don't know enough about driving safety while using something like Soli. But it could make it possible to adjust the volume or accept a new route suggestion.

Perhaps a killer tool for AR headsets

One thing that leapt out to me about Soli was its ability to recognize finger gestures at a distance. That's exactly what many mixed-reality headsets such as Microsoft HoloLens have been striving for. Hand gestures are still a holy grail of sorts for AR, and Leap Motion's never-fulfilled Project North Star headset prototype was trying to solve for hand gestures, too. I don't know how refined Soli's 3D sensing is, but if Google wants to bring hand input to a future version of Google Glass, which is still around and still evolving, I'd be curious to know if Soli could be the answer.

I'm still skeptical

Air gestures sound like the future and I love future tech. But I've seen attempts at hands-free control systems fail before. The Microsoft Kinect, which allowed me to totally control a game console with my hands from my sofa, never ended up being a substitute for just picking up a game controller or TV remote. The LG G8 ThinQ phone added camera-based air gestures to operate volume and music playback by hovering over the phone, but pulling off the moves felt like performing a complicated magic trick. Samsung's had gesture controls in phones and TVs for years, but do you even remember them?

For air gestures to work, they'll need to feel precise and always responsive. Any failure and I'll feel like I'm flailing. And if that happens, even a few times, I'll go back to voice, touch or whatever else works better.

What I remember of the Soli demo I saw years ago is that it worked.

While the Pixel 4 will have a lot of new tech onboard when it arrives in October, Project Soli seems like a test drive of a platform that could end up spreading well beyond phones.