Computational photography: Why Google says its Pixel 4 camera will be so damn good

What’s the secret behind the best phone cameras? Something called "computational photography." It's high-tech, but Google takes inspiration from historic Italian painters.

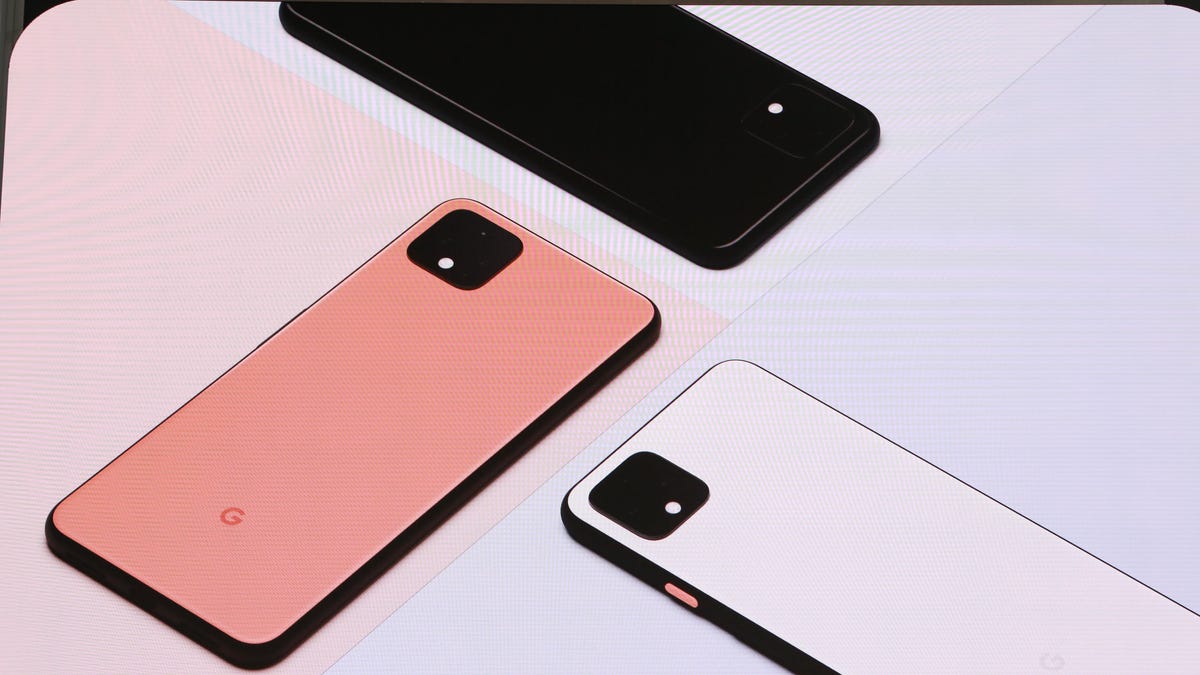

The Pixel 4 has three cameras and uses computational photography under the hood.

When Google announced the camera of its new Pixel 4 on Tuesday, it boasted about the computational photography that makes the phone's photos even better, from low-light photography with Night Sight to improved portrait tools that identify and separate hairs and pet fur. You can even take photos of the stars. It's all made possible by digital processing that can dramatically improve your camera shots, helping your phone match, and in some ways surpass, even expensive cameras.


Google isn't alone. Apple marketing chief Phil Schiller in September boasted that the iPhone 11's new computational photography abilities are "mad science."

But what exactly is computational photography?

In short, it's digital processing to get more out of your camera hardware -- for example, by improving color and lighting while pulling details out of the dark. That's really important given the limitations of the tiny image sensors and lenses in our phones, and the increasingly central role those cameras play in our lives.

Heard of terms like Apple's Night Mode and Google's Night Sight? Those modes that extract bright, detailed shots out of difficult dim conditions are computational photography at work. But it's showing up everywhere. It's even built into Phase One's $57,000 medium-format digital cameras.

First steps: HDR and panoramas

One early computational photography benefit is called HDR, short for high dynamic range. Small sensors aren't very sensitive, which makes them struggle with both bright and dim areas in a scene. But by taking two or more photos at different brightness levels and then merging the shots into a single photo, a digital camera can approximate a much higher dynamic range. In short, you can see more details in both bright highlights and dark shadows.
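To make the merging idea concrete, here's a minimal Python sketch. It isn't any phone maker's actual pipeline. It assumes a made-up three-pixel scene, a simulated sensor that clips at 1.0, and a simple "hat" weighting that trusts mid-tones more than clipped shadows or highlights. The names `capture`, `hat_weight` and `merge_hdr` are invented for illustration.

```python
def capture(scene, exposure):
    """Simulate a sensor that clips at 1.0 (blown-out highlights)."""
    return [min(1.0, radiance * exposure) for radiance in scene]


def hat_weight(value):
    """Trust mid-tones most; values near 0 or 1 get almost no weight."""
    return max(1e-6, 1.0 - abs(2.0 * value - 1.0))


def merge_hdr(frames, exposures):
    """Blend bracketed frames into one estimate of relative scene brightness."""
    merged = []
    for pixels in zip(*frames):
        weights = [hat_weight(v) for v in pixels]
        # Dividing by exposure puts every frame on the same brightness scale.
        total = sum(w * v / t for w, v, t in zip(weights, pixels, exposures))
        merged.append(total / sum(weights))
    return merged


# Hypothetical scene: a deep shadow, a mid-tone and a very bright sky.
scene = [0.02, 0.5, 6.0]
exposures = [0.125, 2.0]  # one dark frame, one bright frame
frames = [capture(scene, t) for t in exposures]
merged = merge_hdr(frames, exposures)
print(merged)
```

The bright frame clips the sky, but the dark frame still records it, so the merged result recovers a value close to the original for all three pixels.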

There are drawbacks. Sometimes HDR shots look artificial. You can get artifacts when subjects move from one frame to the next. But the fast electronics and better algorithms in our phones have steadily improved the approach since Apple introduced HDR with the iPhone 4 in 2010. HDR is now the default mode for most phone cameras.

Google took HDR to the next level with its HDR Plus approach. Instead of combining photos taken at dark, ordinary and bright exposures, it captured a larger number of dark, underexposed frames. Artfully stacking these shots together let it build up to the correct exposure, but the approach did a better job with bright areas, so blue skies looked blue instead of washed out. And it helps cut down the color speckles called noise that can mar an image.
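Why does stacking cut noise? Random speckle differs from frame to frame, so averaging tends to cancel it out. The Python sketch below illustrates only that statistical effect. It assumes perfectly aligned frames and simple Gaussian noise, which real burst photography can't count on, and the brightness level, noise amount and gain are made-up numbers.

```python
import random
import statistics


def capture_underexposed(true_level, noise_sigma, n_pixels, rng):
    """Simulate a dark frame of a flat gray patch with random sensor noise."""
    return [true_level + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]


def merge_burst(frames):
    """Average the same pixel across all frames (assumes perfect alignment)."""
    return [sum(values) / len(values) for values in zip(*frames)]


rng = random.Random(4)  # fixed seed so the run is repeatable
burst = [capture_underexposed(0.1, 0.02, 2000, rng) for _ in range(9)]

gain = 4.0  # brighten the dark frames up to the intended exposure
single = [gain * v for v in burst[0]]
merged = [gain * v for v in merge_burst(burst)]

noise_single = statistics.pstdev(single)
noise_merged = statistics.pstdev(merged)
ratio = noise_single / noise_merged
print(ratio)  # statistically close to sqrt(9) = 3 for a nine-frame burst
```

Brightening one dark frame amplifies its noise along with the signal. Averaging nine frames first leaves roughly a third as much noise to amplify.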

Apple embraced the same idea, Smart HDR, in the iPhone XS generation in 2018.

Panorama stitching, too, is a form of computational photography. Joining a collection of side-by-side shots lets your phone build one immersive, superwide image. When you consider all the subtleties of matching exposure, colors and scenery, it can be a pretty sophisticated process. Smartphones these days let you build panoramas just by sweeping your phone from one side of the scene to the other.

Google Pixel phones offer a portrait mode to blur backgrounds. The phone judges depth with machine learning and a specially adapted image sensor.

Seeing in 3D

Another major computational photography technique is seeing in 3D. Apple uses dual cameras to see the world in stereo, just like you can because your eyes are a few inches apart. Google, with only one main camera on its Pixel 3, has used image sensor tricks and AI algorithms to figure out how far away elements of a scene are.

The biggest benefit is portrait mode, the effect that shows a subject in sharp focus but blurs the background into that creamy smoothness -- "nice bokeh," in photography jargon.

It's what high-end SLRs with big, expensive lenses are famous for. What SLRs do with physics, phones do with math. First they turn their 3D data into what's called a depth map, a version of the scene that knows how far away each pixel in the photo is from the camera. Pixels that are part of the subject up close stay sharp, but pixels behind are blurred with their neighbors.
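Here's a minimal Python sketch of that last step, working on a single row of pixels. It's a simplification rather than how any phone actually renders bokeh: it assumes a ready-made depth map, a hard cutoff between "subject" and "background," and a plain box blur. The function name `portrait_blur` and the sample numbers are invented for illustration.

```python
def portrait_blur(pixels, depth_map, focus_limit, radius=2):
    """Keep near pixels sharp; average far pixels with their neighbors."""
    result = []
    for i, (value, depth) in enumerate(zip(pixels, depth_map)):
        if depth <= focus_limit:
            result.append(value)  # part of the subject: leave untouched
        else:
            lo = max(0, i - radius)
            hi = min(len(pixels), i + radius + 1)
            window = pixels[lo:hi]
            result.append(sum(window) / len(window))  # simple box blur
    return result


# Hypothetical row: a busy background with a subject in the middle.
row = [10, 200, 10, 200, 90, 95, 10, 200]
depth = [5.0, 5.0, 5.0, 5.0, 1.0, 1.0, 5.0, 5.0]  # distance in meters
blurred = portrait_blur(row, depth, focus_limit=2.0, radius=1)
print(blurred)
```

Note that this naive blur lets subject pixels bleed into the background right at the boundary. Handling edges like hair gracefully is a large part of what makes real portrait modes hard.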

Google's Pixel 4 gathers stereoscopic data from two separate measurements -- the distance from one side of the lens on the main camera to the other, plus the distance from the main camera to the telephoto camera. The first helps with close subjects, the second with ones more distant, and the combination of both helps improve tricky photo elements like flyaway hair.

Portrait mode technology can be used for other purposes. It's also how Apple enables its studio lighting effect, which revamps photos so it looks like a person is standing in front of a black or white screen.

Depth information also can help break down a scene into segments so your phone can do things like better match out-of-kilter colors in shady and bright areas. Google doesn't do that, at least not yet, but it's raised the idea as interesting.

With a computational photography feature called Night Sight, Google's Pixel 3 smartphone can take a photo that challenges a shot from a $4,000 Canon 5D Mark IV SLR. The Canon's larger sensor outperforms the phone's, but the phone combines several shots to reduce noise and improve color.

Night vision

One happy byproduct of the HDR Plus approach was Night Sight, introduced on the Google Pixel 3 in 2018. It used the same technology -- picking a steady master image and layering on several other frames to build one bright exposure.

Apple followed suit in 2019 with Night Mode on the iPhone 11 and 11 Pro phones.

These modes address a major shortcoming of phone photography: blurry or dark photos taken at bars, restaurants, parties and even ordinary indoor situations where light is scarce. In real-world photography, you can't count on bright sunlight.

Night modes have also opened up new avenues for creative expression. They're great for urban streetscapes with neon lights, especially if you've got helpful rain to make roads reflect all the color.

The Pixel 4 takes this to a new extreme with an astrophotography mode that blends up to 16 shots, each 15 seconds long, to capture the stars and Milky Way.

Super resolution

One area where Google lagged Apple's top-end phones was zooming in to distant subjects. Apple had an entire extra camera with a longer focal length. But Google used a couple of clever computational photography tricks that closed the gap.

The first is called super resolution. It relies on a fundamental improvement to a core digital camera process called demosaicing. When your camera takes a photo, it captures only red, green or blue data for each pixel. Demosaicing fills in the missing color data so each pixel has values for all three color components.
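To see what demosaicing involves, here's a minimal Python sketch that fills in only the green channel. It assumes the common RGGB Bayer filter layout and the simplest possible method, averaging the neighboring green pixels. Real cameras use far more sophisticated interpolation, and the sample sensor values are made up.

```python
def bayer_color(row, col):
    """Which color filter covers a pixel in an RGGB Bayer layout."""
    if (row + col) % 2 == 1:
        return "G"
    return "R" if row % 2 == 0 else "B"


def green_at(mosaic, row, col):
    """Return measured green, or estimate it from adjacent green pixels."""
    if bayer_color(row, col) == "G":
        return mosaic[row][col]
    neighbors = []
    # At red and blue sites, the pixels above, below, left and right are green.
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + dr, col + dc
        if 0 <= r < len(mosaic) and 0 <= c < len(mosaic[0]):
            neighbors.append(mosaic[r][c])
    return sum(neighbors) / len(neighbors)


# Hypothetical raw sensor readout: one number per pixel, not three.
mosaic = [
    [30, 80, 30, 80],
    [84, 12, 88, 12],
    [30, 90, 30, 90],
    [86, 12, 92, 12],
]
print(green_at(mosaic, 0, 1))  # a green site: measured directly
print(green_at(mosaic, 1, 1))  # a blue site: green is an educated guess
```

The guess works well where neighboring pixels are similar and poorly where they aren't, which is why fine detail is the hard case.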

Google's Pixel 3 and Pixel 4 count on the fact that your hands wobble a bit when taking photos. That lets the camera figure out the true red, green and blue data for each element of the scene without demosaicing. And that better source data means Google can digitally zoom in to photos better than with the usual methods. Google calls it Super Res Zoom. (In general, optical zoom, like with a zoom lens or second camera, produces better results than digital zoom.)
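The hand-wobble idea can be illustrated with a toy Python sketch. This is not Google's algorithm. It assumes a one-dimensional sensor with a repeating red-green-blue stripe filter instead of a real two-dimensional Bayer grid, and it assumes each frame is shifted by a known whole number of pixels, where a real phone has to estimate tiny sub-pixel shifts. All names and values are invented for illustration.

```python
PATTERN = "RGB"  # hypothetical 1D stripe filter, not a real Bayer grid


def capture(scene, shift, n_pixels):
    """Sensor pixel p sees scene position p + shift through one color filter."""
    frame = []
    for p in range(n_pixels):
        channel = PATTERN[p % len(PATTERN)]
        frame.append((channel, scene[p + shift][channel]))
    return frame


def merge(frames_with_shifts, scene_length):
    """Collect every directly measured channel for each scene position."""
    recovered = [dict() for _ in range(scene_length)]
    for frame, shift in frames_with_shifts:
        for p, (channel, value) in enumerate(frame):
            recovered[p + shift][channel] = value
    return recovered


# Hypothetical scene: true red, green and blue at six positions.
scene = [{"R": 10 * i, "G": 10 * i + 1, "B": 10 * i + 2} for i in range(6)]
shifts = [0, 1, 2]  # the "wobble" between frames, in whole pixels
frames = [(capture(scene, s, n_pixels=4), s) for s in shifts]
recovered = merge(frames, len(scene))
print(recovered[2], recovered[3])
```

Positions covered by all three shifted frames end up with measured values for all three colors, so nothing has to be interpolated there.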

Super Res Zoom on the Pixel 4 benefits from a dedicated telephoto camera. Even though its focal length is only 1.85x that of the main camera, Super Res Zoom offers sharpness as good as a 3x optical lens, Google said.

On top of the super resolution technique, Google added a technology called RAISR to squeeze out even more image quality. Here, Google computers examined countless photos ahead of time to train an AI model on what details are likely to match coarser features. In other words, it's using patterns spotted in other photos so software can zoom in farther than a camera can physically.

iPhone's Deep Fusion

New with the iPhone 11 this year is Apple's Deep Fusion, a more sophisticated variation of the same multiphoto approach in low to medium light. It takes four pairs of images -- four long exposures and four short -- and then one longer-exposure shot. It finds the best combinations, analyzes the shots to figure out what kind of subject matter it should optimize for, then marries the different frames together.

The Deep Fusion feature is what prompted Schiller to boast of the iPhone 11's "computational photography mad science." But it won't arrive until iOS 13.2, which is in beta testing now.

Where does computational photography fall short?

Computational photography is useful, but the limits of hardware and the laws of physics still matter in photography. Stitching together shots into panoramas and digitally zooming are all well and good, but smartphones with more cameras have a better foundation for computational photography.

That's one reason Apple added new ultrawide cameras to the iPhone 11 and 11 Pro this year and Google gave the Pixel 4 a dedicated telephoto camera. And it's why the Huawei P30 Pro and Oppo Reno 10X Zoom have 5x "periscope" telephoto lenses.

You can do only so much with software.

Laying the groundwork

Computer processing arrived with the very first digital cameras. It's so basic and essential that we don't even call it computational photography -- but it's still important, and happily, still improving.

First, there's demosaicing to fill in missing color data, a process that's easy with uniform regions like blue skies but hard with fine detail like hair. There's white balance, in which the camera tries to compensate for things like blue-toned shadows or orange-toned incandescent lightbulbs. Sharpening makes edges crisper, tone curves make a nice balance of dark and light shades, saturation makes colors pop, and noise reduction gets rid of the color speckles that mar images shot in dim conditions.
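White balance is one of the easier steps to sketch. The Python example below uses the classic "gray world" assumption, that the colors in a scene should average out to neutral gray. It's one textbook approach and not necessarily what any particular camera does, and the sample pixels are made up.

```python
def gray_world(pixels):
    """Scale each color channel so the image averages out to neutral gray."""
    count = len(pixels)
    means = [sum(p[c] for p in pixels) / count for c in range(3)]
    gray = sum(means) / 3
    gains = [gray / m for m in means]
    return [
        tuple(min(255.0, p[c] * gains[c]) for c in range(3))
        for p in pixels
    ]


# Hypothetical gray objects photographed under orange incandescent light.
tinted = [(200, 150, 100), (100, 75, 50)]
balanced = gray_world(tinted)
print(balanced)
```

Here the orange cast is removed and both pixels come out neutral. The assumption breaks down for scenes dominated by one color, such as a sunset or a field of grass.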

Long before the cutting-edge stuff happens, computers do a lot more work than film ever did.

But can you still call it a photograph?

In the olden days, you'd take a photo by exposing light-sensitive film to a scene. Any fiddling with photos was a laborious effort in the darkroom. Digital photos are far more mutable, and computational photography takes manipulation to a new level far beyond that.

Google brightens the exposure on human subjects and gives them smoother skin. HDR Plus and Deep Fusion blend multiple shots of the same scene. Stitched panoramas made of multiple photos don't reflect a single moment in time.

So can you really call the results of computational photography a photo? Photojournalists and forensic investigators apply more rigorous standards, but most people will probably say yes, simply because the result mostly matches what your brain remembers from the moment you tapped that shutter button.

Google explicitly makes aesthetic choices about the final images its phones produce. Interestingly, it takes inspiration from historic Italian painters. For years, it styled HDR Plus results on the deep shadows and strong contrast of Caravaggio. With the Pixel 4, it chose to lighten the shadows to look more like the works of Renaissance painter Titian.

And it's smart to remember that the more computational photography is used, the more of a departure your shot will be from one fleeting instant of photons traveling into a camera lens. But computational photography is getting more important, so expect even more processing in years to come.

Originally published Oct. 9.

Updates, Oct. 15 and Oct. 16: Adds details from Google's Pixel 4 phones.