Bing AI Bungles Search Results at Times, Just Like Google

Expect search engines to tread carefully as tests reveal problems with AI language skills.

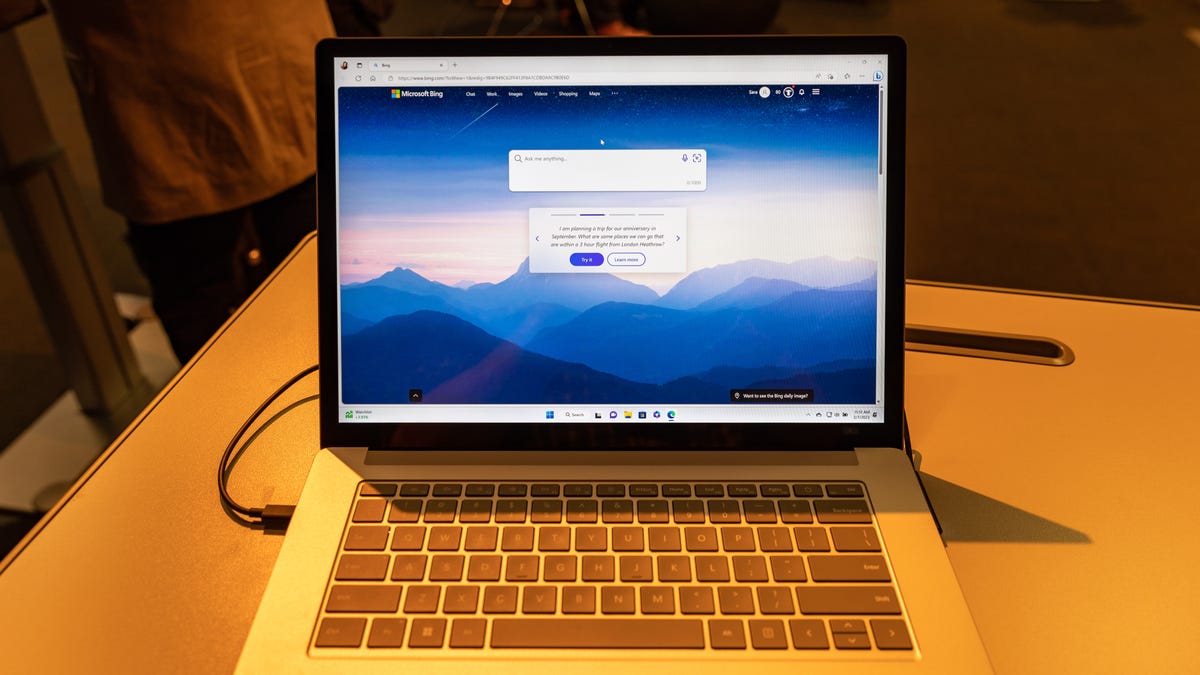

Microsoft search engine Bing is testing how to boost search results with new AI language processing technology.

A close reading of Bing's AI-boosted search results reveals that the website can make the same kinds of errors that are apparent in the ChatGPT technology it's built on and in Google's competing Bard.

A new version of Bing that's in limited testing employs large language model, or LLM, technology from OpenAI, the research lab that Microsoft invested in and that grabbed the tech spotlight with its ChatGPT AI chatbot. ChatGPT and related technology, trained on enormous swaths of the internet, can produce remarkable results, but they don't truly know facts and they can make errors.

Bing tries to avoid such errors by "grounding" results with Microsoft's search technology, assessing the veracity and authority of source documents and offering links to sources so people can better evaluate the results on their own. But AI and search engine researcher Dmitri Brereton and others have spotted errors in Bing's demo, including flawed financial data from a Gap quarterly earnings report.

It's a buzzkill moment for AI. The technology truly can produce remarkable and useful results, but the trouble is assessing when it isn't doing that. Expect more caution as the search engine industry tries to find the right formula.

Google felt the pain after a demo last week when its Bard tool, which isn't yet publicly available, produced erroneous information about the James Webb Space Telescope.

On Tuesday, Microsoft offered a response similar to what Google said about its gaffe: "We recognize that there is still work to be done and are expecting that the system may make mistakes during this preview period, which is why the feedback is critical so we can learn and help the models get better."

One basic problem is that large language models, even if trained using text like academic papers and Wikipedia entries that have passed some degree of scrutiny, don't necessarily assemble factual responses from that raw material.

As internet pioneer and Google researcher Vint Cerf said Monday, AI is "like a salad shooter," scattering facts all over the kitchen but not truly knowing what it's producing. "We are a long way away from the self-awareness we want," he said in a talk at the TechSurge Summit.

Summarizing documents might appear to be within AI's advanced language processing abilities, but constructing human-readable sentences without drawing in inappropriate information can be difficult. For example, in a request to summarize a spec sheet for Canon's new R8 mirrorless camera, Bing technology rattled off many features that actually are found in Canon's earlier R5.

Another high-profile computer scientist, former Stanford University Professor John Hennessy, was more bullish than Cerf, praising AI for its language skills in understanding search queries and generating results that often are correct. But he also cautioned, "It's always confident that it has the right answer even when it doesn't."

OpenAI itself offers the same warning. "ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging," the AI research lab said when it launched ChatGPT in November.

Editors' note: CNET is using an AI engine to create some personal finance explainers that are edited and fact-checked by our editors. For more, see this post.