AI, Frankenstein? Not so fast, experts say

It's easy to worry about what artificial intelligence will deal us. Some people are trying to sort things out before we get too far down the road.

Ask Apple's Siri digital assistant if she's evil, and she'll respond curtly, "Not really."

Repeat a famous line from the movie "2001: A Space Odyssey," in which a computer on a spaceship kills nearly all the human crew, and Siri groans. "Oh, not again."

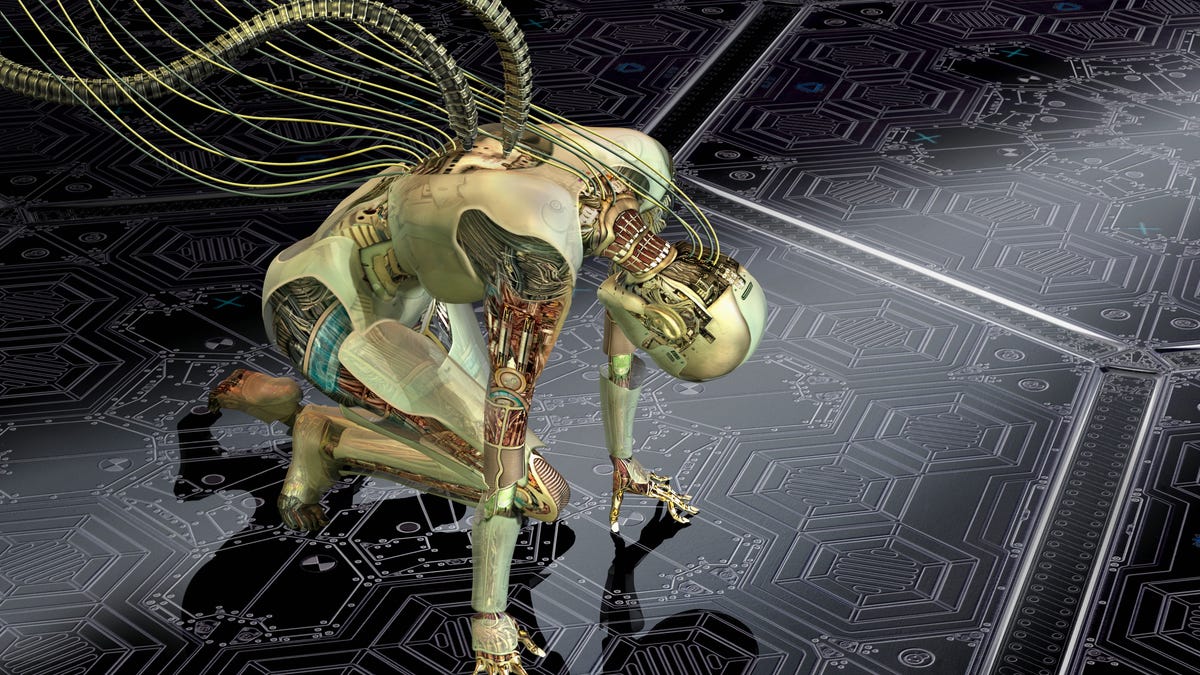

And who can blame her? We humans have a morbid fascination with machines rising up to wipe us out or to enslave us as cocooned, flesh-and-blood battery packs. You can see that vision of the future streaming over Netflix whenever you want.

Face it. For every lovable R2-D2 and C-3PO from the Star Wars universe, it's the dark side that seems to resonate most: the Cylons, who nearly eradicate human civilization in "Battlestar Galactica," or Skynet and its murderous robot warriors from the Terminator movies.

That gets under the skin of those working in the field of artificial intelligence, the science of computers that will someday resemble humans in their sweeping, world-altering capabilities, if not their physical form. AI is something we know, or think we know, better as a character from science fiction, grim or otherwise, than as an app in reality. The experts are having none of that.

"Most of the Hollywood scenarios that we see are completely implausible," says Yann LeCun, Facebook's director of AI research.

Still, there's a loud contingent of AI detractors out there, convinced that the emergence of highly sophisticated machines means grave dangers ahead. Science and technology luminaries from Stephen Hawking to Bill Gates to Elon Musk have warned us to proceed with caution or else -- even as researchers work to keep a grip on the AI reins.

Nick Bostrom, a philosopher and author of "Superintelligence: Paths, Dangers, Strategies," is at the forefront of sounding the alarm over the dangers. If machine intelligence can equal or surpass what we as humans can do, he and others say, then machines could replace us as the dominant force on Earth.

For Bostrom, the advent of superintelligent machines presents the "most important and daunting challenge humanity has ever faced."

In many small ways, artificial intelligence is already on the scene and getting smarter all the time. In March, an AI program from Google decisively defeated a human champion at Go, a complex board game long thought to be one of the toughest challenges for a computer. Facebook has built ever more complex mathematical and logical algorithms to determine the news articles and baby photos it shows us. AI can manage your stock portfolio, help doctors diagnose various forms of cancer or point you toward a soulmate, which arguably means AI already has a hand in breeding humans.

Meanwhile, Google, Musk's Tesla Motors and others are a few short years away from giving us fully self-driving cars. The US Navy, which has already shown that a drone aircraft can decide how best to fly itself onto and off an aircraft carrier, is working hard to incorporate AI on the battlefield.

Computer scientists have been thinking hard about AI since a handful gathered for a seminal 1956 conference at Dartmouth College for which the term was coined. Back then, though, it was largely a theoretical endeavor.

No longer. In recent years, AI has made major advances on the backs of powerful computers and networks that can handle the techniques of "deep learning," a complex superstructure of algorithms that enables computers to process high-level abstractions -- that is, to think more like humans. Google, for instance, employed deep learning in 2012 when an array of computers studied 10 million images to figure out, on its own, what a cat is.

Other tech titans, including Microsoft, IBM and Facebook, are also throwing money and researchers at artificial intelligence, while Toyota recently announced a $1 billion investment in AI research. A 2014 study published by the Machine Intelligence Research Institute (MIRI) estimated that the number of academic papers devoted to AI published annually has grown by 50 percent every five years since 1995.

What happens when we get there?

With so much attention on AI, you'd think we'd have a better sense of what grand form it will eventually take and how much it will change our lives. The fact is, we don't. What we do know is that AI has the potential to radically alter human society.

It's not a stretch to imagine that computers will someday do most of our jobs. One day, too, AI-infused software may make a life-or-death decision for us -- say, an autonomous weapon choosing its own targets or a self-driving car that must decide between a head-on collision that would injure its passengers and a sudden swerve onto a crowded sidewalk.

Computers are based on logic, and there's a dangerous side to that. Imagine an AI that decides to cure cancer by eradicating all life on Earth. That's the scenario author Stuart Armstrong poses in his book "Smarter than Us." Ask AI to increase the GDP, and it may burn down several cities because, after all, rebuilding them would create jobs and increase spending.

"We are spending billions on getting there as fast as possible, with absolutely no idea what happens when we get there," says Stuart Russell, a computer science professor at the University of California, Berkeley, and co-author of the textbook "Artificial Intelligence: A Modern Approach."

The good news is that some people are trying to figure out answers to the tough questions before we get too far down the road.

Musk, who has called AI the "biggest existential threat" to humanity, ponied up $10 million to fund the Future of Life Institute for research aimed at "keeping AI beneficial." He has also joined five other Silicon Valley heavyweights, including LinkedIn founder Reid Hoffman, who collectively pledged $1 billion to found OpenAI, a nonprofit research organization dedicated to ensuring AI advancement occurs in a manner beneficial to the common good.

MIRI, based in a generic office building in Berkeley, is trying to answer that nagging question in our heads, spoken with an Arnold Schwarzenegger accent: What could happen if AI has access to vital internet systems or computerized weapons, with the ability to make high-level decisions?

"The basic safety concern for smarter-than-human AI systems is that it's difficult to fully specify correct goals for such systems," says Nate Soares, MIRI's executive director. "The danger with systems that have misspecified goals is that they're likely, by default, to have incentives to compete with humans for limited resources and control."

So yeah, there are survival-of-the-fittest concerns.

Looking at the bright side

There's also a positive side to the scale. AI could save countless lives that would otherwise be lost to diseases, hospital errors or careless human drivers, or it could help us figure out how to drive back climate change, says Eric Horvitz, founder of Stanford University's 100-year study on artificial intelligence and director of the Microsoft Research Lab in Redmond, Washington.

"It's quite possible that the upsides way outweigh the downsides, and the downsides we can address with careful consideration and proactive work," Horvitz says.

Not everyone thinks we need to worry so much about these issues now. Sam Altman, co-chairman of OpenAI, predicts we'll be in good shape if artificial intelligence is cultivated in a transparent way. That means decisions about its advance would happen with input from policymakers, academics and industry leaders rather than one company using AI to enhance profits, consolidate power or act with reckless abandon.

At its San Francisco office, OpenAI works to advance artificial intelligence in ways that benefit humanity as a whole.

Making the technology easily available is the way to make it safe, says Altman. "If you have the power widely distributed, then groups of good humans will band to stop the bad ones."

OpenAI plans to share its research with anyone interested, and it encourages others to do the same. In that spirit, Google last year announced that it would make TensorFlow, its second-generation AI software, open source, or freely available to everyone. It will soon be available on iPhones as well. Facebook, too, has said it would make available the code for Torch, software that teaches computers how to think.

Facebook CEO Mark Zuckerberg is one of the AI positivists. "We should not be afraid of AI," he said in January in a Facebook post. "Instead, we should hope for the amazing amount of good it will do in the world."

For now, AI is good at sorting things, making predictions and powering through algorithms, but it struggles to think critically or solve problems you'd find in standardized tests. The Allen Institute for Artificial Intelligence recently devised a contest in which the computer program that scored the best on an eighth-grade science test would win $50,000 for its creator. They all failed. The best score was 54 percent.

The lesson here is that an advanced AI will take a long time to develop "because of the way we build things," says Facebook's LeCun. "Most of the AI of the next couple decades will be very specialized."

Still, Siri will undoubtedly get smarter, these experts say, and groups such as MIRI and OpenAI will be keeping watch to make sure it is more of a BB-8 than a Skynet.

In the meantime, even alarmists such as Bostrom are eager to see how AI develops.

"I think it would be tragic," he says, "if humanity never got to develop machine superintelligence."