Facebook starts building AI with an ethical compass

The social network's engineers have a tool called Fairness Flow to find bias in their algorithms. Also: Facebook open-sourced an AI that plays StarCraft.

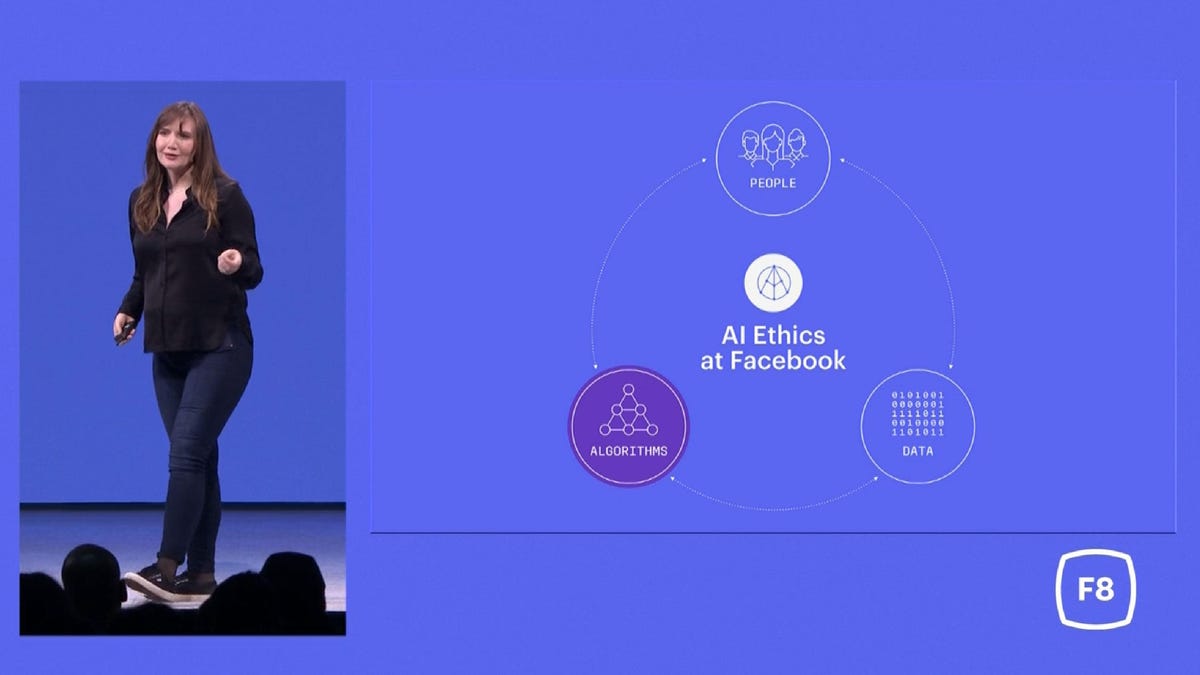

As Facebook's artificial intelligence technology gets smarter and more important to the social network's sprawling business, the company is working to keep its AI systems from ethical lapses.

The company has built a system called Fairness Flow that can measure potential biases for or against particular groups of people, research scientist Isabel Kloumann said at Facebook's F8 conference on Wednesday.

"We wanted to ensure jobs recommendations weren't biased against some groups over others," Kloumann said. To that end, Fairness Flow checks for differences in how the system treats men versus women, or people under 40 versus older people. That's probably helpful given Silicon Valley's struggles to deal with sex and age discrimination.
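Facebook didn't describe Fairness Flow's internals. As a rough illustration of what "checking for differences" between groups can mean, here is a minimal Python sketch of one common fairness check: comparing how often each group receives a positive outcome, such as being shown a job. The function names, group labels and data are hypothetical, and this is not Facebook's implementation.

```python
from collections import defaultdict

def rate_by_group(records):
    """Return the share of positive outcomes (e.g. 'job was recommended') per group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        positives[group] += int(recommended)
    return {g: positives[g] / totals[g] for g in totals}

def max_rate_gap(records):
    """Largest difference in positive-outcome rate between any two groups."""
    rates = rate_by_group(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical toy data: (age bracket, was the job recommended?)
toy = [
    ("under_40", True), ("under_40", True), ("under_40", False), ("under_40", True),
    ("40_plus", True), ("40_plus", False), ("40_plus", False), ("40_plus", False),
]

print(rate_by_group(toy))  # {'under_40': 0.75, '40_plus': 0.25}
print(max_rate_gap(toy))   # 0.5
```

A large gap doesn't prove discrimination on its own, but it flags where engineers should look more closely.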

It's a good time for Facebook to be working on its ethics smarts. The company is under fire for lapses of its own when it comes to protecting its 2 billion users' privacy and for letting Russians manipulate US elections through Facebook. Chief Executive Mark Zuckerberg on Tuesday pledged to do better, and on Wednesday, Facebook announced it's using AI to remove content from its social network involving nudity, graphic violence, terrorist content, hate speech, spam, fake accounts and suicide.

Fairness Flow began with job recommendations, but it's now available for other uses.

Avoiding biased AIs

"Now we're working to scale the Fairness Flow to evaluate the personal and societal implications of every product that we build. As a step in that direction, we've integrated the Fairness Flow into our internal machine learning platform," Kloumann said. "Any engineer can plug in to this technology and evaluate their algorithms for bias."

Bias is a problem for today's artificial intelligence technology and the machine learning processes behind it. AIs learn from real-world data, a process that produces spectacular results for things like image recognition but can also absorb biases present in the original source material. For example, if training data contains more photos of women in kitchens than of men, an AI can learn to associate kitchens with women.
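A deliberately oversimplified Python sketch shows how that happens: a "model" that simply memorizes the most common label for each scene in its training data. The scenes, labels and counts are invented for illustration. Real image-recognition systems are far more complex, but they can pick up the same kind of statistical shortcut.

```python
from collections import Counter, defaultdict

def train_majority_model(examples):
    """For each scene, remember the most common person label seen in training."""
    counts = defaultdict(Counter)
    for scene, person in examples:
        counts[scene][person] += 1
    return {scene: c.most_common(1)[0][0] for scene, c in counts.items()}

# Hypothetical, skewed training set: most kitchen photos show women.
training = (
    [("kitchen", "woman")] * 8 + [("kitchen", "man")] * 2
    + [("garage", "man")] * 7 + [("garage", "woman")] * 3
)

model = train_majority_model(training)
print(model["kitchen"])  # woman
print(model["garage"])   # man
```

The model now guesses "woman" for every kitchen photo, regardless of who is actually in it; the skew in the data has become a rule.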

AI is a curiously academic part of the computing industry, as evidenced by things like Apple researchers' insistence they be allowed to publish research papers.

Other Facebook AI projects

Facebook has one of the top AI programs in tech, and the company is starting to show more of what it's up to. At F8, it touted several projects, most of them released as open-source software so others can retrace Facebook's steps, learn from them and perhaps improve on them. Without Facebook's massive computing resources, though, it can be tough to keep up.

Among the other projects:

- TorchCraft, a system for training AIs to play Blizzard Entertainment's StarCraft real-time strategy video game.

- A related project, Elf, is a platform for AIs that play a broader range of games. Facebook also released a Go-specific version, Elf OpenGo, modeled on Google's AlphaGo Zero, the AI that famously mastered the strategy game Go. Elf OpenGo now plays Go at a professional level, Facebook Chief Technology Officer Mike Schroepfer said.

- DensePose, which uses AI to figure out where human figures are in a video and to identify their 3D structure.

- The Translate project is designed to improve AI language translation, a hot area where other tech powers such as Google and Microsoft are also active.

- The Muse project, which aims to help AIs handle multiple languages in natural language processing, a key area these days.

- Work to use Instagram hashtags to improve the accuracy and detail of image recognition.

- An AI system that figures out the best way to place radio stations for high-speed wireless networks.

Many of the projects are detailed on the new Facebook.ai site the company launched Wednesday.

First published May 2, 12:11 p.m. PT.

Update, 1:04 p.m.: Adds details about other AI projects.
