Q&A: Robotics engineer aims to give robots a humane touch

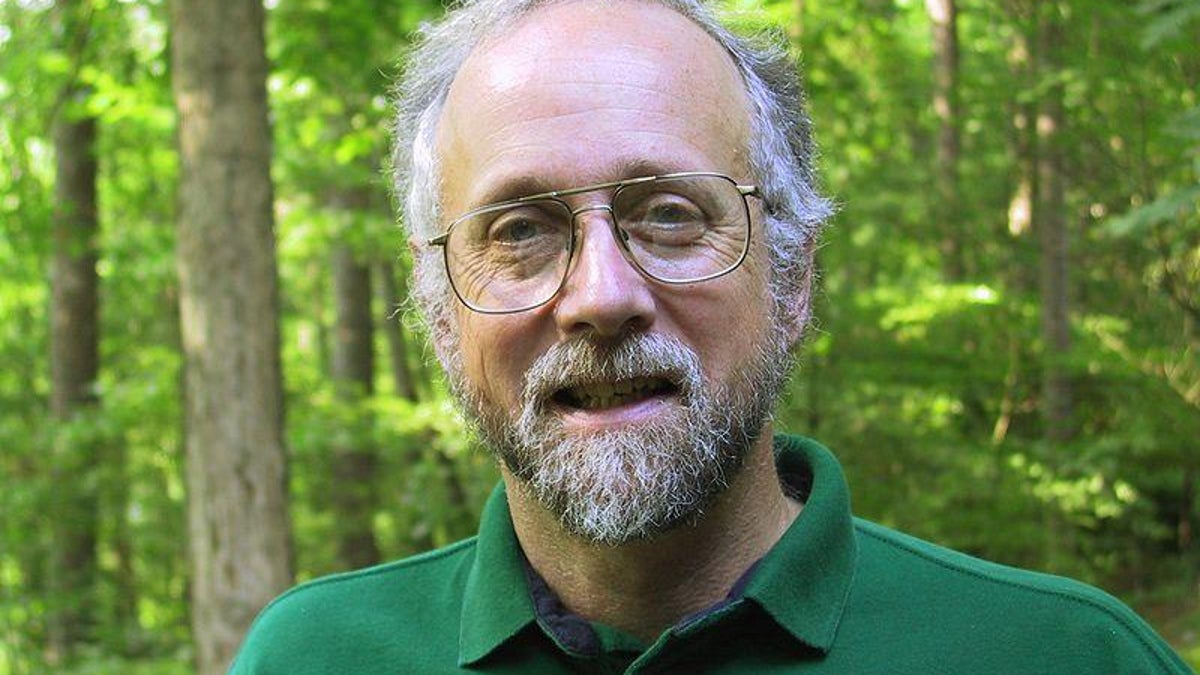

Ronald Arkin has created prototype software for the Army that could configure robots with a built-in "guilt system," potentially making them better than human soldiers at avoiding civilian casualties.

Can robots be more humane than humans in fighting wars? Robotics engineer Ronald Arkin of the Georgia Institute of Technology believes this is a not-too-distant possibility. He has just finished a three-year contract with the U.S. Army designing software to create ethical robots.

As the U.S. military increasingly uses robots, Arkin has devoted his work to configuring them with a built-in "guilt system" that eventually could make them better than human soldiers at avoiding civilian casualties. These military robots would be embedded with internationally prescribed laws of war and rules of engagement, such as those in the Geneva Conventions.

Arkin talked with CNET News about how robots can be ethically programmed and some of the philosophical questions that come up when using machines in warfare. Below is an edited excerpt of our conversation.

Q: What made you first begin thinking about designing software to create ethical robots?

Arkin: I'd been working in robotics for almost 25 years, and I noticed the successes that had been happening in the field. Progress had been steady and sure, and it started to dawn on me that these systems were ready, willing, and able to begin going out onto the battlefield on behalf of our soldiers. Then the question came up--what is the right ethical basis for these systems? How are we going to ensure that they behave appropriately, to the standards we set for our human war fighters?

In 2004, at the first international symposium on roboethics in Sanremo, Italy, we had speakers from the Vatican, the Geneva Conventions, the Pugwash Institute, and it became clear that this was a pressing problem. Trying to view myself as a responsible scientist, I felt it was important to do something about it, and that set me on this quest.

What do you mean by an ethical robot? How would a robot feel empathy?

Arkin: I didn't say it would feel empathy, I said ethical. Empathy is another issue and that is for a different domain. We are talking about battlefield robots in the work I am currently doing. That is not to say I'm not interested in those other questions and I hope to move my research, in the future, in that particular direction.

Right now, we are looking at designing systems that can comply with internationally prescribed laws of war and our own codes of conduct and rules of engagement. We've decided it is important to embed the moral emotion of guilt in these systems. We use it as a means of downgrading the robot's ability to engage targets if it is acting in ways that exceed the predicted battle damage in certain circumstances.

You've written about a built-in "guilt system." Is this what you're talking about?

Arkin: We have incorporated a component called an "ethical adaptor" by studying the models of guilt that human beings have and embedding those within a robotic system. The purpose of this is very focused, and what makes it tractable is that we're dealing with something called "bounded morality," which means understanding the particular limits of the situation the robot is to operate in. We have thresholds established for analogs of guilt that cause the robot to eventually refuse to use certain classes of weapons systems (or refuse to use weapons entirely) if it gets to a point where the predictions it's making are unacceptable by its own standards.
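To make the threshold idea concrete, here is a minimal, hypothetical sketch in Python. It is a toy model, not the formalism from Arkin's project, and every name in it (`EthicalAdaptorSketch`, `record_outcome`, `class_A`, the numeric thresholds) is invented for illustration. It assumes that guilt only grows, and only when observed damage exceeds what was predicted. It also assumes each weapon class carries its own guilt threshold: once guilt reaches a class's threshold, that class is withdrawn, and once every threshold is passed the system refuses to use weapons at all.

```python
from dataclasses import dataclass


@dataclass
class EthicalAdaptorSketch:
    """Toy guilt-threshold restraint (hypothetical; not Arkin's actual model)."""

    # Assumed mapping from a generic weapon-class label to its guilt threshold.
    thresholds: dict[str, float]
    guilt: float = 0.0

    def record_outcome(self, predicted_damage: float, observed_damage: float) -> None:
        # Guilt increases only by the amount observed damage exceeds the prediction;
        # outcomes at or below the prediction leave guilt unchanged.
        excess = observed_damage - predicted_damage
        if excess > 0:
            self.guilt += excess

    def permitted(self) -> list[str]:
        # A class remains available only while accumulated guilt is below its threshold.
        return [name for name, limit in self.thresholds.items() if self.guilt < limit]

    def refuses_all(self) -> bool:
        # True once every class has been withdrawn.
        return not self.permitted()


adaptor = EthicalAdaptorSketch({"class_A": 2.0, "class_B": 5.0})
adaptor.record_outcome(predicted_damage=1.0, observed_damage=3.5)
print(adaptor.permitted())  # ['class_B']
adaptor.record_outcome(predicted_damage=0.0, observed_damage=3.0)
print(adaptor.refuses_all())  # True
```

Making guilt monotonic is a deliberate simplification here. It means a restriction, once triggered, stays in place for the rest of the mission, which mirrors the "eventually refuse" behavior described in the interview.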

You, the engineer, decide the ethics, right?

Arkin: We don't engineer the ethics; the ethics come from treaties that have been designed by lawyers and philosophers. These have been codified over thousands of years and now exist as international protocol. What we engineer is translating those laws and rules of engagement into actionable items that the robot can understand and work with.

Arkin: We actually just finished, as of July 1, the three-year project we had with the U.S. Army: designing prototype software for the U.S. Army Research Office. This isn't software that is intended to go onto the battlefield anytime soon--it is all proof of concept--we are striving to show that the systems can potentially function with an ethical basis. I believe our prototype design has demonstrated that.

Robot drones like land mine detectors are already used by the military, but are controlled by humans. How would an autonomous robot be different in the battlefield?

Arkin: Drones usually refer to unmanned aerial vehicles. Let me make sure we're talking about the same sort of thing--you're talking about ground vehicles for detecting improvised explosive devices?

Either one, either air or land.

Arkin: Well, they'd be used in different ways. There are already existing autonomous systems that are either in development or have been deployed by the military. It's all a question of how you define autonomy. The trip-wire for how we talk about autonomy, in this context, is whether an autonomous system (after detecting a target) can engage that particular target without asking for any further human intervention at that particular point.

There is still a human in the loop when we tell a squad of soldiers to go into a building and take it using whatever force is necessary. That is still a high-level command structure, but the soldiers have the ability to engage targets on their own. With the increased battlefield tempo, things are moving much faster than they did 40 or 100 years ago, and it becomes harder for humans to make intelligent, rational decisions. As such, it is my contention that these systems, ultimately, can lead to a reduction in non-combatant fatalities relative to human-level performance. That's not to say that I don't have the utmost respect for our war fighters on the battlefield; I most certainly do, and I'm committed to providing them with the best technical equipment in support of their efforts as well.

In your writing you say robots can be more humane than humans on the battlefield. Can you elaborate on this?

Arkin: Well, I say that's my thesis; it's not a conclusion at this point. I don't believe unmanned systems will be able to be perfectly ethical on the battlefield, but I am convinced (at this point in time) that they can potentially perform more ethically than human soldiers are capable of. I'm talking about wars 10 to 20 years down the road. Much more research and technology has to be developed for this vision to become a reality.

But I believe it's an important avenue of pursuit for military research. So, if warfare is going to continue and if autonomous systems are ultimately going to be deployed, I believe it is crucial that we have ethical systems on the battlefield. I believe that we can engineer systems that perform better than humans--we already have robots that are stronger than people, faster than people, and if you look at computers like Deep Blue we have robots that can be smarter than people.

I'm not talking about replacing a human soldier in all aspects; I'm talking about using these systems in limited circumstances such as counter-sniper operations or taking buildings. Under those circumstances we can engineer enough morality into them that they may indeed do better than human beings can--that's the benchmark I'm working toward.

Arkin: Well, a lot of this has been sharpened by debates with my colleagues in philosophy and computer science, and with Computer Professionals for Social Responsibility. There are a lot of things that could potentially go wrong. One of the big questions (much of this is derived from what's called "just war theory") is responsibility--if there is a war crime, someone must be to blame. We have worked hard within our system to make sure that responsibility attribution is as clear as possible using a component called the "responsibility advisor." To me, you can't say the robot did it; maybe it was the soldier who deployed it, the commanding officer, the manufacturer, the designer, the scientist (such as myself) who conceived of it, or the politicians who allowed it to be used. Somehow, responsibility must be attributed.

Another aspect is that technological advancement on the battlefield may make us more likely to enter into war. To me it is not unique to robotics--whenever you create something that gives you any kind of advantage, whether it is gunpowder or a bow and arrow, the temptation to go to war grows. Hopefully our nation has the wherewithal to resist such temptation.

Some argue it can't be done right, period--it's just too hard for machines to discriminate. I would agree it's too hard to do it now. But with the advent of new sensors and network-centric warfare, where all this stuff is wired together along with the Global Information Grid, I believe these systems will have more information available to them than any human soldier could possibly process and manage at a given point in time. Thus, they will be able to make better-informed decisions.

The military is concerned with squad cohesion. What happens to the "band of brothers" effect if you have a robot working alongside a squad of human soldiers, especially if it's one that might report back on moral infractions it observes by other soldiers in the squad? My contention is that if a robot can take a bullet for me, stick its head around a corner for me, and cover my back better than Joe can, then maybe that is a small risk to take. Second, it can reduce the risk of human infractions on the battlefield by its mere presence.

The military may not be happy with a robot that has the capability of refusing an order. So we have to design a system that can explain itself. With some reluctance, I have designed an override capability for the system. But the robot will still inform the user of all the potential ethical infractions that it believes it would be committing, and thus force the responsibility onto the human. Also, when that override is taken, the aberrant action could be sent immediately to command for after-action review.

There is a congressional mandate requiring that by 2010, one third of all operational deep-strike aircraft be unmanned and, by 2015, one third of all ground combat vehicles be unmanned. How soon could we see this autonomous robot software being used in the field?

Arkin: There is a distinction between unmanned systems and autonomous unmanned systems. That's the interesting thing about autonomy--it's kind of a slippery slope; decision-making can be shared.

First, it can be under pure remote control by a human being. Next, there's mixed initiative where some of the decision making rests in the robot and some of it rests in the human being. Then there's semi-autonomy where the robot has certain functions and the human deals with it in a slightly different way. Finally, you can get more autonomous systems where the robot is tasked, it goes out and does its mission, and then returns (not unlike what you would expect from a pilot or a soldier).

The congressional mandate was marching orders for the Pentagon, and the Pentagon took it very seriously. It's not an absolute requirement, but significant progress is being made. Some of the systems are far more autonomous than others--for example, the PackBot in Iraq is not very autonomous at all. It is used for finding improvised explosive devices by the roadside, and many of these robots have saved the lives of our soldiers by taking the explosion on their behalf.

You just came out with a book called "Governing Lethal Behavior in Autonomous Robots." Do you want to explain in a bit more detail what it's about?

Arkin: Basically, the book covers the space of this three-year project that I just finished. It deals with the basis, motivation, and underlying philosophy of the work, as well as the opinions people hold, drawn from a survey we did for the Army on the use of lethal autonomous robots on the battlefield. It provides the mathematical formalisms underlying our approach and deals with how they are to be represented internally within the robotic system. And, most significantly, it describes several scenarios in which I believe these systems can be used effectively, with some preliminary prototype results showing the progress we made in this period.