Can a social network curb racial profiling?

Nextdoor became a magnet for racial profiling. An update aims to reduce such incidents, but what responsibility does the community message board have to do so?

Technology enables people to be more social, more efficient, even more aware of the world. Sometimes, it also encourages behaviors many would prefer not to emphasize.

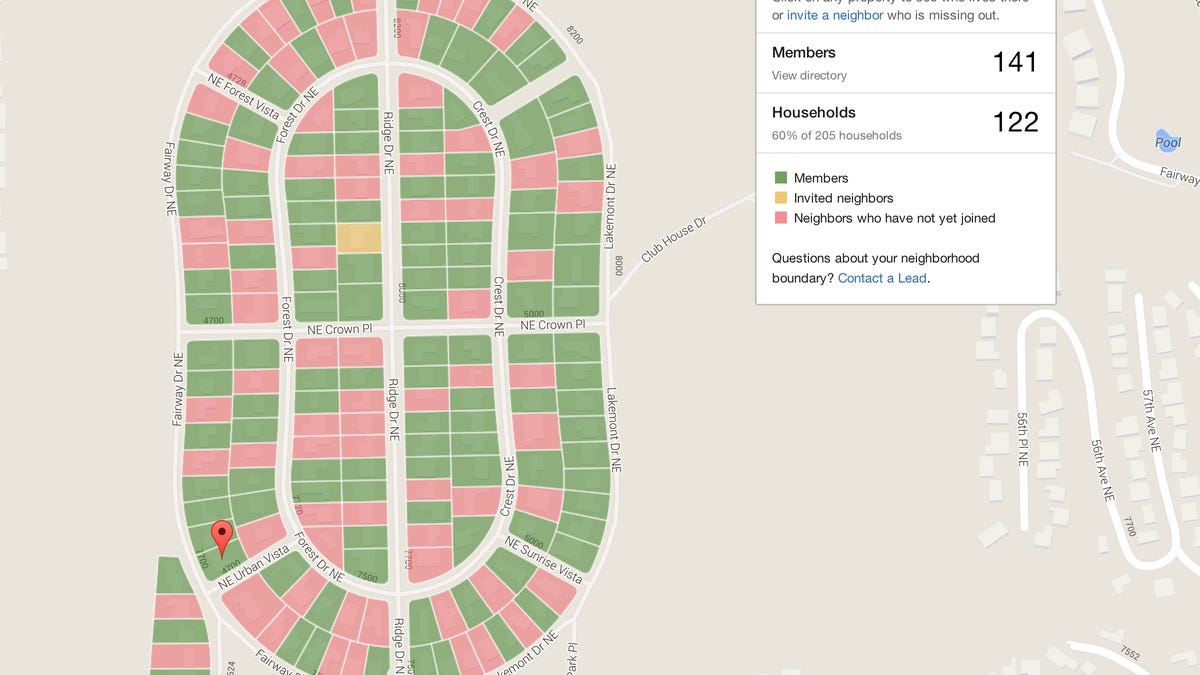

That's been the case for Nextdoor, the location-based social network meant for neighborhoods, where neighbors can post community-related messages to each other about lost dogs and other local matters.

It's also become a place where people, whether they realize it or not, can engage in racial profiling -- voicing suspicion about someone based on race rather than behavior. For example, the East Bay Express reported last October that users posting to the site's crime and safety section warned of black individuals walking down the street or driving through an area. Some even questioned whether a black mail carrier was a burglar. The suspicion comes not from the activity, but from biases relating to the person's looks.

Nextdoor on Wednesday discussed the steps it's taking to minimize this behavior. When posting to crime and safety, users will have to fill out a form instead of writing in a text box. The form prompts them for at least two descriptors, such as hair color, height or approximate age, and an algorithm screens each post for racially charged language and for length. A post that doesn't pass muster won't go up unless the user revises it. During tests, this reduced racial profiling by 75 percent, the company said.
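To make the screening described above concrete, here is a minimal sketch of how such a check might work. It is not Nextdoor's code: the company has not published its algorithm, and the word list, the length threshold, the field names and the `screen_post` function are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical word list and threshold; the real system's rules are not public.
RACE_TERMS = {"black", "white", "asian", "hispanic", "latino"}
MIN_BEHAVIOR_LENGTH = 40  # assumed minimum length for the behavior description


@dataclass
class SafetyPost:
    """A crime-and-safety post submitted through a structured form."""
    behavior: str                                     # what the person was doing
    descriptors: dict = field(default_factory=dict)   # e.g. {"height": "about 6 feet"}


def screen_post(post: SafetyPost) -> list:
    """Return a list of problems; an empty list means the post may go up."""
    problems = []

    # Length check: a very short post rarely describes actual behavior.
    if len(post.behavior.strip()) < MIN_BEHAVIOR_LENGTH:
        problems.append("too_short")

    # Language check: flag race terms used in the behavior description.
    words = {w.strip(".,!?;:").lower() for w in post.behavior.split()}
    if words & RACE_TERMS:
        problems.append("race_in_behavior")

    # Descriptor check: require at least two filled-in details other than race.
    filled = [k for k, v in post.descriptors.items() if v.strip() and k != "race"]
    if len(filled) < 2:
        problems.append("too_few_descriptors")

    return problems


# Usage: the user would be asked to revise any post that returns problems.
vague = SafetyPost("Black man walking down the street", {"race": "black"})
detailed = SafetyPost(
    "Someone tried the door handles of several parked cars on Elm Street",
    {"hair_color": "brown", "height": "about 6 feet"},
)
print(screen_post(vague))
print(screen_post(detailed))
```

A simple word match like this would miss coded language and flag innocent uses, such as "a white van," so a real system would need more than a word list.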

The new algorithm follows Nextdoor's introduction in November of a button that lets users flag posts they think racially profile someone.

There's a practical element to the structure change on crime and safety posts. Details like height, age, hair color, clothing and shoes are better suited to actually finding a person, should police need to be involved.

In part, all this has come about because Nextdoor found itself under pressure to take action. Nextdoor CEO Nirav Tolia also told CNET that if the site's mission is to create community, something like racism only serves to divide.

"Even a single post can create so much damage, we felt we had a moral obligation to do something about it," Tolia said.

Before users even get to the new form, Nextdoor warns them to consider what they post and to focus on behavior. The message outright says identifying someone solely by race is not helpful.

Tech's role in dealing with racism

It's a tricky situation. The worst behaviors that come out online are ones that exist in the real world. Computers aren't racist. People are.

For David Robinson, principal at Upturn -- a technology firm in Washington that works on policy issues with civil rights groups -- that leads to a question: "What are the opportunities we have with these new technologies to address these longstanding problems in new ways?"

No one is obligated to do anything, exactly, but as is true in the offline world, there are behaviors dictated by the law, and behaviors dictated by what society deems acceptable.

A platform like Nextdoor has the chance to consider how to create structures where people can be good to each other, Robinson said. And that did occur to Tolia.

"While we didn't know if it was even possible to change people's behavior through features on the website, we needed to try," Tolia said.

But Robinson sees a catch in the wider tech world. "The bigger question is, do the people running these platforms connect with these problems and take them seriously?"

Nextdoor isn't the first app to run into racism among users. A Harvard Business School study showed that users with a "distinctly African American name" are 16 percent less likely to be accepted on Airbnb. There's even a hashtag: #Airbnbwhileblack. Twitter regularly incurs criticism for not doing enough about racism and misogyny, among other things.

It's also not the first app to have to tell users not to do something socially or legally undesirable -- Pokemon Go warns players not to trespass when they log in.

Still, it often takes a tussle to get the attention of different platforms.

We live in a physical world that's hard to change, Robinson said. There's no overnight solution for problems like residential segregation. But technology platforms can change our world with a few lines of code.

"There's so much more freedom and flexibility that these platform operators have to change how our experiences work," he said.