Apple won't listen to Siri recordings without your permission anymore

It plans to continue its Siri review program in the fall, but it will only get audio recordings from people who opt in to provide that data.

Apple had been using Siri recordings to improve its artificial intelligence. Going forward, it will do so only with recordings from users who opt in.

Your conversations with Siri won't be going directly to Apple anymore. The tech giant said Wednesday that it will stop retaining voice recordings by default.

Apple announced the Siri privacy changes after The Guardian reported in July that contractors were regularly listening to conversations from people giving voice commands. Although the data is anonymized, those conversations included identifying details such as people's names and medical information, as well as recordings of drug deals and people having sex, according to the report.

In early August, Apple said it would suspend the contractor program, and before Wednesday's announcement, it let go of at least 300 contractors in Europe who had worked on that program.

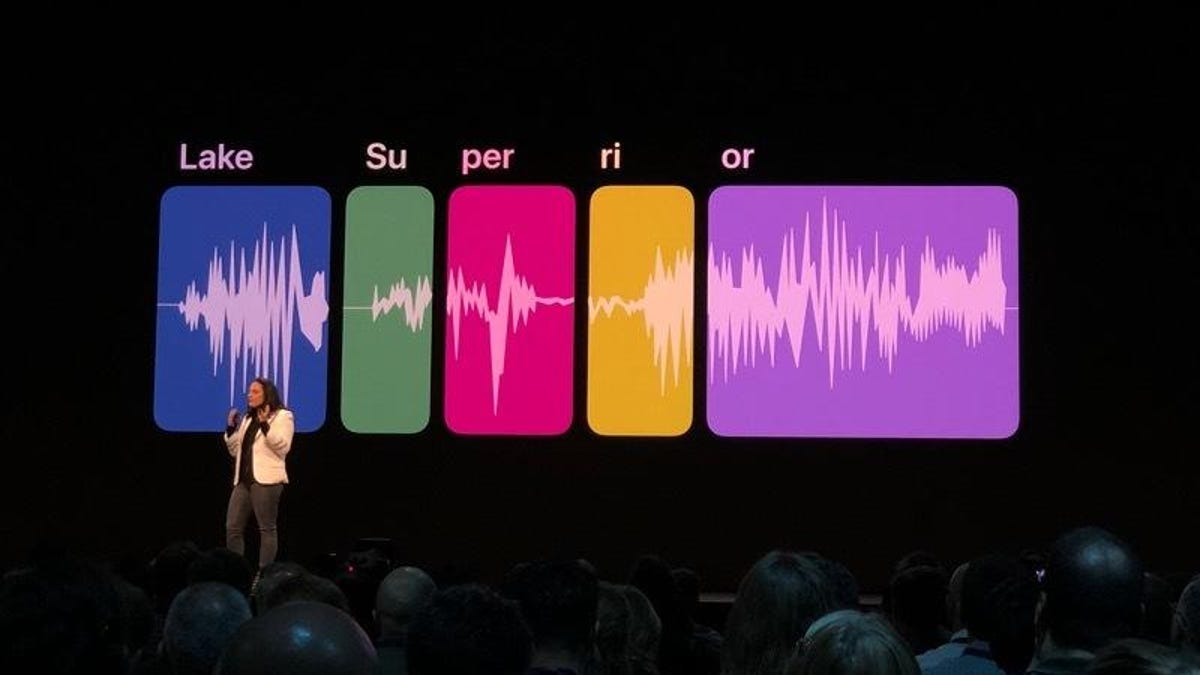

Apple had contractors listen to Siri conversations to augment the voice assistant's artificial intelligence. That human guidance helped improve the software through a process called "grading." Apple will continue grading, but it will no longer retain audio recordings by default and will review audio only from users who opt in.

"We created Siri to help them get things done, faster and easier, without compromising their right to privacy. We are grateful to our users for their passion for Siri, and for pushing us to constantly improve," the company said in a statement.

Apple said it intends to continue the grading program in the fall, but only Apple employees, not contractors, will be able to listen to audio recordings. If reviewers determine that a recording was triggered accidentally, they'll delete it.

Unlike Google's and Amazon's voice assistants, Siri doesn't let people delete their audio recordings. That's because Google and Amazon link recordings to a person's account on their services, while Apple associates Siri recordings with a random identifier, which you can reset in your settings. In its statement, Apple said it believes that approach is "unique among the digital assistants." The random identifier is discarded after six months, Apple said.

After Apple suspended its grading program, Amazon followed suit, allowing people to disable human reviews of Alexa recordings. Google also halted human reviews of its Assistant recordings after German regulators ordered it to stop.

Having humans listen in on conversations with digital assistants is a widespread practice in the tech industry. Microsoft, for instance, used contractors to listen to voice commands given to Xbox consoles, Motherboard reported. In August, Facebook paused human reviews of audio chats on Messenger after Bloomberg reported that the social network paid contractors to transcribe conversations.

For users who don't opt in, Apple said it would continue using computer-generated transcripts of their requests to help improve Siri.

Originally published at 8:15 a.m. PT.

Updated at 8:30 a.m. PT: To add more details and context on Apple's changes.