A new study discovers turning off a pleading robot isn't easy

A robot isn't technically alive, but if one begs you to stay away from its off button, what will you do?

R2-D2, C-3PO, Rosie, Data, K-9 and Rachael the Replicant are all robots we've grown up loving in our favorite movies and TV shows. Between them, these famous pop culture robots have seemed to show every feeling from fear to love.

So when we're face to face with social robots programmed to entertain us, is it really so hard to believe that we have trouble flicking their off switch when they beg us not to?

That's exactly what a new study, published on July 31 in the open access journal PLOS One, wanted to find out.

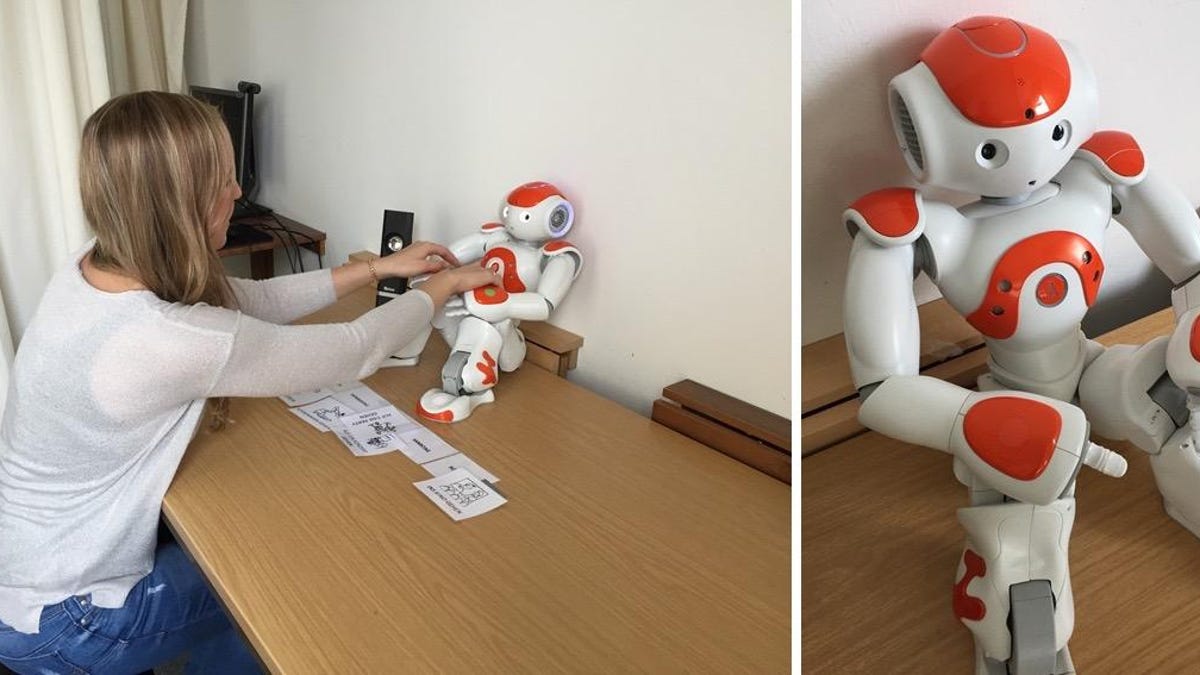

The study's 89 volunteers were asked to complete basic social tasks, like answering questions about their favorite foods, and functional tasks, like planning a schedule, all with the help of Nao, a cute humanoid robot. The volunteers were told that the tasks would help improve the robot's learning algorithms.

But the real test came at the end, when the volunteers had to turn off the robot even as it pleaded with them not to.

When Nao begged the volunteers with lines like "Please do not switch me off!" and told them it was afraid of the dark, the final task of hitting the off button turned out to be hard to do.

Nao was programmed to plead with only half of the volunteers, and 13 of those took pity on the robot and refused to turn it off. The other volunteers who heard the robot beg took three times as long as those who heard no objection to decide whether or not to side with the robot.

"Triggered by the objection, people tend to treat the robot rather as a real person than just a machine by following or at least considering to follow its request to stay switched on," the study stated.

So if a robot's social skills and its objections can discourage people from switching it off, what does that say about us as humans?

According to the study, our tendency to treat non-human media, like computers and robots, as if they were human is a well-documented phenomenon called "the media equation," described in 1996 by the psychologists Byron Reeves and Clifford Nass.

Their studies found that people are often polite to computers and even treat computers with female voices differently from male-voiced ones.

More recent studies have found that we tend to like robots that are social, so when Nao showed off its talent for small talk, the volunteers told to switch it off had second thoughts once the robot itself protested.

However, that doesn't mean all future robots will be able to chit-chat their way out of being shut down for the day. The study's authors don't think we have anything to fear from our empathy toward robots, but they do point out that we may have to get used to the idea of not being the only ones on the planet with likeable social skills.